Marking centrally managed exams in 2021

By Steve Rowett, on 22 March 2021

On this page:

- Background information about UCL’s approach to centrally managed exams for 2021

- Options for marking student-submitted papers, with walkthrough videos and links to further information

- Guidance for external examiners viewing papers and marks

- Evidence about online marking, with hints and tips to make it easier

- Details of training sessions and help and support available during the exams period

Please note that this page will be updated regularly.

- 19 April 2021 – details of daily drop-ins added

- 23 April 2021 – clarified that the training and drop-ins are relevant to markers, moderators, the Module Lead and Exam Liaison Officers.

- 5 May 2021 – link to the recording of the marker training session (UCL staff login required) and to the slides for the session added.

- 14 June 2021 – guidance for external examiners using AssessmentUCL added.

- 11 August 2021 – Late Summer Assessment (LSA) training and drop-in information added.

Background

As part of UCL’s continued COVID-19 response, centrally managed examinations for 2021 will be held online. Approximately 19,000 students will sit over 1,000 exam papers, resulting in about 48,000 submitted pieces of work. These exams are timetabled and, for the most part, students will submit a PDF document as their response. Students have been given their exam timetable and guidance on creating and submitting their documents. The exceptions are some ‘pilot’ examinations that are taking place using other methods on the AssessmentUCL platform; unless you are within that pilot group, the methods described here apply.

The move to 24-hour online assessments in place of traditional exams creates a challenge for those who have to mark the work. This blog post updates a similar post from last year with revised guidance, although the process is broadly the same.

Physical exams are being replaced with 24-hour online papers, scheduled through the exam timetabling system. Some papers will be available for students to complete over the full 24 hours; in other cases students ‘start the clock’ themselves to take a shorter timed exam within that 24-hour window.

We start from two fixed points:

- Students are submitting work as a PDF document to the AssessmentUCL platform during the 24-hour window; and

- Final grades need to be stored in Portico, our student records system.

But between those two endpoints, there are many different workflows by which marking can take place. These are set out in UCL’s Academic Manual and encompass a range of choices, particularly in how second marking is completed. One key difference from regular coursework is that the aim is not to provide feedback to students, but to support the marking process, the communication between markers and the required record of that process. At the end of marking, departments will need to ensure that scripts are stored securely but can be accessed by relevant staff as required, in line with the requirements for paper scripts in previous years.

There is no requirement to use a particular platform or method for marking, so departments have considerable flexibility to use the processes that work best for them. We are suggesting a menu of options that departments can build on if they choose. We are also running regular training sessions, which are listed at the foot of this post.

The menu options are:

- Markers review the scripts and mark or annotate them using AssessmentUCL’s annotation and markup tools;

- Departments can download PDF copies of scripts which can be annotated using PDF annotation software on a computer or tablet device;

- Markers review the scripts on-screen using AssessmentUCL, but keep a ‘marker file’ or notes and comments on the marking process;

- Markers print the scripts and mark them, then scan them for storage or keep them for return to the department on paper.

The rest of this post covers these options in more detail. An AssessmentUCL resource centre with detailed guidance on exams will also be launched shortly, and it will evolve as the AssessmentUCL platform becomes more widely used across UCL.

Overview of central exam marking

This short (4-minute) video introduces the methods of marking exam papers in 2021. This video has captions available.

Marking online using AssessmentUCL’s annotation tools

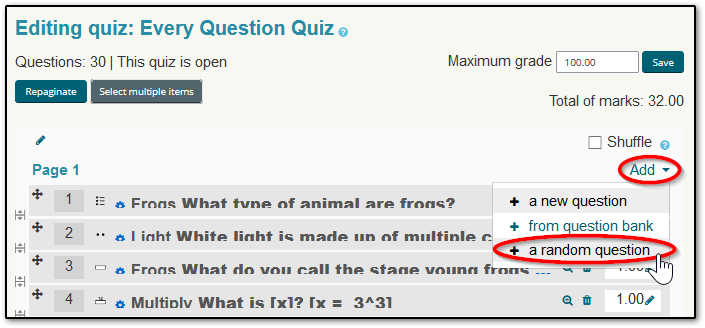

AssessmentUCL provides a web-based interface where comments can be overlaid on a student’s work. A range of second marking options is available, allowing comments to be either shared with other markers or hidden from them. The central examinations team will set up all centrally managed exams based on the papers and information submitted by departments.

The 24-minute video below walks through the marking process using the annotation and grading tools in AssessmentUCL. It also shows how module leaders can download PDFs of student papers if they wish to mark using other methods, or download marks if they are marking in AssessmentUCL. This video has captions available.

Guidance for external examiners

This video provides guidance for external examiners who are using AssessmentUCL to view papers and marks. This video has captions.

Annotation using PDF documents

Where your annotation needs are more sophisticated, or you want to ‘write’ on the paper using a graphics tablet or a tablet and pencil/stylus, this option may suit you better.

Module leads and exams liaison officers can download a ZIP file containing all the submitted work for a given exam. Unlike last year, each student’s candidate number is prefixed to the filename, and can also be included within the document itself, making it much easier to identify the correct student.

You can then mark using whichever tools you already have or prefer. This approach offers more flexibility, and we cannot advise on or support every PDF tool available or give precise instructions for every departmental workflow, but we give some examples here.

Marking on an iPad using OneDrive

Many staff have reported that an iPad with Apple Pencil, or an Android tablet with a stylus, is a very effective marking tool. You can use the free Microsoft OneDrive app, or Apple’s built-in Files app if you are using an iPad. Both can connect to your OneDrive account, which is a useful place to store your files. An example using OneDrive is shown below; the Apple Files version is very similar.

There’s further guidance from Microsoft on each individual annotation tool.

Marking on a PC or Surface Pro using Microsoft Drawboard PDF

Microsoft Drawboard PDF is a very comprehensive annotation tool, but it is only available for Windows 10 and is designed primarily for use with a Surface Pro or a desktop with a graphics tablet. Dewi Lewis from UCL Chemistry has produced a video illustrating the annotation tools available and how to mark a set of files easily. Drawboard PDF is available free of charge from Microsoft.

Marking on a PC, Mac or Linux machine using a PDF annotation program

There are, of course, plenty of third-party tools that support annotating PDF documents. Some require payment to access the annotation facilities (or to save annotated files), but two that do not are Xodo and Foxit PDF.

Things to think about with this approach:

- Your marking process: if you use double-blind marking you might need to make two copies of the files, one for each marker. If you use check marking then a single copy will suffice.

- You will need to ensure the files are stored securely and can be accessed by the relevant departmental staff in case of any query. You might share the exam submission files with key contacts such as teaching administrators or directors of teaching.

- Some PDF annotation products have a small charge, as does any stylus or pencil that staff would need. These cannot be supplied centrally, so you may need a process for staff to claim back the costs from departments.

Using a ‘marker file’

Markers access the students’ scripts in AssessmentUCL, which allows all the papers to be viewed online individually or downloaded in one go. A separate document is then kept (either one per script, or one overall) containing the marks and marker comments. If double-blind marking is being used, two such documents (or sets of documents) can be kept, one for each marker.

Printing scripts and marking on paper

Although we have moved to online submission this year, colleagues are still welcome to print scripts and mark on paper. However, there is no central service for printing completed scripts, so printing would have to be managed individually or locally by departments.

The evidence about marking online

In this video Dr Mary Richardson, Associate Professor in Educational Assessment at the IOE, gives a guide to how online marking can differ from paper-based marking and offers some tips for those new to online marking. The video has captions.

Training sessions and support

Markers can choose to mark Late Summer Assessment (LSA) exams in the same way as they marked the main round of exams. For a refresher or if you were not involved in marking centrally managed exams, please refer to the recording of one of Digital Education’s previous training sessions (UCL staff login required) and the slides used in the training session which can be downloaded.

Throughout the LSA period (16 August to 3 September), drop-ins will run on Tuesdays, Wednesdays and Thursdays, 12-1pm. The drop-in sessions are relevant to markers, moderators, the Module Lead and Exams Liaison Officers. Attendees can ask questions or see a demo of the marking options. You can find the link for these and join immediately.

You can of course contact UCL Digital Education for further help and support.
