Sometimes I hear an announcement about an update or improvement to some technology or ‘app’ (as the youngsters say) that sounds exciting and eminently worth investigating. When I start sifting through Google results to find out more, I can spend a day or two sometimes working out what’s going on. That’s OK, it’s part of my job to do this and then, if what I’ve learned is useful, to find ways to communicate it to other people.

For example, Excel now allows users to create ad hoc and custom functions using lambda(). Now, if you are a computer scientist, mathematician, philosopher or linguist, you will probably have heard of the lambda calculus, an important mathematical invention of the twentieth century that influenced all those disciplines. It provided a way to formally characterise computation as function application and abstraction (roughly, don’t quote me on this – it was a long time ago). Now, even students of computer science may sometimes encounter the calculus and end up wondering ‘OK, but what’s it for?’

Well, one way to demonsrate its practical use is by introducing the world of the lambda() function in Excel: it allows you to formally define new Excel functions. I immediately spotted some uses for this.

Descriptive statistics for sub-populations

Excel provides all the most common and useful statistical calculations as basic functions such as count(), average(), var(), stdev() and for some functions there are conditional versions countif(), averageif(),which allow for subsetting your data. So, it might be that you have two columns of data, the first is some interesting measure (temperature? height? resting bpm?) and the second some characteristic such as ethnicity or gender.

It might be that you want to know what is the average resting heart rate (for example) of the male participants in your study. You can do this using averageif(): =averageif(B1:B100, “male”, C1:C100). Assuming that the sex data are in the range B1:B100 and the resting heart rate data are in C1:100. In fact, Excel has an averageifs() to help with cases with multiple selection criteria. So that’s good for data analysts using Excel because it’s a common analytical approach.

However, this only works for averageif(), countif(), maxif(), minif() – there is no conditional var(), stdev(), skew() or kurt(). Well, for variance and standard deviation, we can construct a pivot table to get the subpopulation analysis we want and that’s great. But occasionally (probably not that often) we want to calculate the skew in resting heart rate for all male participants, and maybe even the kurtosis. And here, after that long lead in, is where the lambda() function proves it’s worth. First, lets look at how we would caculate the kurtosis in some measure as a function of gender, using a combination of built-in Excel formulas: Given a data table where column A is the categorical (eg gender) variable and B is the measure of interest:

| 1 |

A |

B |

| 2 |

1 |

56 |

| 3 |

1 |

62 |

| 4 |

1 |

48 |

| 5 |

1 |

58 |

| 6 |

1 |

58 |

| 7 |

1 |

55 |

| 8 |

1 |

42 |

| 9 |

1 |

54 |

| 10 |

1 |

47 |

| 11 |

2 |

52 |

| 12 |

2 |

59 |

| 13 |

2 |

56 |

| 14 |

2 |

45 |

| 15 |

2 |

63 |

| 16 |

2 |

52 |

| 17 |

2 |

44 |

The formula we want is kurt(if(A1:A17=1,B1:B17))

So there are three parameters, the first is the range to which we apply the second (the selection criterion value (here, 1 = male)), the third is the range where the kurt() function will be applied. I tested this formula and it works fine, it’s just a bit clunky to use compared to the built-in averageif() etc. So, I decided to reconstruct it using the lambda() function, to produce my own kurtif() function. In an empty cell, we put the lambda expression:

=LAMBDA(a,n,b, KURT(IF(a = n, b)))

with the three parameters represented (arbitrarily) by a, n and b. A moments reflection and we see the relation between the lambda expression and our previous Excel formula. From a practical point of view, the lambda expression tells us where in the calculation to plug in the values a, n and b to get our result.

When you enter this expression in a blank cell and hit return you will see the warning message

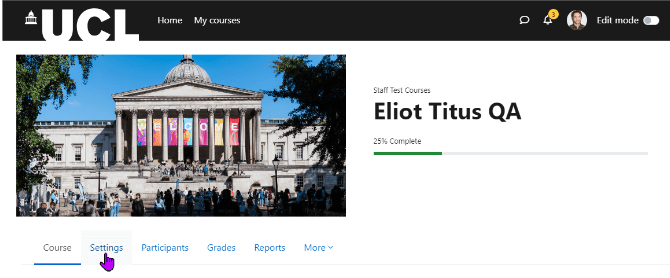

which is Excel recognising that the cell contains a lambda expression. The next step is to name the new function kurtif(). Copy the new lambda expression to the clipboard (highlight and control-c) then from the formula tab on the ribbon select and open the name manager. In the dialog that this opens press the new button; give kurtif as the name for the new function and then paste the lambda expression from the clipboard then OK and close the name manager dialog.

Now you can carry out the computation as:

=kurtif(A1:17,1,B1:B17)

which is simpler and has the advantage of looking very like averageif() and the other, similar functions.

A missing significance calculation in Excel

Excel has a very simple to use function that will calculate a correlation coefficient (R) from two arrays of data: =correl(Array1,Array2). It is a minor annoyance that this calculation doesn’t return a p value for R, allowing us to test the null hypothesis that the true correlation between Array1 and Array2 is zero. So, let’s assume that we have calculated r for two columns of data each and we know that n is just the count of one array, and the result is in cell H1 (for no particular reason).

Now, it’s a fairly simple trick to calculate a t value based on the correlation coefficient. The formula is

and since we have just calculated r, it is simple to calculate t with the formula (and put the result in H3):

=H1*(sqrt(count(Array1)-1))/sqrt(1*-H1^2)

The last (and for now separate) step is to find the significance for this t score with the two-tailed t distribution function with n-2 degrees of freedom

=t.dist.2t(H3, count(Array1)-2)

And there we have it. So, it would be useful to have a little helper function we could apply simply, to calculate t from r. Here is the lambda function code:

=LAMBDA(r,n,(r*SQRT(n-2))/SQRT(1-(r^2)))

and we can name it and use it as before. I named mine ‘convertRtoT’ and used it like this with the correlation coefficient in H1 and n = 30:

convertRtoT(H1,30)

So now we know what the lambda() function is for. The example is perhaps a little obscure, but the principle – that half the struggle with digital knowledge is knowing what it’s for – holds for far more mundane cases: I’ve been learning Power BI, and while there are simple answers – power BI is for visualisation – it’s only after I’ve been through a few hours of tutorials that I’m really understanding what it’s about.

Homework

If you want to check out the example calculations, please go ahead. I checked them all in Excel (and for the t to r conversion, I checked my result against R), but it’s always possible to make a bluder when copying and pasting. But finding and fixing errors is good practice. If you want more practice, then I would suggest creating a function skewif() that works like kurtif(), taking two arrays ( a score and a selection criterion) a gives the skewness for the cases selected by the criterion. Good luck.

(this blog post was supported by the music of Iannis Xenakis, “Six Chansons No 1, ca sent le musc”)

Close

Close