Moodle-SITS Marks Transfer Pilot Update

By Kerry, on 9 February 2024

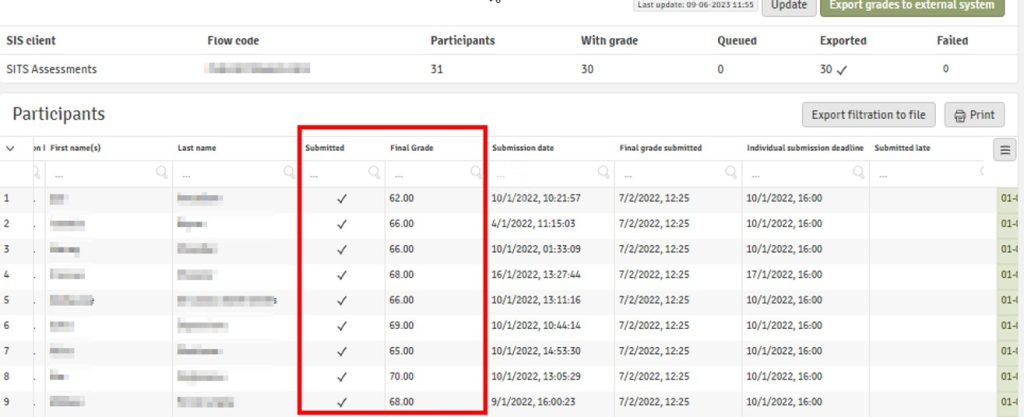

As some of you may be aware, a new Moodle integration is due to be released in the spring. Designed and developed by the DLE Team, it aims to improve the process for transferring marks from Moodle to Portico. It is called the Moodle-SITS Marks Transfer Integration, and we are currently trialling it with around 40 course administrators across the institution.

The pilot kicked off on 8 January and will run until 29 February 2024. Its purpose is to test the Moodle-SITS Marks Transfer Integration using the newly designed Marks Transfer Wizard and its marks transfer functionality. The wizard was developed following the Phase 1 Pilot, which took place with a very small group of course administrators at the end of last year. It provides a more streamlined experience for end users by putting the core assessment component information at the centre of the tool, where it can then be mapped to a selection of Moodle assessments.

Pilot Phase 2 is the last pilot phase before an initial MVP (Minimum Viable Product) release into UCL Moodle Production in late March 2024. Currently, users can take advantage of the integration if the following criteria are met:

- They have used the Portico enrolment block to create a mapping with a Module Delivery on their Moodle course.

- Either of the following assessment scenarios is true:

  - Only one Moodle assessment activity is being linked to one assessment component in SITS.

  - Only one Moodle assessment activity is being linked to multiple assessment components in SITS.

- An assessment component exists in SITS to map against.

- The Moodle assessment marks are numerical, in the range 0-100.

- The assessment component in SITS is compatible with SITS Marking Schemes and SITS Assessment Types.

- For exam assessments, the SITS assessment component has the exam room code EXAMMDLE.

The Marks Transfer Wizard currently supports the transfer of marks from one of the following summative assessment activities in Moodle:

- Moodle Assignment

- Moodle Quiz

- Turnitin Assignment (NOT multipart)
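To make the criteria and supported activity types above easier to check at a glance, here is a minimal Python sketch of them as a pre-flight checklist. It is an illustration only and is not how the Marks Transfer Wizard is implemented: the `Candidate` record, its field names, the activity labels and the `unmet_criteria` function are all hypothetical, and reading the two assessment scenarios as "exactly one Moodle activity feeds each SITS component" is our assumption.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical labels for the three supported activity types
# (not Moodle's internal module names).
SUPPORTED_ACTIVITIES = {"assignment", "quiz", "turnitin"}
EXAM_ROOM_CODE = "EXAMMDLE"  # exam room code named in the criteria


@dataclass
class Candidate:
    """Hypothetical description of one proposed marks transfer mapping."""
    has_module_delivery_mapping: bool   # set up via the Portico enrolment block
    activity_type: str                  # one of SUPPORTED_ACTIVITIES
    activities_per_component: int       # assumption: must be exactly 1
    sits_component_exists: bool
    marks_numeric_0_100: bool
    sits_scheme_and_type_compatible: bool
    turnitin_multipart: bool = False
    is_exam: bool = False
    exam_room_code: Optional[str] = None


def unmet_criteria(c: Candidate) -> List[str]:
    """Return a plain-language list of criteria the candidate does not meet."""
    problems = []
    if not c.has_module_delivery_mapping:
        problems.append("no Module Delivery mapping on the Moodle course")
    if c.activity_type not in SUPPORTED_ACTIVITIES:
        problems.append("unsupported Moodle activity type")
    if c.activity_type == "turnitin" and c.turnitin_multipart:
        problems.append("multipart Turnitin assignments are not supported")
    if c.activities_per_component != 1:
        problems.append("only one Moodle activity may be linked per component")
    if not c.sits_component_exists:
        problems.append("no assessment component in SITS to map against")
    if not c.marks_numeric_0_100:
        problems.append("marks are not numerical in the range 0-100")
    if not c.sits_scheme_and_type_compatible:
        problems.append("incompatible SITS marking scheme or assessment type")
    if c.is_exam and c.exam_room_code != EXAM_ROOM_CODE:
        problems.append("exam component does not use exam room code EXAMMDLE")
    return problems


# Example: a quiz mapped to an existing, compatible SITS component.
quiz = Candidate(True, "quiz", 1, True, True, True)
print(unmet_criteria(quiz) or "all criteria met")
```

An empty list means every criterion in the sketch is satisfied; otherwise each entry names one thing to fix before attempting a transfer.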

We intend to collect feedback on the new Marks Transfer Wizard from pilot participants, both to improve the interface and workflow for a general UCL-wide release in late March 2024 and to prioritise the next improvements and developments following the launch.

So far, informal feedback has been very positive: users say the assessment wizard works well and will save them a lot of time. The pilot has also been useful for exploring where issues might arise with Portico records or Moodle course administration, as well as for gathering frequently asked questions and advice on best practice, which will feed into our guidance for the wider rollout.
