Randomising Questions and Variables with Moodle Quiz

By Eliot Hoving, on 8 December 2020

One of the strengths of Moodle Quizzes is the ability to randomise questions. This feature can help deter student collusion.

There are several ways to randomise questions in a Moodle Quiz, which can be combined or used separately. Simple examples are provided here, but more complex questions and variables can be created.

Randomising the Question response options

It’s possible to shuffle the response options within many question types, including Multiple Choice and Matching questions. When setting up a Quiz, look under Question behaviour and set Shuffle within questions to Yes.
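To illustrate roughly what shuffling within a question involves, the Python sketch below reorders one multiple-choice question's options for a single student and keeps track of where the correct option ends up. The function `shuffle_options` and the placeholder options are hypothetical; this is not Moodle's own code, only a sketch of the idea.

```python
import random

def shuffle_options(options, correct_index, rng):
    """Return the options in a random order plus the new index of the correct option."""
    order = list(range(len(options)))
    rng.shuffle(order)  # random permutation of the original positions
    shuffled = [options[i] for i in order]
    new_correct = order.index(correct_index)  # where the correct option moved to
    return shuffled, new_correct

# Hypothetical question with four options; the first one is correct.
options = ["Option A", "Option B", "Option C", "Option D"]
rng = random.Random(42)  # seeded only so the sketch is repeatable
shuffled, correct = shuffle_options(options, correct_index=0, rng=rng)
print(shuffled, "- correct answer is now option", correct + 1)
```

Tracking the new position is what lets a shuffled question still be marked correctly.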

Randomising the order of Questions

You can also randomise the order of questions in a Quiz. Click Edit Quiz Questions in your Quiz, then tick the Shuffle box at the top of the page. Questions will now appear in a random order for each student.
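The sketch below gives each student an independent random order for a hypothetical 10-question quiz and counts how many orders are possible (10! = 3,628,800). Seeding by a hypothetical student ID is only there to make the sketch repeatable; it is not how Moodle chooses the order.

```python
import math
import random

questions = [f"Q{i}" for i in range(1, 11)]  # a hypothetical 10-question quiz

def order_for_student(student_id):
    # One independent random permutation per student (seeded here only for repeatability)
    rng = random.Random(student_id)
    order = questions[:]
    rng.shuffle(order)
    return order

print(order_for_student(1))
print(order_for_student(2))
# Number of possible orders for 10 questions: 10!
print(math.factorial(len(questions)))  # 3628800
```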

Randomising the Questions

It’s possible to add random questions from pre-defined question Categories. Think of Categories as containers of Quiz questions. They can be based on topic area, e.g. ‘Dosage’, ‘Pharmacokinetics’, ‘Pharmacology’, ‘Patient Consultation’, or they can be based on questions for a specific assessment, e.g. ‘Exam questions container 1’, ‘Exam questions container 2’, ‘Exam questions container 3’.

The first step is to create your Categories.

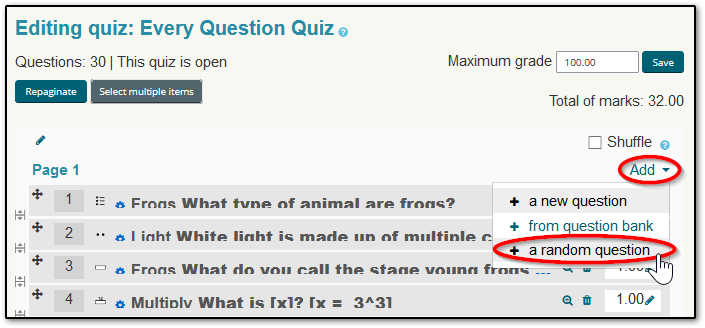

Then, when adding questions to your Quiz, select Add > a random question and choose the Category. You can also choose how many random questions to add from the Category.

For example, if you have a 10-question quiz and want each student to receive one of 3 possible questions for each question, you would need 10 Categories, each holding 3 questions, e.g. ‘Exam Q1 container’, ‘Exam Q2 container’ … ‘Exam Q10 container’, and you would add one random question from each Category.
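As a rough model of this setup, the sketch below draws one question from each of 10 hypothetical Categories holding 3 questions each, and counts how many different quizzes a student could receive: 3 choices for each of 10 questions gives 3^10 = 59,049. The category and question names are placeholders, and the code only mimics the selection, not Moodle's implementation.

```python
import random

# Hypothetical question bank: 10 categories ("Exam Q1 container" ... "Exam Q10 container"),
# each holding 3 questions.
categories = {
    f"Exam Q{n} container": [f"Q{n} version {v}" for v in range(1, 4)]
    for n in range(1, 11)
}

def build_quiz(rng):
    # One random question drawn from each category, in category order.
    return [rng.choice(qs) for qs in categories.values()]

quiz = build_quiz(random.Random())
print(quiz)

# Distinct quizzes a student could receive: 3 choices for each of the 10 questions.
combinations = 1
for qs in categories.values():
    combinations *= len(qs)
print(combinations)  # 59049
```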

Alternatively, if you want a quiz with 10 questions from ‘Pharmacokinetics’ and 10 from ‘Pharmacology’, you could create the two Categories with their questions, then go to your Quiz, add a random question, select the ‘Pharmacokinetics’ Category, and choose 10 questions. Repeat for ‘Pharmacology’. You now have a 20-question quiz made up of 50% Pharmacokinetics and 50% Pharmacology questions.
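The sketch below models drawing 10 questions without replacement from each of two Categories, so no student sees the same question twice. The bank sizes (25 and 30 questions) are made up for illustration. Note that Python's `random.sample` raises `ValueError` if a Category holds fewer questions than requested, which is a reminder that each Category needs at least 10 questions here.

```python
import random

# Hypothetical banks; the sizes (25 and 30) are made up for illustration.
pharmacokinetics = [f"PK question {i}" for i in range(1, 26)]
pharmacology = [f"Pharmacology question {i}" for i in range(1, 31)]

def random_questions(category, count, rng):
    # Sample without replacement, so a question is never repeated within one quiz.
    return rng.sample(category, count)

rng = random.Random()
quiz = random_questions(pharmacokinetics, 10, rng) + random_questions(pharmacology, 10, rng)
print(len(quiz))  # 20
```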

After saving your random questions, you can add further random questions, or add regular questions that will appear for all students. Simply add a question from the question bank as normal.

Be aware that randomising questions will reduce the reliability of your Moodle Quiz statistics. For example, the discrimination index will be calculated for the Quiz question overall, e.g. Q2, not for each variant that may have been randomly selected, i.e. each question in the Exam Q2 container. Each variant will also have fewer attempts than if a single question were given to all students, so any analytics based on those fewer attempts will be less accurate.
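To see why each variant ends up with fewer attempts, the sketch below simulates a hypothetical cohort of 150 students each receiving one of 3 variants of Q2 at random. Each variant collects only roughly a third of the attempts (about 50), and the exact split varies from run to run. The cohort size is an assumption for illustration.

```python
import random
from collections import Counter

students = 150  # hypothetical cohort size
variants = ["Q2 version 1", "Q2 version 2", "Q2 version 3"]

rng = random.Random(1)  # seeded only for repeatability
served = Counter(rng.choice(variants) for _ in range(students))

for variant in variants:
    # Each variant gets roughly students / len(variants) attempts,
    # so per-variant statistics rest on about a third of the data.
    print(variant, served[variant])
print(sum(served.values()))  # 150
```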

Randomising variables within Questions

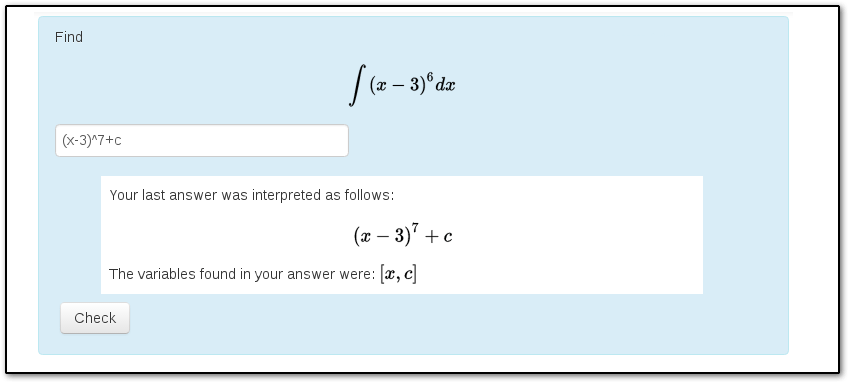

In addition to randomising questions, certain question types can have randomised variables within them.

The STACK question type supports complex mathematical questions, which can include random variables. In STACK, rand(n) returns a random integer from 0 to n-1. For example, you could set two variables, a and b, as follows:

a = rand(7) (where rand(7) takes a random value from the list [0,1,2,3,4,5,6]).

b = rand(3) (where rand(3) takes a random value from the list [0,1,2]).

Variables can then be used within questions, so students could be asked to integrate $a \times x^b$. Thanks to the random variables, this generates 21 different questions (7 values of a times 3 values of b), e.g. integrate $0 \times x^0$, $0 \times x$, $0 \times x^2$, $x^0$, $x$, $x^2$, $2x^0$, $2x$, $2x^2$ … $5x^0$, $5x$, $5x^2$, $6x^0$, $6x$, $6x^2$.
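The count of 21 follows from pairing every value of a (0 to 6) with every value of b (0 to 2). The Python sketch below enumerates those pairs; it is ordinary Python written to check the arithmetic, not STACK/Maxima syntax.

```python
from itertools import product

a_values = range(0, 7)  # a is one of 0, 1, ..., 6
b_values = range(0, 3)  # b is one of 0, 1, 2

# Every question a student could be asked: integrate a * x^b
variants = [f"{a}*x^{b}" for a, b in product(a_values, b_values)]
print(len(variants))  # 21
print(variants[:3])   # ['0*x^0', '0*x^1', '0*x^2']
```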

Random variants can be generated, tested, and excluded if they are inappropriate. In the above case, I might exclude a = 0, as the expression to integrate would then evaluate to 0, whereas I want students to integrate a non-zero algebraic expression.
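As a rough illustration of testing variants, the sketch below drops the a = 0 cases (leaving 18 of the 21) and computes the expected answer for each remaining variant using the power rule, $\int a x^b \, dx = \frac{a}{b+1} x^{b+1} + C$. The helper `antiderivative` is hypothetical; STACK has its own tools for generating and checking deployed variants.

```python
from fractions import Fraction
from itertools import product

def antiderivative(a, b):
    # Power rule: the integral of a*x^b is a/(b+1) * x^(b+1) (+ C), valid for b >= 0.
    # Returns the coefficient and the new power.
    return Fraction(a, b + 1), b + 1

# Keep only variants with a != 0, so the integrand is never identically zero
kept = [(a, b) for a, b in product(range(7), range(3)) if a != 0]
print(len(kept))  # 18

for a, b in kept[:3]:
    coeff, power = antiderivative(a, b)
    print(f"integrate {a}*x^{b}  ->  ({coeff})*x^{power} + C")
```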

The Calculated question type also supports randomised variables and is suited to basic calculation questions in maths and science assessment. Calculated questions can be free entry or multiple choice. For example, you could ask students to calculate the area of a rectangle. The width and height would be set as wild card values; let’s call them {w} for width and {h} for height.

The answer is always width × height, i.e. {w} × {h}, regardless of the values of {w} and {h}. Moodle calls this the answer formula, and it is entered as {w} * {h}.
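To show how an answer formula with wild cards can be evaluated for any set of values, the sketch below substitutes each {name} with its number and evaluates the result. The `evaluate` helper is hypothetical and uses Python's `eval` with builtins disabled, which is only acceptable here because the formula is trusted, teacher-written text; Moodle uses its own formula evaluator.

```python
import re

answer_formula = "{w} * {h}"  # the area formula, written with {wild cards}

def evaluate(formula, values):
    # Replace each {name} with its numeric value, then evaluate the arithmetic.
    expr = re.sub(r"\{(\w+)\}", lambda m: repr(values[m.group(1)]), formula)
    # No builtins available: acceptable only for a trusted formula in a sketch.
    return eval(expr, {"__builtins__": {}}, {})

print(evaluate(answer_formula, {"w": 6.2, "h": 19.3}))
print(evaluate(answer_formula, {"w": 1, "h": 14}))  # 14
```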

The tutor then sets the possible values of {w} and {h} by creating a dataset for Moodle to randomly select from. To create your dataset, you first define the range for each wild card, e.g. {w} takes a value between 1 and 10, and {h} takes a value between 10 and 20. You can then ask Moodle to generate sets of values, e.g. 10, 50, or 100 possible combinations of {w} and {h}, based on the conditions you define. For example, given the conditions above, I could generate the following 3 sets:
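The sketch below imitates generating a dataset: each set draws {w} uniformly from 1 to 10 and {h} uniformly from 10 to 20, rounded to one decimal place. The uniform distribution and the one-decimal rounding are assumptions loosely modelled on the range and decimal-places settings described above, and `generate_sets` is a hypothetical helper, so the values printed will differ from the three sets listed here.

```python
import random

def generate_sets(n, w_range=(1, 10), h_range=(10, 20), decimals=1, seed=None):
    """Generate n hypothetical {w}, {h} combinations, each drawn uniformly from its range."""
    rng = random.Random(seed)
    return [
        {
            "w": round(rng.uniform(*w_range), decimals),
            "h": round(rng.uniform(*h_range), decimals),
        }
        for _ in range(n)
    ]

for i, values in enumerate(generate_sets(3, seed=7), start=1):
    print(f"Set {i}: {{w}} = {values['w']}, {{h}} = {values['h']}")
```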

Set 1: {w} = 1, {h} = 14

Set 2: {w} = 6.2, {h} = 19.3

Set 3: {w} = 9.1, {h} = 11

Creating a dataset can be somewhat confusing, so make sure you leave enough time to read the Calculated question type documentation and test it out. Once complete, Moodle can provide students with potentially hundreds of random combinations of {w} and {h} from your dataset. Using the answer formula you provide, Moodle can evaluate each student’s response and grade it automatically, whatever random values they are given.
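Automatic grading of a numerical answer usually allows some tolerance for rounding. The sketch below accepts a response within a relative tolerance of {w} × {h}; Moodle lets you set a tolerance on each answer, but the 1% relative tolerance and the `is_correct` helper here are assumptions for illustration.

```python
def is_correct(response, values, tolerance=0.01):
    """Accept the response if it is within a relative tolerance (assumed 1%) of w * h."""
    expected = values["w"] * values["h"]
    return abs(response - expected) <= tolerance * abs(expected)

values = {"w": 6.2, "h": 19.3}     # Set 2 from the example above
print(is_correct(119.66, values))  # True
print(is_correct(119.0, values))   # True (within 1%)
print(is_correct(115.0, values))   # False
```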

Try a Randomised Quiz

To learn more, take an example randomised quiz on the Marvellous Moodle Examples course.

Speak to a Learning Technologist for further support

Contact Digital Education at digi-ed@ucl.ac.uk for advice and further support.
