How do headteachers in England use test data, and does this differ from other countries?

By Blog Editor, IOE Digital, on 15 October 2019

In England we are fortunate to have a lot of data available about school pupils and how they are achieving academically at school.

Organisations such as FFT aim to make this data available and easily digestible to schools through services such as Aspire so that it can be used to inform the decisions of teachers and school leaders.

But how does the way schools in England make use of data compare to schools in other countries?

A lot of use for relatively little input

As part of the PISA 2015 questionnaire, headteachers were asked how frequently they used data from standardised tests to set their school’s educational goals. Headteachers were also asked how many times a year pupils were tested.

The chart below shows the percentage of heads who said they used data from standardised tests to set goals at least once a week plotted against the percentage who said pupils were tested three or more times a year.
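For readers who want to see how two percentages like these could be derived, here is a minimal sketch. The response records, the country labels "A" and "B" and the `chart_points` helper are all invented for illustration. They are not PISA data, and any survey weighting that a real analysis would apply is ignored.

```python
from collections import defaultdict

# Hypothetical headteacher responses (not PISA data):
# (country, uses test data weekly to set goals, pupils tested 3+ times a year)
responses = [
    ("A", True, False),
    ("A", True, True),
    ("A", False, False),
    ("A", False, False),
    ("B", False, True),
    ("B", False, True),
    ("B", True, False),
    ("B", False, False),
]


def chart_points(rows):
    """Return {country: (x, y)} for a scatter chart.

    x = % of heads reporting pupils are tested three or more times a year
    y = % of heads using test data at least weekly to set goals
    """
    counts = defaultdict(lambda: [0, 0, 0])  # [heads, weekly users, frequent testers]
    for country, weekly, frequent in rows:
        tally = counts[country]
        tally[0] += 1
        tally[1] += weekly    # True counts as 1
        tally[2] += frequent
    return {
        country: (100 * frequent / heads, 100 * weekly / heads)
        for country, (heads, weekly, frequent) in counts.items()
    }


print(chart_points(responses))
```

A country that sits high on the vertical axis but low on the horizontal axis, like the made-up country "A" here, uses test data often while testing comparatively rarely.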

There are two key points to take from this chart. First, data is used a lot by school leaders in England and Wales to set goals.

In England, almost 40% of heads say they do this every week, compared with less than 10% of school leaders in most other OECD countries. Consequently, England and Wales are clear outliers along the vertical axis of the chart.

Second, there is little evidence that secondary school pupils are tested more in England than in other countries. Only around 10% of headteachers in England reported that Year 11 pupils were assessed using standardised tests three times a year or more, which is around the OECD average (and well below some other countries).

Taken together, these two points suggest that school leaders get a lot of use out of the educational data that is collected, without subjecting pupils to endless testing throughout the academic year.

What do schools use educational data for?

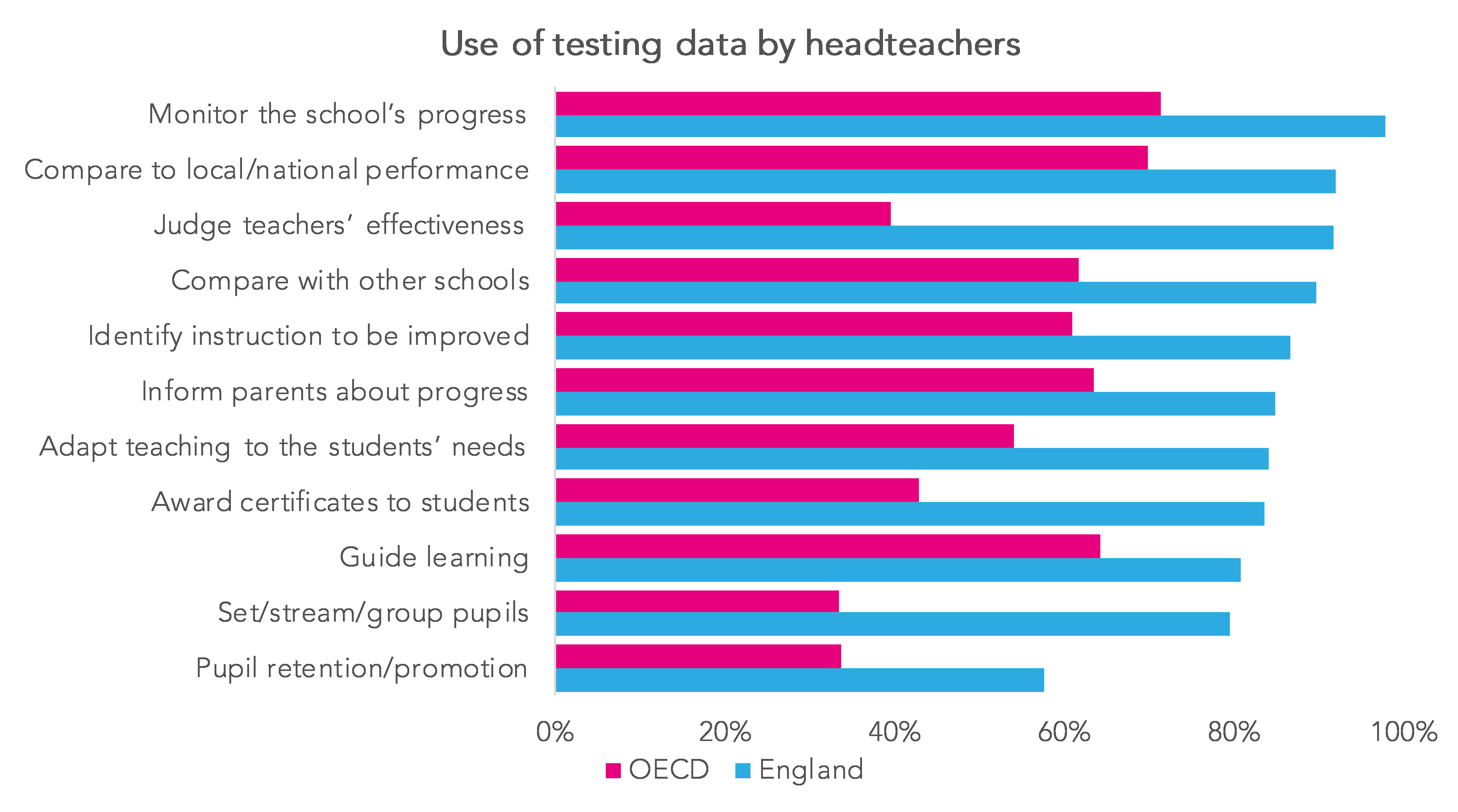

Headteachers were also asked a separate series of questions about how examination (or other standardised testing) data is actually used. These results are presented in the chart below.

This graph again illustrates the key point that schools in England make extensive use of the education data they hold. For each category England is above the OECD average. There are, however, a few particular points of note.

The first is that educational data is used to make important decisions about how instruction is provided within the school. For instance, 87% of schools in England use educational data to identify how instruction could be improved (compared to the OECD average of 61%), 84% to adapt teaching to pupils’ needs (OECD average, 54%) and 81% to guide pupils’ learning (OECD average, 64%).
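The size of these gaps is easier to compare when written out as percentage-point differences. The short sketch below uses only the six figures quoted in the paragraph above. The `gaps` helper and the dictionary layout are illustrative choices, not part of the original analysis.

```python
# (England %, OECD average %) as quoted in the text
uses = {
    "identify how instruction could be improved": (87, 61),
    "adapt teaching to pupils' needs": (84, 54),
    "guide pupils' learning": (81, 64),
}


def gaps(figures):
    """Percentage-point gap between England and the OECD average for each use."""
    return {use: england - oecd for use, (england, oecd) in figures.items()}


for use, gap in gaps(uses).items():
    print(f"{use}: England is {gap} percentage points above the OECD average")
```

On these figures the gap is widest for adapting teaching to pupils' needs (30 points) and narrowest for guiding pupils' learning (17 points).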

The second area is benchmarking schools’ performance. Over 90% of schools in England use educational data to monitor school progress, and compare their performance to other schools and to local/national benchmarks. Again, this is well above the level observed in most other developed countries.

Finally, data also plays a key role in helping shape the organisation of schools and providing feedback to key stakeholders such as teachers and parents. This includes the setting or streaming of pupils, informing parents about pupil progress, and making judgements about teacher effectiveness.

Lessons for other countries?

Schools in England make extensive use of education data – much more so than other education systems across the world. It plays a key role in many important decisions, particularly with respect to how instruction could be improved and the setting of key educational goals. At the same time, the amount of testing conducted does not seem to be excessive, at least compared to some other education systems across the world.

Education in England therefore seems to be very data-informed. This is likely due to how easy it is for schools to access the information they are provided with. Other countries may therefore look to the experience of England if they want to encourage schools to make more data-informed decisions.

Other posts in this series can be found here, here, here and here.

8 Responses to “How do headteachers in England use test data, and does this differ from other countries?”

- 1

But using data a lot only makes sense if that data is soundly based

That obtained from high-stakes statutory testing is not, compared with low-stakes testing (e.g. CATs) and formative assessment.