Understanding and Navigating the Risks of AI – By Reuben Adams

By sharon.betts, on 19 October 2023

It is undeniable at this point that AI is going to radically shape our future. After decades of effort, the field has finally developed techniques that can be used to create systems robust enough to survive the rough and tumble of the real world. As academics we are often driven by curiosity, yet rather quickly the curiosities we are studying and creating have the potential for tremendous real-world impact.

It is becoming ever more important to keep an eye on the consequences of our research, and to try to anticipate potential risks.

This has been the purpose of our AI discussion series that I have organised for the members of the AI Centre, especially for those on our Foundational AI CDT.

I kicked off the discussion series with a talk outlining the ongoing debate over whether there is an existential risk from AI “going rogue,” as Yoshua Bengio has put it. By this I mean a risk of humanity as a whole losing control over powerful AI systems. While this sounds like science fiction at first blush, it is fair to say that this debate is far from settled in the AI research community. There are very strong feelings on both sides, and if we are to cooperate as a community in mitigating risks from AI, it is urgent that we form a consensus on what these risks are. By presenting the arguments from both sides in a neutral way, I hope I have done a small amount to help those on both ends of the spectrum understand each other. You can watch my talk here: https://www.youtube.com/watch?v=PI9OXHPyN8M

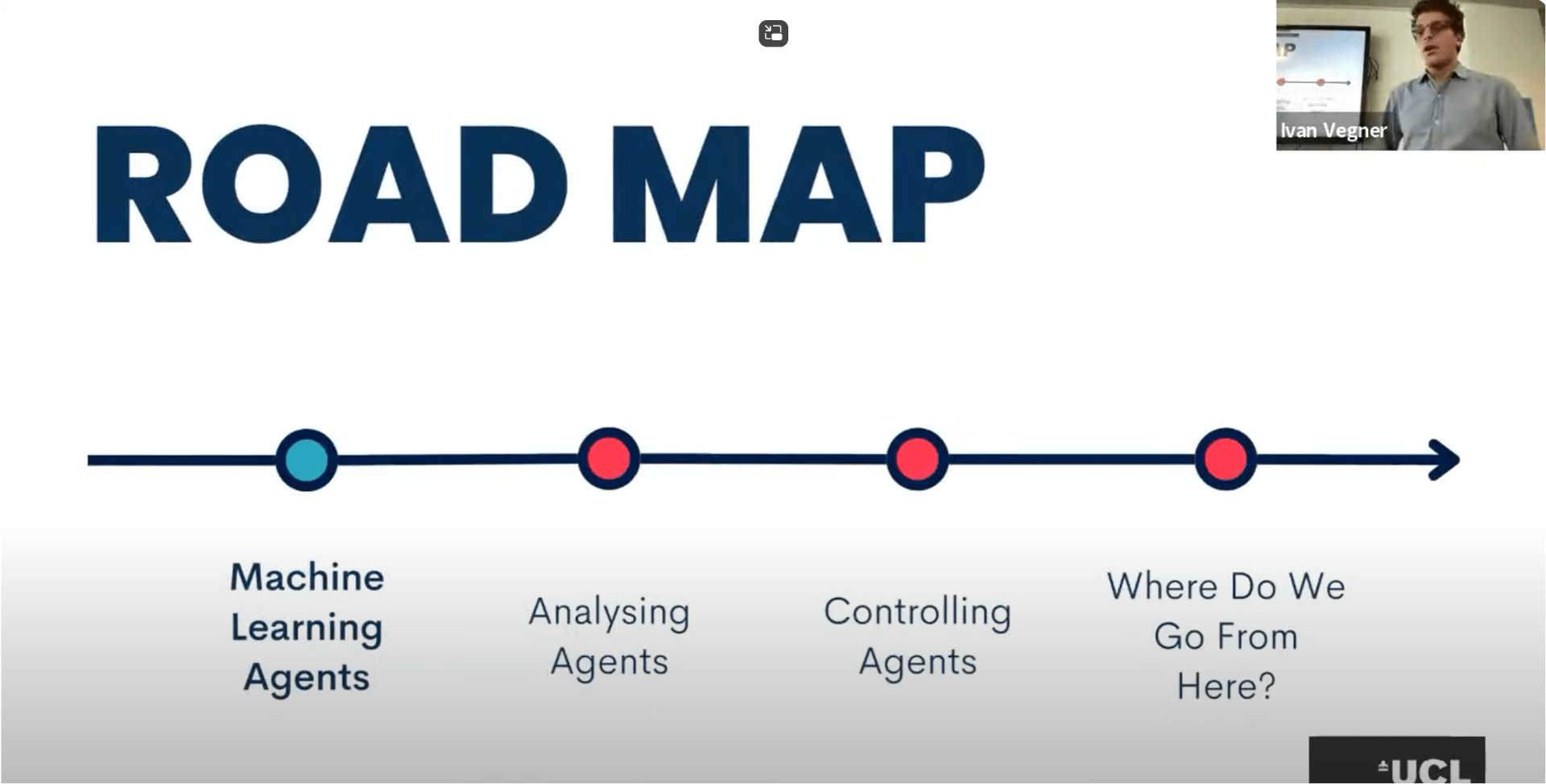

Ivan Vegner, PhD student in NLP at the University of Edinburgh, was kind enough to travel down for our second talk, on properties of agents in general, both biological and artificial. He argued that sufficiently agentic AI systems, if created, would pose serious risks to humanity, because they may pursue sub-goals such as seeking power and influence or increasing their resistance to being switched off. After all, almost any goal is easier to pursue if you have power and cannot be switched off! Stuart Russell pithily puts this as “You can’t fetch the coffee if you’re dead.” Ivan is an incredibly lucid speaker. You can see his talk, “Human-like in Every way?”, here: https://www.youtube.com/watch?v=LGeOMA25Xvc

For some, a crux in this existential risk question might be whether AI systems will think like us, or in some alien way. Perhaps we can more easily keep AI systems under control if we can create them in our own image? Or could this backfire, leaving us with systems that have the understanding to deceive or manipulate? Professors Chris Watkins and Nello Cristianini dug into this question for us by debating the motion “We can expect machines to eventually think in a human-like way” (Chris for, Nello against). There were many, many questions afterwards, and Chris and Nello very kindly stayed around to continue the conversation. Watch the debate here: https://www.youtube.com/watch?v=zWCUHmIdWhE

Separate from all of this is the question of misuse. Many technologies are dual-use, but their downsides can be successfully limited through regulation. With AI it is different: the scale can be enormous and rapidly increased (often the bottleneck is simply buying or renting more GPUs), there is a culture of immediately open-sourcing software so that anyone can use it, and AI models often require very little expertise to run or adapt to new use-cases. Professor Mirco Musolesi outlined a number of risks he perceives from using AI systems to autonomously make decisions in economics, geo-politics, and warfare. His talk was incredibly thought-provoking. You can see it here: https://youtu.be/QH9eYPglgt8

This series has helped foster an ongoing conversation in the AI Centre on the risks of AI and how we can potentially steer around them. Suffice it to say, it is a minefield.

We should certainly not forget the incredible potential of AI to have a positive impact on society, from automated and personalised medicine, to the acceleration of scientific and technological advancements aimed at mitigating climate change. But there is no shortage of perceived risks, and currently a disconcerting lack of technical and political strategies to deal with them. Many of us at the AI Centre are deeply worried about where we are going. Many of us are optimists. We need to keep talking and increase our common ground.

We’re racing into the future. Let’s hope we get what AI has been promising society for decades. Let’s try and steer ourselves along the way.

Reuben Adams is a final year PhD student in the UKRI CDT in Foundational AI.
