Simultaneous Localisation and Mapping using sensors

By Sharon C Betts, on 9 December 2021

By Jingwen Wang – PhD Candidate Cohort 1

Simultaneous Localisation and Mapping (SLAM) is the process of reconstructing the surrounding environment using a sensor (camera, LiDAR, radar, etc.) while estimating the ego-motion of that sensor at the same time. It is widely used in applications such as augmented reality (AR), autonomous driving and robot navigation.

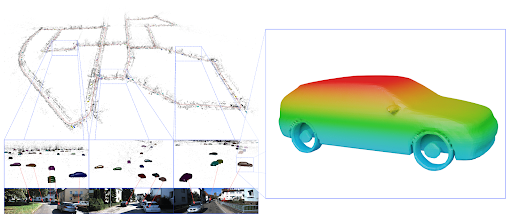

Traditional SLAM algorithms can build very high-quality geometric maps of room-scale and street-scale environments, with accurate camera trajectory estimation (less than 1% drift error). However, a purely geometric map is not enough for many applications. To enable more advanced interaction, we need semantic-level and object-level understanding of the scene. That’s why we want to build a SLAM system that can build a map of 3D objects.

Examples of dense (left) and sparse (right) maps reconstructed by ElasticFusion and stereo-DSO

Prior art in object-level SLAM has several limitations. Existing methods either (1) require a pre-scanned CAD model database, and thus cannot generalize to previously unseen objects, (2) perform online dense surface reconstruction, which yields incomplete, partial reconstructions, or (3) model objects using simple geometric shapes and sacrifice the level of detail. So the question is: can we handle previously unseen objects, reconstruct them completely and preserve fine detail, all at the same time?

Issues with prior art

In DSP-SLAM, we solve this problem by leveraging a shape prior pre-trained on a large dataset of known shapes within a category, and by formulating object reconstruction as an iterative optimization problem: given an initial coarse estimate of the shape code and object pose, we iteratively refine the shape and pose so that they best fit our current observation.

We solve the optimization using the Gauss-Newton method with analytical Jacobians to speed up the process, so that it can be extended to a full object SLAM system. We take advantage of multi-view observations to iteratively refine the object poses and maintain a globally consistent joint map of objects and points.
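To make the idea of Gauss-Newton refinement with analytical Jacobians concrete, here is a minimal toy sketch. It is not the DSP-SLAM implementation: it assumes a 2D circle in which the radius stands in for the shape code and the centre stands in for the object pose, and the residual is the signed distance from each observed surface point to the circle. All function names (`residuals_and_jacobian`, `gauss_newton`, `make_observations`) are hypothetical and chosen only for illustration.

```python
import numpy as np


def residuals_and_jacobian(params, points):
    """Signed distance of each point to a circle, with its analytical Jacobian.

    params = [cx, cy, radius] (toy "pose" and "shape"); points has shape (N, 2).
    """
    centre, radius = params[:2], params[2]
    diff = points - centre                   # vectors from centre to each point
    dist = np.linalg.norm(diff, axis=1)      # distance of each point to the centre
    residuals = dist - radius                # zero when a point lies on the circle

    jac = np.empty((len(points), 3))
    jac[:, :2] = -diff / dist[:, None]       # derivative w.r.t. the centre
    jac[:, 2] = -1.0                         # derivative w.r.t. the radius
    return residuals, jac


def gauss_newton(params0, points, iterations=20, tol=1e-10):
    """Refine a coarse initial estimate by repeatedly solving the normal equations."""
    params = np.asarray(params0, dtype=float).copy()
    for _ in range(iterations):
        r, J = residuals_and_jacobian(params, points)
        # Gauss-Newton step: solve (J^T J) delta = -J^T r
        delta = np.linalg.solve(J.T @ J, -J.T @ r)
        params += delta
        if np.linalg.norm(delta) < tol:      # stop once the update is negligible
            break
    return params


def make_observations(centre=(2.0, -1.0), radius=1.5, n=50):
    """Noise-free points sampled on a circle, standing in for sensor observations."""
    angles = np.linspace(0.0, 2.0 * np.pi, n, endpoint=False)
    return np.column_stack([
        centre[0] + radius * np.cos(angles),
        centre[1] + radius * np.sin(angles),
    ])


if __name__ == "__main__":
    observed = make_observations()
    coarse_guess = [1.8, -0.8, 1.2]          # rough initial centre and radius
    print(gauss_newton(coarse_guess, observed))
```

Writing the Jacobian in closed form, rather than approximating it by finite differences, avoids extra residual evaluations on every iteration, which is the kind of saving that matters when the refinement has to run inside a full SLAM loop. In a real system the residuals, parameterisation and any damping or robust weighting would differ from this toy setup.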

DSP-SLAM teaser

project page: https://jingwenwang95.github.io/dsp-slam/
