End of term social

By sharon.betts, on 14 December 2023

It is hard to believe that we are already reaching the end of another term at UCL. Since October we have welcomed 13 new students, had PhD submissions, congratulated new Drs, and seen a significant number of students have their work accepted at some of the most prestigious conferences in artificial intelligence and machine learning!

Before the CDT breaks up for the year, we felt it was only fitting to make our last student catch-up session one that taxed their minds and put their cohort collaboration skills to the test: an Escape Room journey!

We had 18 students divided into 4 teams, racing to solve puzzles galore and escape in good time to eat mince pies and share their recent work and future plans.

We wish all students, staff and families happy holidays and the best for a successful 2024.

Demis Hassabis talk at UCL

By sharon.betts, on 8 December 2023

On Wednesday 29th November, UCL Events hosted Demis Hassabis to give the UCL Prize Lecture 2023 on his work at Google DeepMind, a company that he founded after completing his PhD at UCL.

Demis’ talk covered his journey through academia and his interest in machine learning and artificial intelligence, which all started with a childhood love of games. Given that he started playing chess as young as 3, it is little wonder that this incredibly insightful and intelligent individual went on to work with algorithms and formats that were fun, functional and groundbreaking. Demis’ interest in neuroscience and computational analysis was the perfect groundwork from which to create world-leading machine learning tools. From AlphaGo to protein folding and beyond, Demis is a pioneer and revolutionary wrapped up in an extremely humble and engaging human being.

Our CDT is privileged to have a number of students funded by Google DeepMind, and these scholars were invited to attend a VIP meet and greet with Demis and his colleagues before the lecture began.

- Jakob Zeitler, Changmin Yu, Sicelukwanda Zwane, Mirgahney Mohamed, Varsha Ramineni

- Changmin Yu, Prof Marc Deisenroth, Jakob Zeitler

Our students were able to share their accomplishments and research with a number of academics, Google DeepMind executives and other invitees and were delighted to have been included in such a prestigious event.

There were over 900 people attending the talk in person, with over 400 additional attendees online. Special thanks go to the UCL OVPA team and UCL Events for making this happen and sharing the opportunity with our scholars.

The FAICDT Visits UCL East!

By sharon.betts, on 27 November 2023

On Wednesday 15th November, members of all cohorts within the FAICDT visited our Robotics labs at the newly opened UCL East Campus. Prof Dimitrios Kanoulas very kindly offered up his time and expertise in showing our students around both the Marshgate and Pool Street Labs.

Our students were able to interact with the quadruped robots and speak with academics and other researchers about their groundbreaking work in the field of AI and robotics.

The visit to UCL East’s robotics lab was impressive. We saw the Boston Dynamics robot navigate stairs and avoid obstacles, showcasing the practical applications of these technologies. The most striking moment was seeing a robot execute a backflip, which highlighted the advanced capabilities in robotics. Dimitrios Kanoulas kindly also gave us a tour around the Marshgate building! The location’s calm park setting was a nice change from the main campus environment. – Sierra Bonilla, Cohort 5

A great time was had by all, and we are delighted that our students have been able to connect with other researchers in the AI field here at UCL.

The UKRI Inter CDT Conference 30-31 October 2023

By sharon.betts, on 22 November 2023

For the second year running, the UKRI CDT in Foundational AI collaborated with the University of Bath and the University of Bristol to organise an inter-CDT conference at the Bristol Hotel.

This year our CDT put on a session on AI Vision, organised with Prof Lourdes de Agapito, and a student-led session on the Threat of AI, organised with Reuben Adams and Robert Kirk.

The event began with a welcome to all from our FAICDT Deputy Director Gabriel Brostow, followed by a keynote speech by Jasmine Grimsley and Sarah-Jane Smyth from the London Data Company.

The AI Vision session opened the individual conference sessions on day one, and we were delighted to host three eminent academics to discuss their work and research. First, Christian Rupprecht from the University of Oxford discussed ‘Unsupervised Computer Vision in the Time of Large Models’. He was followed by Laura Sevilla-Lara from the University of Edinburgh, who discussed her work on ‘Efficient Video Understanding’, and the session finished with Edward Johns from Imperial College London, discussing his research on ‘Vision-Based Robot Learning of Everyday Tasks’. The room was filled with an eager audience who asked each speaker multiple questions, showing real interest in and excitement about the work being undertaken in this field.

- Christian Rupprecht

- Laura Sevilla-Lara

- Edward Johns

- Audience at AI Vision Session

The rest of the first day saw sessions from the other two CDTs involved, with an agenda for the event available here: UKRI Inter AI CDT Conference 2023 – ART-AI (cdt-art-ai.ac.uk)

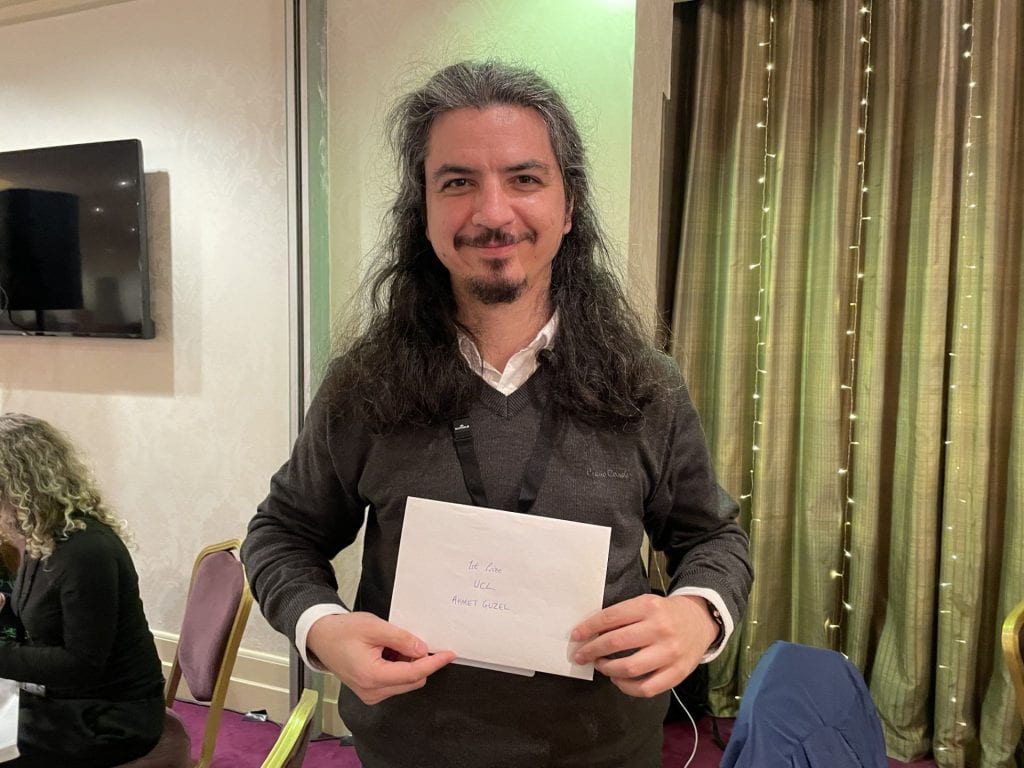

As with our first conference, there was a popular poster session held at M Shed on the afternoon of Day 1, with prizes handed out at our evening dinner. Ahmet Guzel, one of our new cohort 5 students, was the FAICDT winner with a poster that earned outstanding marks from all judges on the day.

Day two started with a keynote speech from our final year student Jakob Zeitler, who is currently on interruption whilst working on his start-up company Matterhorn Studios. Jakob gave an insightful talk on ‘Machine Learning for Material Science: Using Bayesian Optimisation to Create a Sustainable Materials Future’. We were delighted to have Jakob provide a keynote here and it was wonderful to see so many individuals reach out to him after his talk to find out more about his research and work at Matterhorn.

The afternoon session by the FAICDT was led by students Reuben Adams and Robert Kirk. This was a vibrant session, asking attendees to think about where they currently sat on the spectrum of concern with regard to AI safety, and then to have in-depth discussions with one another about possible solutions, potential regulations and the prospects for future safety. The event was interactive and highly engaging!

- Robert Kirk and Reuben Adams

- Interactive Audience on the Safety Spectrum

The event closed with a final keynote by Steven Schockaert from Cardiff University, followed by a networking session for all students before they returned to their home institutions.

All in all, another successful event! With thanks to Brent Kiernan, Christina Squire and Suzanne Binding for being co-organisers extraordinaire!

Understanding and Navigating the Risks of AI – By Reuben Adams

By sharon.betts, on 19 October 2023

It is undeniable at this point that AI is going to radically shape our future. After decades of effort, the field has finally developed techniques that can be used to create systems robust enough to survive the rough and tumble of the real world. As academics we are often driven by curiosity, yet rather quickly the curiosities we are studying and creating have the potential for tremendous real-world impact.

It is becoming ever more important to keep an eye on the consequences of our research, and to try to anticipate potential risks.

This has been the purpose of our AI discussion series that I have organised for the members of the AI Centre, especially for those on our Foundational AI CDT.

I kicked off the discussion series with a talk outlining the ongoing debate over whether there is an existential risk from AI “going rogue,” as Yoshua Bengio has put it. By this I mean a risk of humanity as a whole losing control over powerful AI systems. While this sounds like science fiction at first blush, it is fair to say that this debate is far from settled in the AI research community. There are very strong feelings on both sides, and if we are to cooperate as a community in mitigating risks from AI, it is urgent that we form a consensus on what these risks are. By presenting the arguments from both sides in a neutral way, I hope I have done a small amount to help those on both ends of the spectrum understand each other. You can watch my talk here: https://www.youtube.com/watch?v=PI9OXHPyN8M

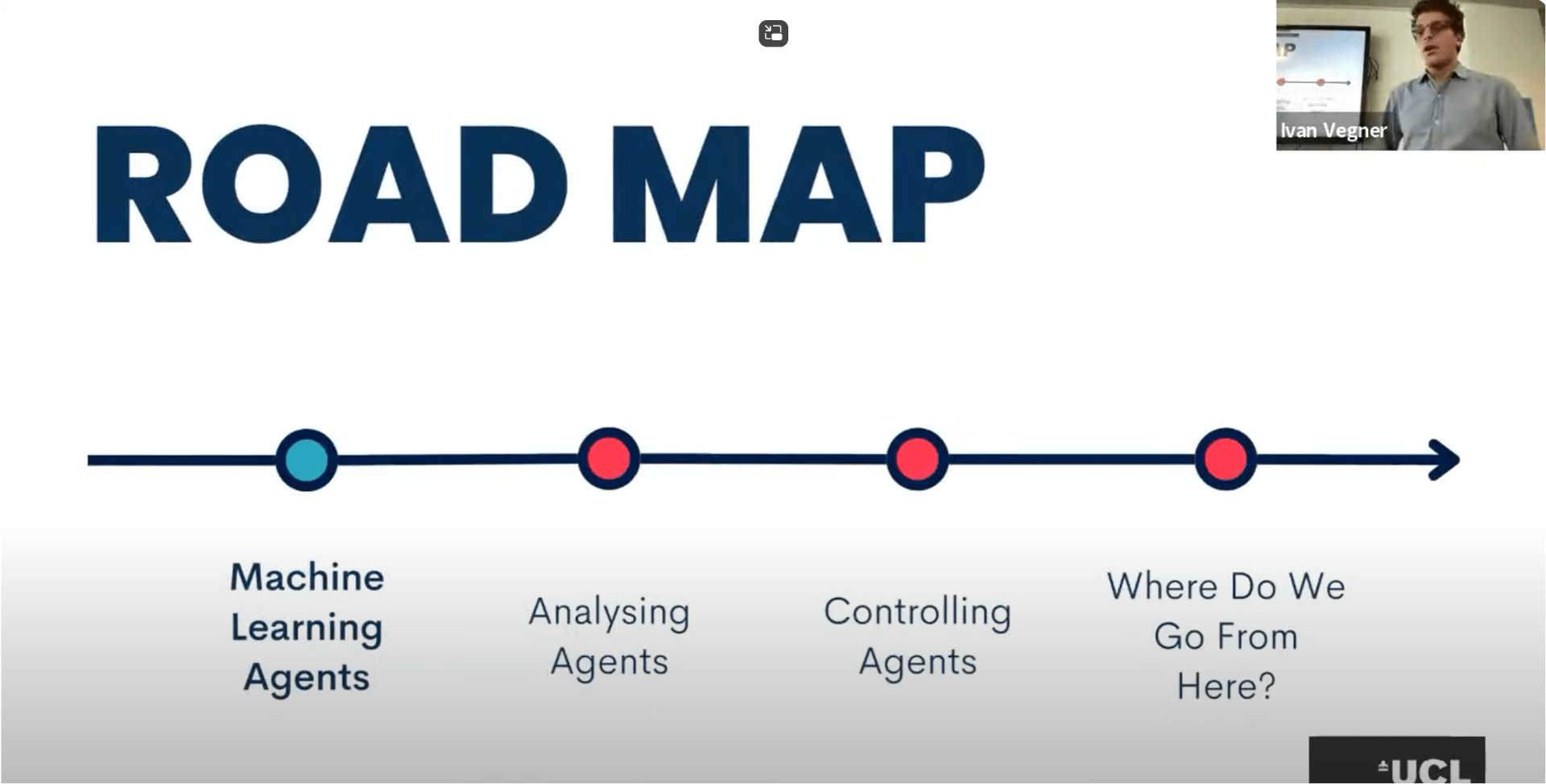

Ivan Vegner, a PhD student in NLP at the University of Edinburgh, was kind enough to travel down for our second talk, on properties of agents in general, both biological and artificial. He argued that sufficiently agentic AI systems, if created, would pose serious risks to humanity, because they may pursue sub-goals such as seeking power and influence or increasing their resistance to being switched off. After all, almost any goal is easier to pursue if you have power and cannot be switched off! Stuart Russell pithily puts this as “You can’t fetch the coffee if you’re dead.” Ivan is an incredibly lucid speaker. You can watch his talk, “Human-like in Every way?”, here: https://www.youtube.com/watch?v=LGeOMA25Xvc

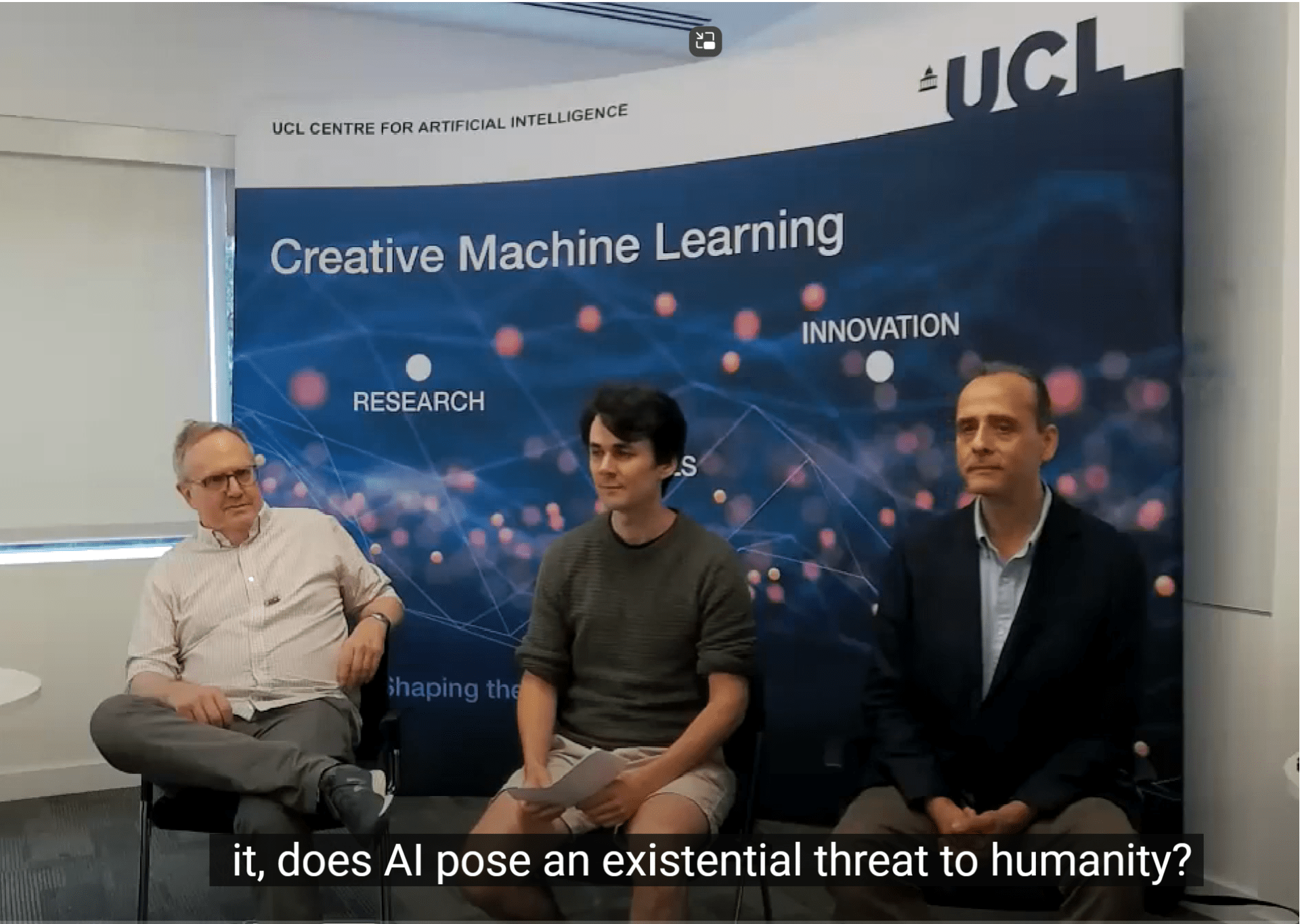

For some, a crux in this existential risk question might be whether AI systems will think like us, or in some alien way. Perhaps we can more easily keep AI systems under control if we can create them in our own image? Or could this backfire, leaving us with systems that have the understanding to deceive or manipulate us? Professors Chris Watkins and Nello Cristianini dug into this question for us by debating the motion “We can expect machines to eventually think in a human-like way.” (Chris for, Nello against). There were many, many questions afterwards, and Chris and Nello very kindly stayed around to continue the conversation. Watch the debate here: https://www.youtube.com/watch?v=zWCUHmIdWhE

Separate from all of this is the question of misuse. Many technologies are dual-use, but their downsides can be successfully limited through regulation. With AI it is different: the scale can be enormous and rapidly increased (often the bottleneck is simply buying or renting more GPUs), there is a culture of immediately open-sourcing software so that anyone can use it, and AI models often require very little expertise to run or adapt to new use-cases. Professor Mirco Musolesi outlined a number of risks he perceives from using AI systems to make decisions autonomously in economics, geo-politics, and warfare. His talk was incredibly thought-provoking; you can watch it here: https://youtu.be/QH9eYPglgt8

This series has helped foster an ongoing conversation in the AI Centre on the risks of AI and how we can potentially steer around them. Suffice it to say, it is a minefield.

We should certainly not forget the incredible potential of AI to have a positive impact on society, from automated and personalised medicine, to the acceleration of scientific and technological advancements aimed at mitigating climate change. But there is no shortage of perceived risks, and currently a disconcerting lack of technical and political strategies to deal with them. Many of us at the AI Centre are deeply worried about where we are going. Many of us are optimists. We need to keep talking and increase our common ground.

We’re racing into the future. Let’s hope we get what AI has been promising society for decades. Let’s try and steer ourselves along the way.

Reuben Adams is a final year PhD student in the UKRI CDT in Foundational AI.

Student presentation – Alex Hawkins Hooker at ISMB

By sharon.betts, on 4 October 2023

“Safe Trajectory Sampling in Model-based Reinforcement Learning for Robotic Systems” By Sicelukwanda Zwane

By sharon.betts, on 29 September 2023

In the exciting realm of Model-based Reinforcement Learning (MBRL), researchers are constantly pushing the boundaries of what robots can learn to achieve when given access to an internal model of the environment. One key challenge in this field is ensuring that robots can perform tasks safely and reliably, especially in situations where they lack prior data or knowledge about the environment. That’s where the work of Sicelukwanda Zwane comes into play.

Background

In MBRL, robots use small sets of data to learn a dynamics model. This model is like a crystal ball that predicts how the system will respond to a given sequence of different actions. With MBRL, we can train policies from simulated trajectories sampled from the dynamics model instead of first generating them by executing each action on the actual system, a process that can take extremely long periods of time on a physical robot and possibly cause wear and tear.
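The loop described above can be sketched in Python using NumPy, under heavy simplifying assumptions. It collects a small batch of transitions, fits a dynamics model, and then generates a trajectory from the model alone. The "real system", its coefficients and the linear model class are all hypothetical stand-ins chosen for brevity. The work described in this post uses GP models rather than a linear least-squares fit.

```python
import numpy as np

def real_step(x, u, rng):
    """Hypothetical 'real' system (a toy 1-D linear system), used only to collect data."""
    return 0.9 * x + 0.5 * u + 0.01 * rng.standard_normal()

def collect_data(n, rng):
    """Collect n (state, action, next_state) transitions using random actions."""
    X, U, Y = [], [], []
    x = 0.0
    for _ in range(n):
        u = rng.uniform(-1.0, 1.0)
        x_next = real_step(x, u, rng)
        X.append(x)
        U.append(u)
        Y.append(x_next)
        x = x_next
    return np.array(X), np.array(U), np.array(Y)

def fit_linear_model(X, U, Y):
    """Least-squares fit of x_next ~= a*x + b*u (a deliberately simple model class)."""
    A = np.column_stack([X, U])
    (a, b), *_ = np.linalg.lstsq(A, Y, rcond=None)
    return a, b

def simulate(model, x0, actions):
    """Roll out a trajectory using only the learned model: no real-system calls."""
    a, b = model
    traj = [x0]
    for u in actions:
        traj.append(a * traj[-1] + b * u)
    return np.array(traj)

rng = np.random.default_rng(0)
X, U, Y = collect_data(30, rng)          # only 30 real transitions
model = fit_linear_model(X, U, Y)
trajectory = simulate(model, x0=1.0, actions=[0.0] * 5)
```

Once the model is fitted, `simulate` can be called as often as a policy-learning algorithm needs. None of those calls touch the physical robot, which is the saving MBRL is after.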

One of the tools often used in MBRL is the Gaussian process (GP) dynamics model. GPs are fully-Bayesian models that not only model the system but also account for the uncertainty in state observations. Additionally, they are flexible and are able to learn without making strong assumptions about the underlying system dynamics [1].
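The sketch below gives a rough illustration of what a GP dynamics model provides: exact GP regression on a toy one-dimensional transition function. Several choices are assumptions for illustration only: the sine-shaped "dynamics", the squared-exponential kernel and the hyperparameter values. A real robot model would take state-action pairs as input and often fits one GP per state dimension.

```python
import numpy as np

def rbf_kernel(A, B, lengthscale=1.0, variance=1.0):
    """Squared-exponential (RBF) kernel between two 1-D input arrays."""
    d = A[:, None] - B[None, :]
    return variance * np.exp(-0.5 * (d / lengthscale) ** 2)

def gp_posterior(X, y, X_test, noise_var=1e-2):
    """Exact GP regression: posterior mean and variance of f at X_test."""
    K = rbf_kernel(X, X) + noise_var * np.eye(len(X))
    K_s = rbf_kernel(X, X_test)
    L = np.linalg.cholesky(K)                        # K = L L^T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    var = rbf_kernel(X_test, X_test).diagonal() - np.sum(v ** 2, axis=0)
    return mean, var

# Hypothetical 1-D "dynamics": next_state = sin(state), observed at 9 states.
X = np.linspace(-2.0, 2.0, 9)
y = np.sin(X)
mean, var = gp_posterior(X, y, np.array([0.0, 1.0, 6.0]))
```

The second output is the one to watch. Near observed states (0.0 and 1.0) the posterior variance is small. At 6.0, far from any data, it reverts towards the prior variance of 1.0, and MBRL methods can exploit exactly this uncertainty information.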

The Challenge of Learning Safely

When we train robots to perform tasks, it’s not enough to just predict what will happen; we need to do it safely. As with most model classes in MBRL, GPs don’t naturally incorporate safety constraints, which means they may produce unsafe or infeasible trajectories. This is particularly true during the early stages of learning: when the model hasn’t seen much data, it can produce unsafe and seemingly random trajectories.

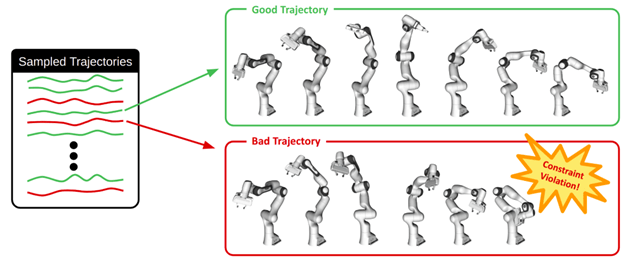

For a 7-degree-of-freedom (7-DoF) manipulator robot, bad trajectories may contain self-collisions.

Distributional Trajectory Sampling

In standard GP dynamics models, the posterior is represented in distributional form, using its parameters: the mean vector and covariance matrix. In this form, it is difficult to reason about the safety of entire trajectories, because trajectories are generated through iterative random sampling. Furthermore, this kind of trajectory sampling is limited to cases where the intermediate state marginal distributions are Gaussian.
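The sketch below illustrates, under simplifying assumptions, what iterative random sampling looks like. At each step it computes the GP's Gaussian predictive distribution at the current state and samples a fresh next state from it. The toy data and kernel settings are hypothetical, and this is not code from the work described.

```python
import numpy as np

def rbf(A, B, ls=1.0, variance=1.0):
    """Squared-exponential kernel on 1-D inputs."""
    return variance * np.exp(-0.5 * ((A[:, None] - B[None, :]) / ls) ** 2)

def predictive(X, y, x, noise_var=1e-4):
    """Gaussian predictive mean and variance of f at a single state x."""
    K = rbf(X, X) + noise_var * np.eye(len(X))
    k = rbf(X, np.array([x]))[:, 0]
    mean = k @ np.linalg.solve(K, y)
    pred_var = 1.0 - k @ np.linalg.solve(K, k)     # prior variance is 1.0
    return mean, max(pred_var, 0.0)

def sample_next(X, y, x, rng):
    """One step of distributional sampling: an independent Gaussian draw at state x."""
    m, v = predictive(X, y, x)
    return m + np.sqrt(v) * rng.standard_normal()

def iterative_rollout(X, y, x0, steps, rng):
    """Build a trajectory by repeatedly sampling from per-step Gaussian marginals."""
    traj = [x0]
    for _ in range(steps):
        traj.append(sample_next(X, y, traj[-1], rng))
    return np.array(traj)

X = np.linspace(-2.0, 2.0, 9)
y = np.sin(X)                                      # hypothetical toy dynamics data
rng = np.random.default_rng(0)
traj = iterative_rollout(X, y, 1.5, 10, rng)
# Far from the data, querying the SAME state twice gives unrelated successors.
a, b = sample_next(X, y, 5.0, rng), sample_next(X, y, 5.0, rng)
```

Every step is an independent draw, so no single function lies behind the trajectory. That means there is nothing fixed that could be checked against a safety constraint before the rollout is made.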

Pathwise Trajectory Sampling

Zwane uses an innovative alternative called “pathwise sampling” [3]. This approach draws samples from GP posteriors using an efficient method called Matheron’s rule. The result is a set of smooth, deterministic trajectories that aren’t confined to Gaussian distributions and are temporally correlated.
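Pathwise sampling with Matheron's rule can be sketched in the decoupled form described by Wilson et al. [3]. First, a prior function is drawn using random Fourier features. An exact kernel update then turns it into a posterior sample: f_post(x) = f_prior(x) + k(x, X)(K + σ²I)^{-1}(y - f_prior(X) - ε). The toy 1-D data, the hyperparameters and the feature count are assumptions, and this is not Zwane's implementation.

```python
import numpy as np

def rbf(A, B, ls=1.0, var=1.0):
    """Squared-exponential kernel on 1-D inputs."""
    return var * np.exp(-0.5 * ((A[:, None] - B[None, :]) / ls) ** 2)

def pathwise_sample(X, y, rng, noise_var=1e-4, ls=1.0, var=1.0, n_features=2000):
    """Draw ONE posterior function sample via Matheron's rule (decoupled form).

    Prior draw: random Fourier features approximating the RBF kernel.
    Update:     exact kernel, f_post(x) = f_prior(x) + k(x, X) v,
                with v = (K + noise_var I)^{-1} (y - f_prior(X) - eps).
    """
    omega = rng.standard_normal(n_features) / ls          # spectral frequencies
    phase = rng.uniform(0.0, 2.0 * np.pi, n_features)
    w = rng.standard_normal(n_features)                   # random feature weights
    scale = np.sqrt(2.0 * var / n_features)

    def f_prior(x):
        return scale * np.cos(np.outer(x, omega) + phase) @ w

    eps = np.sqrt(noise_var) * rng.standard_normal(len(X))
    K = rbf(X, X, ls, var) + noise_var * np.eye(len(X))
    v = np.linalg.solve(K, y - f_prior(X) - eps)

    def f_post(x):
        x = np.atleast_1d(np.asarray(x, dtype=float))
        return f_prior(x) + rbf(x, X, ls, var) @ v

    return f_post

def rollout(f, x0, steps):
    """Deterministic trajectory x_{t+1} = f(x_t) under a single sampled function."""
    traj = [x0]
    for _ in range(steps):
        traj.append(float(f(traj[-1])[0]))
    return np.array(traj)

X = np.linspace(-2.0, 2.0, 9)
y = np.sin(X)                                  # hypothetical toy dynamics data
rng = np.random.default_rng(1)
particles = [pathwise_sample(X, y, rng) for _ in range(5)]
trajectories = [rollout(f, 1.5, 10) for f in particles]
```

Each sample is an ordinary deterministic function, so re-running `rollout` with the same sample reproduces the same smooth trajectory. Several samples together act as particles.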

Adding Safety

The beauty of pathwise sampling [3] is that it yields a particle representation of the GP posterior, in which individual trajectories are smooth, differentiable, and deterministic functions. This makes it possible to separate constraint-violating trajectories from safe ones. For safety, rejection sampling discards trajectories that violate the safety constraints, leaving only the safe ones for training the policy. Additionally, soft constraint penalty terms are added to the reward function.
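The filtering and penalty steps can be sketched as follows, in a hypothetical and heavily simplified form. Here trajectories are lists of end-effector heights, and the only constraint is a ceiling. The linear hinge penalty, the margin and the weight are assumed values, not those used in the paper.

```python
CEILING = 1.0   # hypothetical ceiling height (m)
GOAL = 0.8      # hypothetical goal height (m)

def violates(traj, ceiling=CEILING):
    """Hard constraint check: does any state in the trajectory go above the ceiling?"""
    return any(z > ceiling for z in traj)

def reject_unsafe(trajs, ceiling=CEILING):
    """Rejection step: keep only trajectories that never violate the constraint."""
    return [t for t in trajs if not violates(t, ceiling)]

def penalised_return(traj, goal=GOAL, ceiling=CEILING, margin=0.1, weight=10.0):
    """Task reward (negative distance to the goal height) minus a soft penalty
    that grows linearly once a state comes within `margin` of the ceiling."""
    total = 0.0
    for z in traj:
        total -= abs(goal - z)
        gap = ceiling - z
        if gap < margin:
            total -= weight * (margin - gap)
    return total

particles = [
    [0.0, 0.5, 0.8, 0.8],    # safe, comfortably below the ceiling
    [0.0, 0.6, 1.1, 0.8],    # crosses the ceiling -> rejected
    [0.0, 0.7, 0.95, 0.9],   # safe, but skims the ceiling -> penalised
]
safe = reject_unsafe(particles)
scores = [penalised_return(t) for t in safe]
```

The two mechanisms do different jobs. Rejection removes trajectories that break the constraint, while the soft penalty steers the policy away from trajectories that are merely close to breaking it.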

Sim-Real Robot Experiments

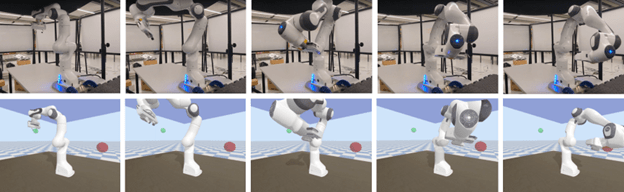

To put this approach to the test, Zwane conducted experiments involving a 7-DoF robot arm in a simulated constrained reaching task, where the robot has to avoid colliding with a low ceiling. The method successfully learned a reaching policy that adhered to safety constraints, even when starting from random initial states.

In this constrained manipulation task, the robot is able to reach the goal (shown by the red sphere – bottom row) without colliding with the ceiling (blue – bottom row) using less than 100 seconds of data in simulation.

Summary

Sicelukwanda Zwane’s research makes incremental advances in the safety of simulated trajectories by incorporating safety constraints while keeping the benefits of fully Bayesian dynamics models such as GPs. This method promises to take MBRL out of simulated environments and make it more applicable to real-world settings. If you’re interested in this work, we invite you to dive into the full paper, published at the recent IEEE CASE 2023 conference.

References

- [1] M. P. Deisenroth and C. E. Rasmussen. PILCO: A Model-based and Data-efficient Approach to Policy Search. ICML, 2011.

- [2] S. Kamthe and M. P. Deisenroth. Data-Efficient Reinforcement Learning with Probabilistic Model Predictive Control. AISTATS, 2018.

- [3] J. T. Wilson, V. Borovitskiy, A. Terenin, P. Mostowsky, and M. P. Deisenroth. Pathwise Conditioning of Gaussian Processes. JMLR, 2021.

Student-Led Workshop – Distance-based Methods in Machine Learning – Review by Masha Naslidnyk

By sharon.betts, on 3 July 2023

We are delighted to announce the successful conclusion of our recent workshop on Distance-based Methods in Machine Learning. Held at the historic Bentham House on 27-28 June, the event brought together approximately 60 delegates, including leading experts and researchers from statistics and machine learning.

The workshop showcased a diverse range of speakers who shared their knowledge and insights on the theory and methodology behind machine learning approaches that use kernel-based and Wasserstein distances. Topics covered included parameter estimation, generalised Bayes, hypothesis testing, optimal transport, optimisation, and more.

The interactive sessions and engaging discussions created a vibrant learning environment, fostering networking opportunities and collaborations among participants. We extend our gratitude to the organising committee, speakers, and attendees for their valuable contributions to this successful event. Stay tuned for future updates on similar initiatives as we continue to explore the exciting possibilities offered by distance-based methods in machine learning.

Happy attendees at the Distance-based learning workshop

- With thanks to the organisers of this event.

- Antonin Schrab presenting his research

- Student organiser Masha Naslidnyk presenting her research

AI Hackathon at Cumberland Lodge – Recap of Student Led Event

By sharon.betts, on 2 June 2023

We recently organised an AI hackathon, attended by both the members of our CDT and students from AI-focused CDTs at other universities. The hackathon was the main component of a two-night retreat hosted at Cumberland Lodge, a country house and conference venue in the beautiful Windsor Great Park. The event was student-led, and an exciting opportunity to explore new research directions, brainstorm start-up ideas, and build connections with other PhD students in the field.

During the hackathon we split into small groups, each working on a project proposed in advance by the attendees. Lots of ambitious projects were suggested, and it was impressive to see them carried out successfully. These included a web app for language learners that uses speech recognition to judge and correct Mandarin tone pronunciation; an investigation into the capabilities of large language models for solving cryptic crosswords, culminating in a thrilling live demo; and mapping out gaps in the market for a waste-manipulation robotics start-up.

Most excitingly, a couple of the teams have decided to continue developing their projects after the event, with new apps and conference papers in the works!

In addition to the hackathon, the students attending from outside of the CDT in Foundational AI presented their PhD research during a poster session. G-Research also attended the retreat, kindly providing welcome drinks on the first night, and hosting a prize giving for their research competition. There were also ample opportunities for socialising over meals and in the bar, and exploring the sunny surroundings of the park.

Thank you to the CDT management for helping with organising the event, and all the attendees for making it a success. We hope to arrange something similar next year!

Authors

Oscar Key and Robert Kirk

Day Dream Believing? Thinking about World Models. By Rokas Bendikas

By sharon.betts, on 23 May 2023

I am interested in discussing an intriguing concept in machine learning, which promises to revolutionize the way we approach learning in robotics: World Models.

At a high level, World Models aim to create a compact and controllable representation of the world. Think of it as a mental simulation or an internal mini-world where AI can experiment, explore, and ‘imagine’ different scenarios, all without the need for real-world interactions. It’s like creating a sandbox game for AI, where it can learn the ropes before stepping out into the real world. 🧠🕹

Let’s contrast this with the conventional end-to-end learning methods. These traditional approaches typically require vast amounts of real-world data and intensive training, which can be time-consuming, computationally expensive, and let’s face it, data-inefficient.

That’s where the beauty of World Models shines. By letting an AI agent ‘dream’, simulating possible scenarios in its internal model of the world, World Models help it learn faster and more efficiently. The agent can also plan and strategise better by running various ‘what-if’ scenarios within its world model. Imagine playing chess and being able to simulate possible moves in your mind before making your move – that’s the advantage of World Models in a nutshell! 🎲🚀
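The 'what-if' idea can be made concrete with a toy sketch of planning inside a model. Many candidate action sequences are rolled out purely in imagination and scored, and only the first action of the best one would be executed in the real world. Two things here are swapped in for illustration. The one-dimensional `world_model` is a hand-written stand-in for a learned model, and random-shooting planning is not the algorithm used in the DayDreamer paper.

```python
import random

def world_model(state, action):
    """Hypothetical stand-in for a learned world model: a 1-D point moved by `action`."""
    return state + action

def imagined_return(model, state, actions, goal):
    """'Dream' a rollout inside the model and score it; no real-world steps are taken."""
    total = 0.0
    for a in actions:
        state = model(state, a)
        total -= abs(goal - state)       # reward: stay close to the goal
    return total

def plan(model, state, goal, horizon=5, n_candidates=1000, seed=0):
    """Random-shooting planner: try many 'what-if' action sequences in imagination
    and return the first action of the best one, plus its imagined return."""
    rng = random.Random(seed)
    best_seq, best_ret = None, float("-inf")
    for _ in range(n_candidates):
        seq = [rng.uniform(-1.0, 1.0) for _ in range(horizon)]
        ret = imagined_return(model, state, seq, goal)
        if ret > best_ret:
            best_seq, best_ret = seq, ret
    return best_seq[0], best_ret

first_action, best_ret = plan(world_model, state=0.0, goal=3.0)
```

In a model-predictive loop, the agent would execute `first_action`, observe the new state and plan again. All 1000 × 5 imagined steps happen inside the model rather than on a robot.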

The ‘DayDreamer’ paper is a fantastic resource if you’re keen to delve into the specifics of this innovative approach. It opens up new vistas in our quest for smarter and more data-efficient learning in robotics.

In a world where data is king but also a constraint, World Models are pioneering a path towards more strategic, efficient, and thoughtful AI. So, let’s continue learning, exploring, and innovating. After all, the future of AI is as exciting as we dare to imagine!
