Earlier this week UCL hosted a one-day event entitled Reimagining Assessment and Feedback, the second in a series of Jisc events on Demonstrating digital transformation. The event was held in Bentham House, the main building of the UCL Faculty of Laws. The purpose of these events is to share best practice from universities that have made significant advances in developing innovative approaches to taking forward their digital agenda. As with the Jisc framework for digital transformation, the events are designed to showcase the broad spectrum of activity needed across an institution to effectively support and implement a digital culture.

The event organising team: Simon Birkett (Jisc), Peter Phillips (UCL) and Sandra Lusk (UCL)

The event gave the 50+ delegates the opportunity to hear how UCL has evolved its assessment and feedback practices and processes, and the role technology has played in that. Here at UCL we have been at the forefront of the shift to digital assessment and have successfully implemented a digital assessment platform for all centrally managed assessments taken remotely. To achieve this we have needed to address other challenges, including assessment design, consistency across programmes, regulations and policy, and enhanced support for professional development.

Opening plenary

The event was opened by Pro-Vice-Provost Education (Student Academic Engagement) Kathryn Woods, who talked briefly about our wider institutional change programme, including the UCL strategic plan 2022-27 consultation and the Education framework.

Simon Walker presents on assessment at UCL

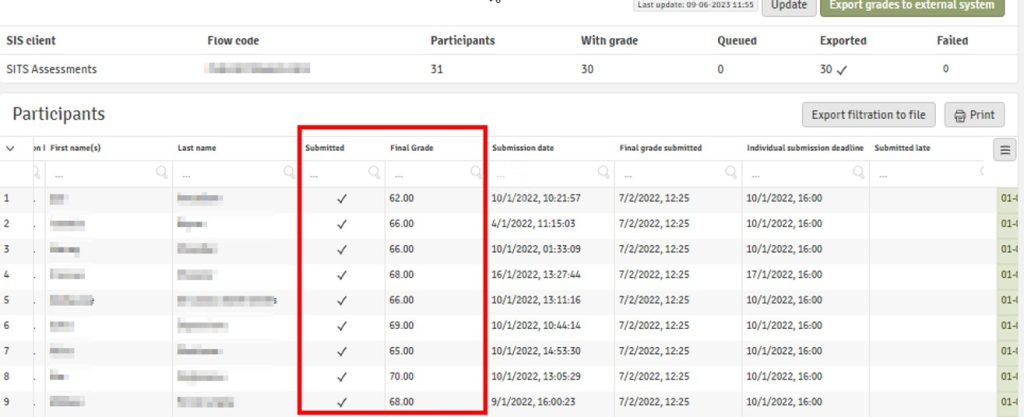

Professor Simon Walker (previously Director of Programme Development, UCL, now an educational consultant) and I provided an overview of the UCL assessment journey. We discussed the implementation of Wiseflow/AssessmentUCL and the subsequent challenges we have faced regarding AI and academic integrity. Although we haven’t resolved every problem, we have encountered numerous challenges along the way and have a valuable story to share. You can see our slides below.

Breakout groups

There were two sets of breakout group sessions on core themes with lunch slotted in between. Each session featured a UCL facilitator to give an opening introduction, a Jisc scribe to lead the related Padlet board and a UCL student to give the student perspective.

Demonstrating digital transformation – assessment futures

This session considered the potential future of assessment in higher education. Participants looked at areas including AI, assessment technology, and new ideas and ways of working in assessment. The group discussed a wide range of challenges, from limited understanding of AI technology and capacity constraints to false alerts from AI detection, digital inequality and ethical considerations. Proposed solutions included student co-design, an emphasis on assessment design, oral evaluations and better use of AI for formative assessment.

Demonstrating digital transformation – Academic integrity

Discussions in the academic integrity session

This session considered ways to ensure academic integrity is maintained across the institution through design, education and detection. It considered how policy and regulations need to change in light of the new challenges that technology brings. Much of the discussion covered current practices such as the use of AI proctoring for remote assessments, efforts to establish clear assessment requirements, and the conduct of fair assessment misconduct panels. Challenges included clarifying terminology, legal concerns surrounding AI usage, and the implications of more diverse assessment formats for identifying misconduct. Effective strategies identified included additional training for students during the transition into higher education, varied assessment formats, technical approaches such as random question allocation and limited time allocation, and less punitive approaches to academic integrity, such as guiding students through academic practice and referencing requirements.

Demonstrating digital transformation – Institutional change

Kathryn Woods facilitates the breakout session on managing institutional change

This session considered how institutions manage change and encourage new academic practices. The group looked at areas including the framing of change and the balance between cultural and technological change. The main challenges explored concerned large cohorts, a diverse student body, the digital skills of academic staff and general change fatigue. Successful practices highlighted included feedback from externals and industry experts, personalised feedback at scale, external engagement for formative feedback, and audio feedback. The support needed to enable this includes surfacing assessment and feedback technologies, integrating professional services into curriculum development teams, and providing timely technical and pedagogic support for staff.

Demonstrating digital transformation – Pedagogy and assessment design

This session considered the full assessment design process, and the focal points and drivers for the different staff involved in making changes. The group looked at areas including what contemporary assessment design looks like: authentic, socially just, reusable and so on. Interesting practice included assessment processes that focus on the production and process of assessment, the use of student portfolios for employability, co-designing assessments with employers, and creative and authentic assessments using tools such as Adobe Creative Suite. The main challenges identified were the impact of high-stakes assessment and grades on students, clarifying what is actually being assessed, aligning institutional priorities with assessment innovation, and supporting group work assessment. Future support could involve using the Postgraduate Certificate (PG Cert) programme to address assessment and curriculum design alongside technology and digital skills.

Demonstrating digital transformation – Larger cohorts and workloads

This session considered how to assess large student cohorts from different disciplines in highly modularised programmes. It looked at areas including workload models, interdisciplinary assessment and integrated assessment. There are already examples of interdisciplinary group work, contribution marks used to evaluate individual effort in group work, peer assessment, multiple-choice questions (MCQs), and marking teams for large cohorts. However, these approaches face challenges, including professional, statutory and regulatory body (PSRB) accreditation processes, modularisation of assessment, over-assessment and duplication, and the difficulty of scaling alternative assessment practices. One of the best approaches identified was a programme-level assessment strategy that embraces the principle of “less is more” by focusing on quality rather than quantity.

Demonstrating digital transformation – Strategic direction

This session considered how to respond to the drivers of change and run a co-design process across an institution, looking at environmental scanning, stakeholder involvement and styles of leadership. The challenges identified included ensuring continuity while managing future aspirations, taking account of student demographics, and adopting an agile approach to strategy development. Clear communication about assessment and agility in strategic thinking were identified as practices that work well. The support needed includes access to assessment platforms and curriculum mapping software, partnership support from industry organisations, and collaboration with Jisc and UCISA to advocate for change across the sector.

Panel session

The afternoon panel session on assessment, chaired by Sarah Knight, Head of Learning and Teaching Transformation at Jisc, featured a diverse group of experts from academia and student engagement, all of whom provided valuable insights.

Panel session: Mary McHarg, Dr Irene Ctori, Professor Sam Smidt, Dr Ailsa Crum, Marieke Guy, and Sarah Knight

- Professor Sam Smidt, the Academic Director of King’s Academy, KCL;

- Dr Irene Ctori, Associate Dean of Education Quality and Student Experience at City, University of London;

- Mary McHarg, SU Activities and Engagement Sabbatical Officer at UCL;

- Dr Ailsa Crum, Director of Membership, Quality Enhancement and Standards at QAA;

- Marieke Guy, Head of Digital Assessment at UCL.

Each panellist introduced themselves, explained their role and organisation, and outlined their current work on assessment. They then shared their key takeaways from the day’s discussions and presentations. These included the need to work together collaboratively as a sector and to look at more fundamental areas, such as curriculum design, as places where change could originate. Some also noted the absence of discussion around feedback, which is striking given that National Student Survey (NSS) scores depend heavily on getting feedback right. The panel addressed important questions, including how higher education providers can better support students’ assessment literacy, how universities can enable staff to use technology effectively for assessment and feedback, how to engage in dialogue with PSRBs about technology in assessment, and what assessment methods might look like in five years’ time. One of the most interesting questions put to the panel was what they would change about assessment if they had a magic wand. Much of the focus was on the current grading model, along with other areas of potential such as improving assessment for students with adjustments by adding optionality and better support.

The day concluded with an overview of the support available from Jisc, given by Simon Birkett, Senior Consultant (see slides). Tweets from the day can be found under the #HEdigitaltx hashtag. Many of the attendees were then treated to a bespoke UCL tour led by Steve Rowett, Head of the Digital Education Futures team. The highlight for many was Jeremy Bentham’s auto-icon.

It was great to bring together those working on strategic change in assessment and feedback across UK higher education. Clearly there is much work to be done, but a sector-wide understanding and appreciation of the difficulties faced, and a unified approach to ensuring the quality of the student experience and learning benefit, can only be a good thing.

This article is reposted from the Digital Assessment Team blog.
