PeerWise – Report on Outcomes of e-Learning Development Grant

by Sam Green and Kevin Tang

Department of Linguistics, UCL

Introduction

In February 2012, we obtained Teaching Innovation Grant funding to pilot the use of PeerWise on one module; the project reported here aimed to extend and embed its use. PeerWise is an online repository of multiple-choice questions (MCQs) that are created, answered, rated and discussed by students. It involves students in the formative assessment and feedback process and enables them to develop a number of key skills that enhance their employability, including negotiating meaning with others, cross-cultural communication, and analytical and evaluation skills as they engage with the work of their peers.

Overall aims and objectives

Since we had excellent feedback on our pilot course, we aimed to extend the use of PeerWise to a further three large and diverse modules in 2012/13. In doing so, we would investigate any accessibility issues, as well as the possibility of integrating PeerWise with software used by our students (e.g. software for representing syntactic structures). The PGTAs would adapt the material developed in 2011/12 to provide guidelines, training, and ongoing support to PGTAs and academic staff running modules that use PeerWise, and would run introductory sessions for students. In addition, they would support the PeerWise assessment process, which would in turn contribute to the module marks. The PGTAs would also help disseminate the project outcomes and share good practice.

Methodology – Explanation of what was done and why

Introductory session with PGTAs:

Before the start of term, the experienced PGTAs ran a session for PGTAs teaching on modules that use PeerWise. It covered the structure and technical aspects of the system, how it would be implemented in their module, and, importantly, marks and grading. It also highlighted the importance of teamwork and explained why participation from both the teaching team and students was necessary. New PGTAs were also given an introductory pack of materials so that they could quickly adapt the system to their own modules (see Dissemination below).

Introductory session with students:

Students taking modules with a PeerWise component were required to attend a two-hour training and practice workshop run by the PGTAs teaching on their module. After being given log-in instructions, students worked in a test environment set up by the PGTAs, containing a range of sample questions (written by the PGTAs) relating to their module. This showed students the quality and level of difficulty of questions required, as well as the ways media could be used. More generally, students were shown how to give useful feedback and how to write effective, educational questions, and were told about the requirements of their module.

Our PGTA, Thanasis Soultatis, giving an introductory session on PeerWise for students

Our PGTA, Kevin Tang, giving an introductory session on PeerWise for students

Dissemination (see below)

Course integration

In its second academic year, PeerWise was integrated into modules with the following requirements:

- participation was compulsory for both BA and MA students (previously only BA students)

- students worked in teams to create questions

- students worked individually to answer questions

- the workload was divided into six weekly deadlines

- questions were to be based on that week’s material

In the pilot implementation of PeerWise, BA students, but not MA students, were required to participate. BA students participated more than MA students, but the MA students nevertheless engaged with the system. We therefore decided to make PeerWise a compulsory element of the module for all students, to maximise the benefits of peer learning.

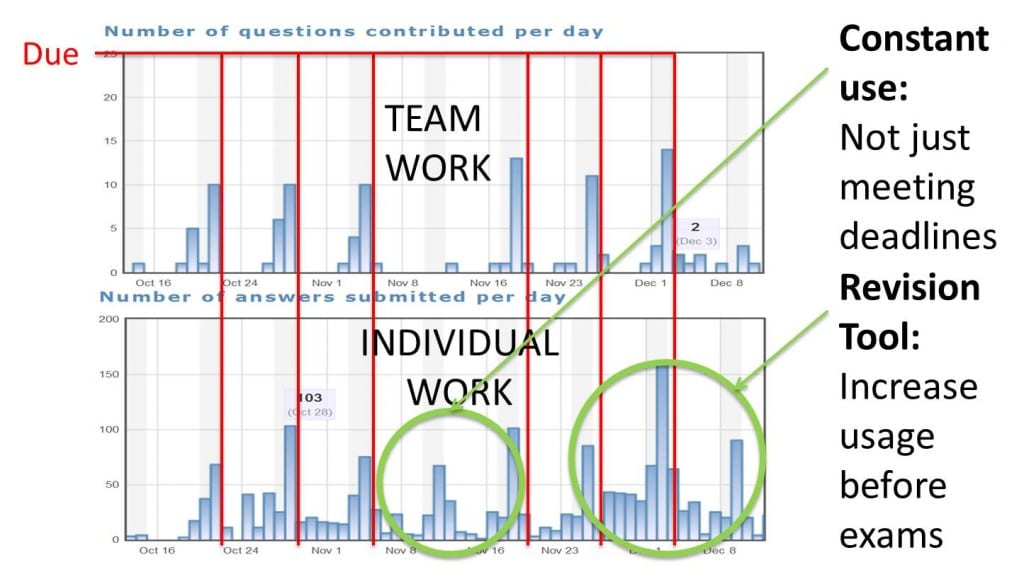

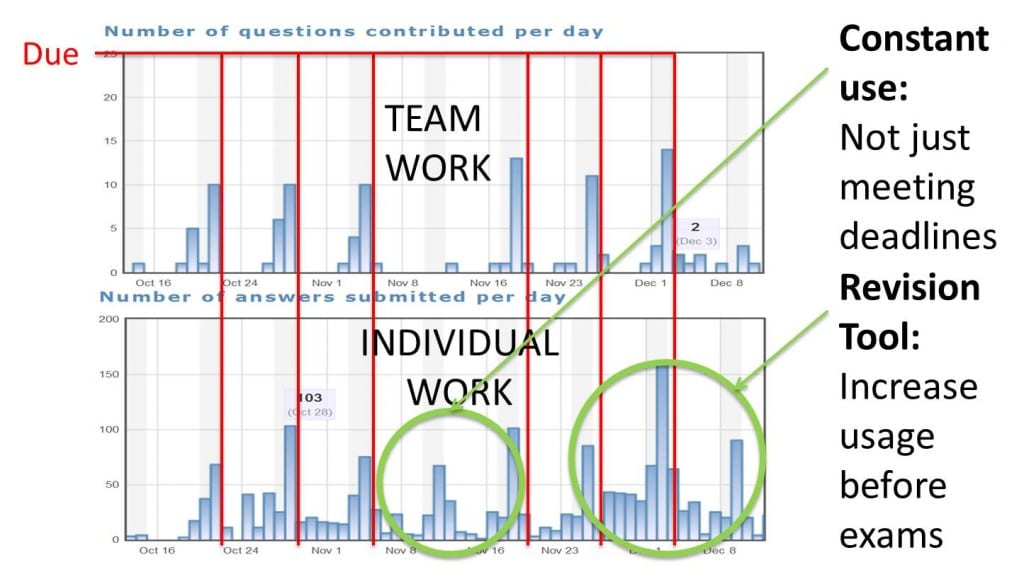

Following meetings with CALT member Dr. Rosalind Duhs, we decided that, because creating questions is difficult, students should create them in ‘mixed-ability’ groups. However, so that individual performance could be monitored, questions had to be answered individually. The deadlines ensured that students engaged with each week’s material throughout the course; this spread out the workload and also produced an effective revision tool (see below for the use of PeerWise for revision by MA students).

Technical improvement

PeerWise can host images, subject to a limit on image size, and YouTube clips can be embedded, but sound or video files cannot be embedded or uploaded directly. To get around this, we consulted Domi Sinclair (ELE Services), who pointed out that an existing UCL system could host media not supported by PeerWise, which could then be linked from questions. All UCL students have a ‘MyPortfolio’ account, which can be customised to provide external links (among other things) that can be anonymised, as needed for MCQs. PGTAs were given instructions to pass on to students.

Project outcomes – Description and examples of what was achieved or produced

To evaluate the outcomes of the project, we analysed the student performance data provided by PeerWise.

Active Engagement

Using the PeerWise administration tools, we were able to observe student participation over time. This revealed that students met the question-creation deadlines as required, but did not simply rush to finish at the last minute; many worked on the weekly task throughout the week. Questions were also answered throughout the week, suggesting that students did not see the task purely as a chore. Moreover, most students answered more than the required number of questions, again showing their willing engagement. Finally, the MA students (who had an exam as the final part of the module assessment) used PeerWise as a revision tool entirely of their own accord. Because they had created questions regularly throughout the module, they had built up a repository of revision topics with questions, answers, and explanations.


Correlations with module performance

PeerWise provides each student with a PeerWise score, composed of component scores for writing questions, answering questions, and rating existing questions. To increase the total score, a student needs to score well on each component.

The students should:

- write relevant, high-quality questions with well thought out alternatives and clear explanations

- answer questions thoughtfully

- rate questions fairly and leave constructive feedback

- use PeerWise early, as the score increases over time based on their contribution history

We computed correlations between the PeerWise scores and the module scores to test the effectiveness of PeerWise for students’ learning, and performed a nested-model comparison to test whether PeerWise grouping helped predict students’ performance. The results differed between BA and MA students in Term 1, but not in Term 2, after the BA students’ PeerWise groups had been reorganised.

Term 1:

The BA students showed no correlation between PeerWise scores and module scores, while the MA students showed a highly significant positive correlation (r = 0.49, p < 0.001***).

MA Students – Term 1 – Correlation between PeerWise Scores and Exam Scores

In light of this finding, we tried to identify the reasons for this divergence. Consulting the TAs who allocated the PeerWise groups (three students per group), we found that the BA students had been grouped randomly rather than by mixed ability, whereas the MA students had been grouped by mixed ability. We therefore hypothesised that mixed-ability grouping is essential to the successful use of the system. To test this hypothesis, we asked the BA students’ TA, who had no knowledge of the students’ PeerWise scores in Term 1, to regroup them by mixed ability, while the PeerWise groups for the MA students remained largely the same.
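The report does not describe exactly how the TAs formed mixed-ability groups. One common way to do it, shown here purely as an illustrative sketch, is a "snake draft": rank students by a prior measure of performance (the BA regrouping was based on Term 1 performance), then deal them into groups in alternating directions, so that each group of three receives roughly one student from the top, middle and bottom of the ranking. The function name, the score dictionary and the tie-breaking rule are assumptions, not the TAs' actual procedure.

```python
def mixed_ability_groups(scores: dict[str, float], size: int = 3) -> list[list[str]]:
    """Hypothetical mixed-ability grouping via a 'snake draft' over ranked students."""
    # Rank students from highest to lowest prior score; ties are broken by name
    # so that the result is deterministic.
    ranked = sorted(scores, key=lambda name: (-scores[name], name))
    # Aim for groups of `size`; leftover students are spread over existing
    # groups, so a few groups may end up with one extra member.
    n_groups = max(1, len(ranked) // size)
    groups: list[list[str]] = [[] for _ in range(n_groups)]
    for i, name in enumerate(ranked):
        round_no, pos = divmod(i, n_groups)
        # Even rounds fill groups left to right, odd rounds right to left,
        # so the group that got the strongest pick in one round gets the
        # weakest pick in the next.
        idx = pos if round_no % 2 == 0 else n_groups - 1 - pos
        groups[idx].append(name)
    return groups


# Hypothetical example: nine students with made-up prior scores (s0 highest).
example = {f"s{i}": 100 - i for i in range(9)}
print(mixed_ability_groups(example))
# [['s0', 's5', 's6'], ['s1', 's4', 's7'], ['s2', 's3', 's8']]
```

A snake order, rather than simply dealing in one direction, stops the first group from always receiving the highest-ranked student in every round.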

Term 2:

Assessment in Term 2 was based on three assignments spread over the term. We ran three correlation analyses, testing the final PeerWise score (taken at the end of Term 2) against the scores for each of Assignments 1, 2 and 3.

With the BA students, the PeerWise score correlated with all three assignments, with increasing levels of statistical significance: Assignment 1 (r = 0.44, p = 0.0069**), Assignment 2 (r = 0.47, p = 0.0040**) and Assignment 3 (r = 0.47, p = 0.0035**).

With the MA students, the findings were similar, except that the correlation with Assignment 1 fell just short of significance: Assignment 1 (r = 0.28, p = 0.0513), Assignment 2 (r = 0.46, p = 0.0026**) and Assignment 3 (r = 0.33, p = 0.0251*).
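The report gives r and p for each analysis but not how p is obtained (the analyses themselves were run in R). As a dependency-free sketch, assuming the standard Pearson test, Pearson's r can be tested against zero with t = r·√((n − 2)/(1 − r²)) on n − 2 degrees of freedom. Here the two-sided p-value is obtained by numerically integrating the Student t density with Simpson's rule rather than by calling a statistics library. The function names and toy data are hypothetical; they are not the study's data.

```python
import math


def t_two_sided_p(t: float, df: int, steps: int = 2000) -> float:
    """Two-sided p-value for Student's t, by Simpson's rule on the t density."""
    # Normalising constant of the t density (log-gamma avoids overflow for large df).
    c = math.exp(math.lgamma((df + 1) / 2) - math.lgamma(df / 2)) / math.sqrt(df * math.pi)

    def density(x: float) -> float:
        return c * (1 + x * x / df) ** (-(df + 1) / 2)

    # Integrate the density from 0 to |t|; `steps` must be even for Simpson's rule.
    h = abs(t) / steps
    area = density(0.0) + density(abs(t))
    for k in range(1, steps):
        area += (4 if k % 2 else 2) * density(k * h)
    central = area * h / 3  # P(0 <= T <= |t|)
    # Both tails = 1 - P(-|t| <= T <= |t|); clamp tiny negative rounding errors.
    return max(0.0, 1.0 - 2.0 * central)


def pearson_test(x: list[float], y: list[float]) -> tuple[float, float]:
    """Pearson's r for paired scores and its two-sided p-value (H0: rho = 0)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    r = sxy / math.sqrt(sxx * syy)
    if abs(r) >= 1.0:
        return r, 0.0  # perfect correlation: t is infinite
    # t statistic for a correlation coefficient, with n - 2 degrees of freedom.
    t = r * math.sqrt((n - 2) / (1 - r * r))
    return r, t_two_sided_p(t, n - 2)


# Toy data only (not the study's scores).
r, p = pearson_test([1, 2, 3, 4, 5], [2, 1, 4, 3, 5])
print(f"r = {r:.2f}, p = {p:.3f}")  # r = 0.80, p = 0.104
```

In R, `cor.test(x, y)` performs the same test directly.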

The effect of PeerWise grouping

A further analysis tested whether PeerWise grouping had an effect on assignment performance. We did this with a nested-model comparison, using PeerWise score and PeerWise group as predictors and the mean assignment score as the response variable. We used the lm function in R to fit two models: a superset model with both PeerWise score and PeerWise group as predictors, and a subset model with only PeerWise score as the predictor. The two models were compared with an ANOVA. Both PeerWise score and PeerWise group were significant predictors in the superset model, and adding PeerWise group made a significant improvement in prediction (p < 0.05*; see the table below for the nested-model output).

Analysis of Variance Table

Model 1: Assignment_Mean ~ PW_score + group

Model 2: Assignment_Mean ~ PW_score

| Model | Res.Df | RSS | Df | Sum of Sq | F | Pr(>F) |
|---|---|---|---|---|---|---|
| 1 | 28 | 2102.1 | | | | |
| 2 | 29 | 2460.3 | -1 | -358.21 | 4.7713 | 0.03747 * |

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1
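The F statistic in the nested-model table follows directly from the two residual sums of squares: F = ((RSS_subset − RSS_full)/(extra parameters)) / (RSS_full / Res.Df_full). The sketch below recomputes it from the printed (rounded) values as a check. Because the models differ by one parameter, F(1, 28) equals t² with 28 degrees of freedom, so the p-value reuses a numerically integrated t tail. The function names are hypothetical; R's `anova()` computes the p-value from the F distribution directly.

```python
import math


def t_two_sided_p(t: float, df: int, steps: int = 2000) -> float:
    """Two-sided p-value for Student's t, by Simpson's rule on the t density."""
    c = math.exp(math.lgamma((df + 1) / 2) - math.lgamma(df / 2)) / math.sqrt(df * math.pi)

    def density(x: float) -> float:
        return c * (1 + x * x / df) ** (-(df + 1) / 2)

    h = abs(t) / steps  # `steps` must be even for Simpson's rule
    area = density(0.0) + density(abs(t))
    for k in range(1, steps):
        area += (4 if k % 2 else 2) * density(k * h)
    return max(0.0, 1.0 - 2.0 * area * h / 3)


def nested_f_test(rss_full: float, df_full: int, rss_sub: float, df_sub: int) -> tuple[float, float]:
    """F-test of a full linear model against a nested subset model."""
    extra_df = df_sub - df_full  # number of parameters dropped from the full model
    if extra_df != 1:
        raise ValueError("this sketch only handles models differing by one parameter")
    # F = (extra RSS per dropped parameter) / (residual mean square of the full model)
    f = ((rss_sub - rss_full) / extra_df) / (rss_full / df_full)
    # With one numerator degree of freedom, F(1, df_full) is t squared on df_full d.f.
    p = t_two_sided_p(math.sqrt(f), df_full)
    return f, p


# Values printed in the ANOVA table (Model 1 = full, Model 2 = subset).
f, p = nested_f_test(rss_full=2102.1, df_full=28, rss_sub=2460.3, df_sub=29)
print(f"F = {f:.3f}, p = {p:.3f}")
```

This gives F ≈ 4.771 and p ≈ 0.037, matching the table up to the rounding of the printed RSS values.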

Conclusion for the project outcomes

The strong correlation found with the BA students in Term 2 (but not in Term 1) is likely to be due to the introduction of mixed-ability grouping. The group effect suggests that students within a group performed at a similar level, which implies group learning. This effect was found only with the BA students, not with the MA students. The difference could be attributed to the quality of the mixed-ability grouping: the BA (re)grouping was based on Term 1 performance, while the MA grouping was based on the impressions the TA had formed of the students in the first two weeks of Term 1. For both BA and MA students, the correlation and its significance generally increased slightly over the term, which might suggest that increasing use of the system improved their assignment grades over the term.

Together these findings suggest that mixed-ability grouping is key to peer learning.

Evaluation/reflection:

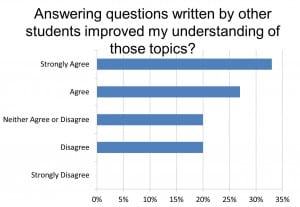

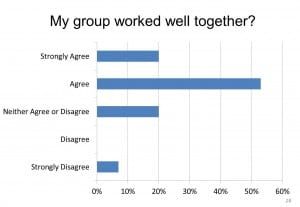

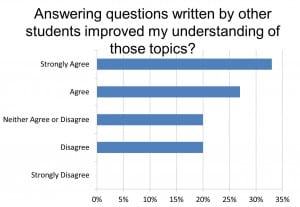

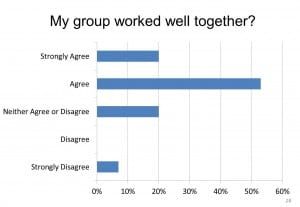

Students completed a questionnaire about their experience of our implementation of PeerWise. The feedback was positive on the whole, with a majority of students agreeing that:

- Developing original questions on course topics improved their understanding of those topics

- Answering questions written by other students improved their understanding of those topics.

- Their groups worked well together

These responses highlight the key concept behind PeerWise: peer learning.

How did the project enhance student learning?

Our objective statistical analyses together with the subjective feedback from the students themselves strongly indicated that the project enhanced student learning.

How did the outcomes compare with the original aims?

Enquiries to the PeerWise development team revealed that, unfortunately, PeerWise offers no accessibility options for users with, for example, poor sight. We suggested general ways for these students to enlarge the on-screen display, but this remains an issue, albeit one that lies mainly with the PeerWise development team.

PGTAs and course organisers attended the training provided and found it useful enough to adopt PeerWise as a component of their modules. The experienced PGTAs remained available for assistance, although the user guide answered most questions for new users. The graphs and tables above show some of the successes in students’ progress through modules using PeerWise.

One highly useful and partly unexpected outcome was the creation of a revision resource for students taking exams at the end of the module, built from the questions, answers, and explanations they submitted as part of their PeerWise participation. As explained above, students used this repository in their exam preparation. Further, because this collection is pitched at (indeed, written by) students, it provides a large, topic-organised ‘databank’ of questions, answers, and explanations for staff to use in subsequent years. Staff can also spot difficulties students have in creating and answering questions, as well as common misunderstandings, and address them during the module.

As described below, the dissemination of our experiences and of introductory information on using PeerWise was successful and well received.

How has the project developed your awareness, understanding, knowledge, or expertise in e-learning?

One important lesson is that peer learning through e-learning can be a highly effective way for students to learn, even with little regular, direct contact from PGTAs about their participation. The statistics above illustrate this.

However, although we deliberately took a ‘hands-off’ approach, we found it necessary to consider the aims of the modules, to understand the capabilities of PeerWise and its potential for integration with each module, and, importantly, to plan the whole module’s use of PeerWise in detail from the beginning. Introducing this type of e-learning system required such detailed investigation and planning so that students understood the requirements and how the system related to their module. Without explicit prior planning, and with students working in teams and remotely from PGTAs and staff (at least with regard to their PeerWise interaction), serious issues with the system and its use might not have been spotted or might have been difficult to counteract. Because of this planning, however, no serious problems arose in the system’s use.

As mentioned, because students worked in groups and remotely from staff, they might not readily tell us about issues they were having, so we dealt with any small comment immediately. One issue that arose was cooperation between group members; this required swift and definitive action, which was then communicated to all relevant parties. In particular, any misunderstandings about the requirements were dealt with quickly, with e-mails sent to all students, even if only one individual or group had expressed concern.

Dissemination – How will the project outputs or results be disseminated to the department, College or externally? Are there other departments which would find value in the project outputs or results?

During the implementation of PeerWise under the e-Learning Development Grant, a Moodle site for staff and PGTAs was created with the help of Stefanie Anyadi, Teaching and Learning Team Manager at PALS. It hosts presentations by other universities using PeerWise; a detailed report on the implementation at UCL (in the Department of Linguistics); a user guide for staff, PGTAs, and students; and a ‘package’ for easily setting up PeerWise for any module. To disseminate and publicise this information, Kevin Tang and Sam Green (the original PGTAs working with PeerWise) gave a talk introducing the system to staff within the Division of Psychology and Language Sciences. This talk was video recorded as a ‘lecturecast’ and is also available on the Moodle page. Subsequently, thanks to Dr Nick Grindle, we were invited to give an updated talk, focusing on peer feedback, as part of CALT’s “Summits and Horizons” lunchtime talks for those interested in teaching with e-learning; this was also video recorded.

The dissemination materials can be found here:

Scalability and sustainability – How will the project continue after the ELDG funding has ended? Might it be expanded to other areas of UCL?

The division-wide presentation mentioned above advertised the use and success of PeerWise to several interested parties, as did the CALT lunchtime talk. Because the experienced PGTAs have documented their experiences in detail, created a comprehensive user guide and presentations for students and new PeerWise administrators, and made all of this readily available to UCL staff and PGTAs, any other department can readily take up the system. Further, within the Department of Linguistics there are several ‘second-generation’ PGTAs who have learned PeerWise in detail and used it in their modules. These PGTAs will in turn pass the system on to the following year’s PGTAs, should PeerWise be used again, and will also be available to assist any new users. In sum, given the detailed information available, the current use of the system in the Department of Linguistics, and the keen uptake by staff in the department (especially given its positive results), it seems highly likely that PeerWise will continue to be used in several modules and will be taken up by others.

Acknowledgements
