Education neuroscience: giving teachers smarter information – not just tomorrow but today

By Blog Editor, IOE Digital, on 29 June 2018

Michael Thomas.

I could perhaps have been forgiven for viewing with some trepidation the invitation to address a gathering of artificial intelligence researchers at this week’s London Festival of Learning. At their last conference, they told me, they’d discussed my field – educational neuroscience – and come away sceptical.

They’d decided neuroscience was mainly good for dispelling myths – you know the kind of thing. Fish oil is the answer to all our problems. We all have different learning styles and should be taught accordingly. I’m not going to go into it again here, but if you want to know more you can visit my website.

The AI community sometimes sees educational neuroscience mainly as a nice source of new algorithms – facial recognition, data mining and so on.

But I came to see this invitation as an opportunity. There’s lots that’s positive to say about neuroscience and what it can do for teachers – both now, and in the future.

Let’s start with now. There are already lots of basic neuroscience findings that can be translated into classroom practice. Already, research is helping us see the way particular characteristics of learning stem from how the brain works.

Here’s a simple one: we know the brain has to sleep to consolidate knowledge. We know sleep is connected to the biology of the brain, and to the hippocampus, where episodic memories are stored. The hippocampus has a limited capacity – around 50,000 memories – and would fill up in about three months. During sleep we gradually transfer these memories out of the hippocampus and store them more permanently. We extract key themes from memories to add to our existing knowledge. We firm up new skills we’ve learnt in the day. That’s why it’s important to sleep well, particularly when we’re learning a skill. This is a simple fact that can inform any teacher’s practice.

And here’s another: we forget some things and not others – why? Why might I forget the capital of Hungary, when I can’t forget that I’m frightened of spiders? We now know the answer – phobias involve the amygdala, a part of the brain which operates as a threat detector. It responds to emotional rather than factual experiences, and it doesn’t forget – that’s why we deal with phobias by gradual desensitisation, over-writing the ‘knowledge’ held in the amygdala. Factual learning is stored elsewhere, in the cortex, and operates more on a ‘use it or lose it’ basis. Again, teachers who know this can see and respond to the different ways that children learn and understand different things.

And nowadays, using neuro-imaging techniques, we can actually see what’s going on in the brain when we’re doing certain tasks. So brain scans of people looking at pictures of faces, of animals, of tools and of buildings show different parts of the brain lighting up.

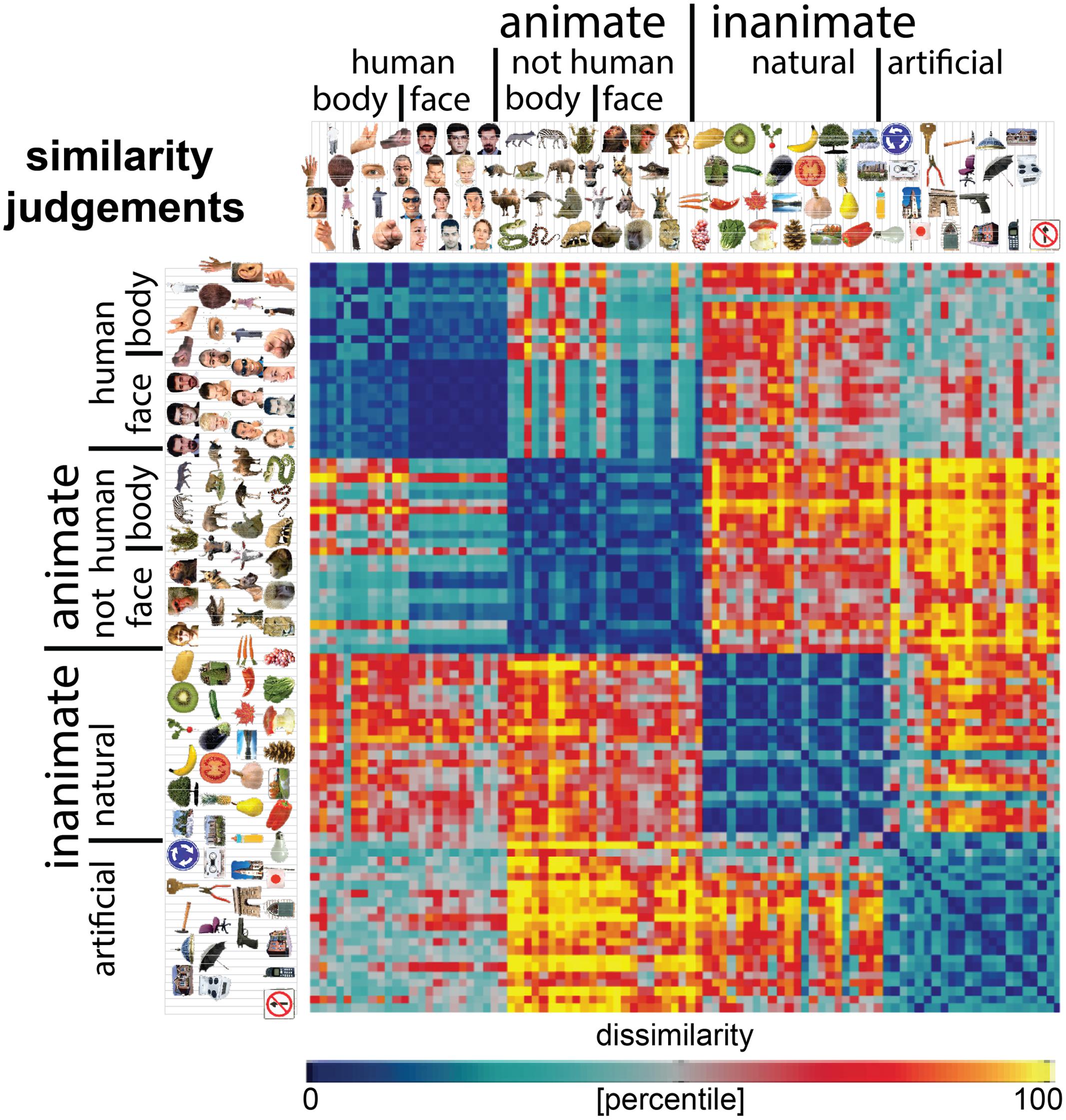

This figure represents human-rated similarity between pictures.

The visual system is a hierarchy, with a sequence of progressively higher levels of processing – the same layered arrangement found in so-called ‘deep’ neural networks. At the bottom level (an area called V1), brain activity reflects how visually similar the images are. It might respond similarly to a picture of a red car and a red flower, and differently to a picture of a blue car. Higher up the hierarchy (for example, in the inferior temporal region), brain activity reflects the categories the images belong to. Here, the patterns for a red car and a blue car will look more similar because both are cars, and different from the pattern for a red flower. This kind of hierarchical structure is now used in machine learning. It’s what Google’s software does – is it a kitten or a puppy? Is John in this picture?
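For readers who like to see the idea in code, here is a toy sketch of the red car, blue car and red flower example. It is only an illustration under stated assumptions: the feature numbers are invented, and the names `images`, `distance` and `most_similar` are hypothetical. It does not model real brain activity or any real image-recognition system.

```python
import math

# Hypothetical toy features; every number here is made up for illustration.
# "low":  average colour as (red, green, blue), standing in for early visual areas.
# "high": category as (car, flower), standing in for higher visual areas.
images = {
    "red car":    {"low": (0.9, 0.1, 0.1), "high": (1.0, 0.0)},
    "blue car":   {"low": (0.1, 0.1, 0.9), "high": (1.0, 0.0)},
    "red flower": {"low": (0.8, 0.2, 0.1), "high": (0.0, 1.0)},
}


def distance(a, b):
    """Euclidean distance between two feature vectors (smaller = more similar)."""
    return math.dist(a, b)


def most_similar(name, level):
    """Return the other image whose features at `level` are closest to `name`."""
    others = [other for other in images if other != name]
    return min(
        others,
        key=lambda other: distance(images[name][level], images[other][level]),
    )


print(most_similar("red car", "low"))   # red flower: the colours match
print(most_similar("red car", "high"))  # blue car: the categories match
```

The same three pictures are grouped differently depending on which level you look at, which is the point of the hierarchy described above.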

Again, teachers who know that different parts of the brain do different things can work with that knowledge. Brain science is already giving schools simple techniques – Paul Howard-Jones at the University of Bristol has devised a simple three-step cycle to understand how the brain learns, based on what we now know: Engage, Build, Consolidate.

But there’s more – by bringing together neuroscience and artificial intelligence, we can actually build machines which can do things we can’t do. At a certain level, the machines become more accurate at doing things than humans are – and they can assimilate far greater amounts of information in a much shorter time.

I can foresee a day – and in research terms it isn’t far off – when teachers will be helped by virtual classroom assistants. They’ll use big data techniques – for example, collecting data anonymously from huge samples of pupils so that any teacher can see how his or her pupils’ progress matches up.

In future, teachers might wear smart glasses so they can receive real-time information – which child is having a problem learning a particular technique, and why? Is it because he’s struggling to overcome an apparent contradiction with something he already knows – or has his attention simply wandered? Just such a system has been showcased at the London Festival of Learning this week, in fact.

Of course we have to think carefully about all this – particularly when it comes to data collection and privacy. But it’s possible that in future a machine will be able to read a child’s facial expression more accurately than a teacher can – is he anxious, puzzled, or just bored? Who’s being disruptive, who’s not applying certain rules in a group activity? Who’s a good leader?

This is not in any sense to replace teachers – it’s about giving them smarter information. Do you remember how you felt when your teacher turned to the blackboard with the words: ‘I’ve got eyes in the back of my head, you know?’ You didn’t believe it, did you? But in future, with a combination of neuroscience and computer science, we can make that fiction a reality.

Professor Michael Thomas is Director of the Centre for Educational Neuroscience, which is jointly run from Birkbeck, UCL Institute of Education and UCL.

Photo: neurons by Mike Seyfang via Creative Commons
