Vision for Art (VISART) Workshop for interdisciplinary work in Computer Vision and Digital Humanities

By Lucy Stagg, on 26 April 2022

The VISion for Art (VISART) workshop is an interdisciplinary workshop held every two years alongside the European Conference on Computer Vision (ECCV). Now in its 6th edition, the workshop has enjoyed great success in the ten years since it started in Florence (2012), with the 2022 edition taking place in Tel Aviv, Israel. This success has made VISART a staple venue for Computer Vision and Digital Art History & Humanities researchers alike. In line with its ambition to bring the disciplines closer together and to provide a venue for interdisciplinary communication, the workshop has, since 2018, offered two tracks: one for the technological development of computer vision techniques applied to the arts, and one for reflection on their use. The two tracks are:

1. Computer Vision for Art – technical work (standard ECCV submission, 14 pages excluding references)

2. Uses and Reflection of Computer Vision for Art (Extended abstract, 4 pages, excluding references)

Full details are available at the workshop website: https://visarts.eu

Keynotes

In addition to the technical work presented, the workshop regularly attracts keynote speakers who bridge the disciplines, including (but not limited to):

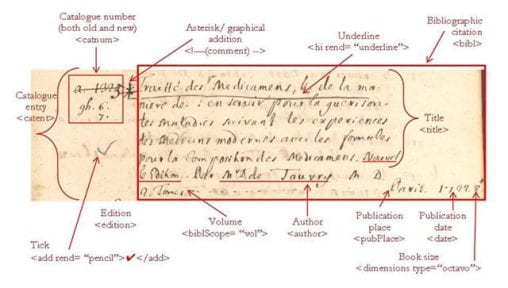

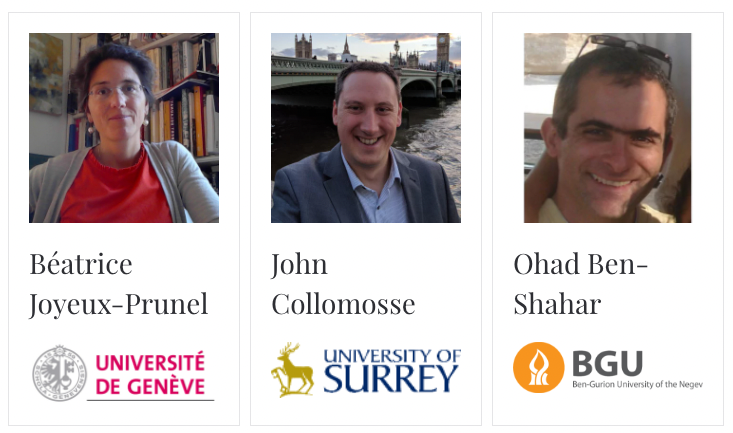

Keynote speakers from across the years of VISART (images from public institutional profiles as of 2022, with current affiliation logos).

The inclusion of such a varied collection of keynote speakers has fostered fruitful discussion on the use of technology to investigate visual content, ranging from its style and perception (Aaron Hertzmann, Adobe) to how the "hard humanities" field of computer image analysis of art is changing our understanding of paintings and drawings (David G. Stork).

VISART VI Keynotes

The VISART VI (2022) workshop continues this tradition of high-profile keynotes, adding Prof Béatrice Joyeux-Prunel of the University of Geneva and Prof Ohad Ben-Shahar of Ben-Gurion University to the list and welcoming back Prof John Collomosse of the University of Surrey.

Prof Béatrice Joyeux-Prunel

Béatrice Joyeux-Prunel is a Full Professor at the University of Geneva in Switzerland (Faculté de Lettres – School of Humanities), where she holds the chair of Digital Humanities. From 2007 to 2019 she was Associate Professor (maître de conférences) in modern and contemporary art at the École normale supérieure in Paris, France (ENS, PSL). She is an alumna of ENS (class of 1996, Social Sciences and Humanities) and obtained the Agrégation in History and Geography in 1999. She defended her PhD in 2005 at the Université Paris 1 Panthéon-Sorbonne and her Habilitation at Sciences Po Paris in 2015. Joyeux-Prunel's research encompasses the history of visual globalisation, the global history of the avant-gardes, digital technologies in contemporary art, and the digital turn in the Humanities. In 2009 she founded the Artl@s project on modern and contemporary art globalisation ([https://artlas.huma-num.fr](https://artlas.huma-num.fr/)), which she has managed ever since, and she co-edits the open access journal Artlas Bulletin. In 2016 she founded Postdigital ([www.postdigital.ens.fr](http://www.postdigital.ens.fr)), a research project on digital cultures and imagination. Since 2019 she has led the European Jean Monnet Excellence Center IMAGO, an international center for the study and teaching of visual globalisation. At the University of Geneva she directs the SNF project Visual Contagions ([https://visualcontagions.unige.ch](https://visualcontagions.unige.ch/)), a four-year research project on images in globalisation that uses computer vision techniques to trace the global circulation of images in printed material over the 20th century.

Prof John Collomosse

John Collomosse is a Principal Scientist at Adobe Research, where he leads the deep learning group. John's research focuses on representation learning for creative visual search (e.g. sketch-, style- and pose-based search) and for robust image fingerprinting and attribution. He is a part-time full professor at the Centre for Vision, Speech and Signal Processing (CVSSP), University of Surrey (UK), where he founded and co-directs DECaDE, a multi-disciplinary research centre exploring the intersection of AI and Distributed Ledger Technology. John is part of the Adobe-led Content Authenticity Initiative (CAI) and a contributor to the technical working group of the C2PA open standard for digital provenance. He sits on the ICT and Digital Economy advisory boards of the UK research council EPSRC.

Prof Ohad Ben-Shahar

Ohad Ben-Shahar is a Professor in the Computer Science department at Ben-Gurion University (BGU), Israel. He received his B.Sc. and M.Sc. in Computer Science from the Technion (Israel Institute of Technology) in 1989 and 1996, respectively, and his M.Phil. and PhD from Yale University, CT, USA in 1999 and 2003, respectively. He is a former chair of the Computer Science department and the present head of the School of Brain Sciences and Cognition at BGU. Prof Ben-Shahar's research focuses on computational vision, with interests that span all aspects of theoretical, experimental, and applied vision sciences and their relationship to cognitive science as a whole. He is the founding director of the interdisciplinary Computational Vision Laboratory (iCVL), where research involves theoretical computational vision, human perception and visual psychophysics, visual computational neuroscience, animal vision, applied computer vision, and (often biologically inspired) robot vision. He is a principal investigator in numerous research activities, from basic research projects in animal vision through to applied computer vision, data science, and robotics consortia, many of them funded by agencies such as the ISF, NSF, DFG, the National Institute for Psychobiology, the Israel Innovation Authority, and European frameworks such as FP7 and Horizon 2020.

Call for Papers

The workshop calls for papers on topics including (but not limited to):

- Art History and Computer Vision

- 3D reconstruction from visual art or historical sites

- Multi-modal multimedia systems and human-machine interaction

- Visual Question Answering (VQA) or Captioning for Art

- Computer Vision and cultural heritage

- Big-data analysis of art

- Security and legal issues in the digital presentation and distribution of cultural information

- Image and visual representation in art

- 2D and 3D human pose and gesture estimation in art

- Multimedia databases and digital libraries for artistic research

- Interactive 3D media and immersive AR/VR for cultural heritage

- Approaches for generative art

- Media content analysis and search

- Surveillance and behaviour analysis in Galleries, Libraries, Archives and Museums

Deadlines & Submissions

- Full & Extended Abstract Paper Submission: 27th May 2022 (23:59 UTC-0)

- Notification of Acceptance: 30th June 2022

- Camera-Ready Paper Due: 12th July 2022

- Workshop: TBA (23rd–27th October 2022)

- Submission site: https://cmt3.research.microsoft.com/VISART2022/

Organisers

The VISART VI 2022 edition of the workshop has been organised by:

- Alessio Del Bue, Istituto Italiano di Tecnologia (IIT)

- Peter Bell, Philipps-Universität Marburg

- Leonardo Impett, University of Cambridge

- Noa Garcia, Osaka University

- Stuart James, Istituto Italiano di Tecnologia (IIT) & University College London Centre for Digital Humanities (UCL DH)

VISART VI 2022 organisers