The art of unseeing

By Oli Usher, on 16 February 2015

While astronomers expend a lot of effort trying to see things better – building ever more powerful telescopes that can detect even the faintest, most distant objects – they are occasionally faced with the opposite problem: how to unsee things that they don’t want to see.

A group of scientists led by UCL’s Emma Chapman is working on methods to solve this problem for a new radiotelescope that is currently under development. In so doing, they could help give us our first pictures of a crucial early phase in cosmic history.

How to avoid seeing things you don’t want to see is a particular problem for cosmologists – the scientists who study the most distant parts of the Universe. Many of the objects and phenomena that interest them are faint, and lie hidden beyond billions of light years of gas, dust and galaxies. To make matters worse, telescopes are flawed too: the data from them is never perfectly clean. That is manageable when you’re looking at relatively bright and clear objects, but a serious problem when you’re looking for the faint signature of something very far away.

Observing these phenomena is much like looking at a distant mountain range through a combination of a filthy window, a chain-link fence, some rain, clouds… and a scratched pair of glasses. In other words, you’re unlikely to see very much at all, unless you can somehow find a way to filter out all the things in the foreground.

***

The Square Kilometre Array (SKA) is a new radiotelescope, soon to begin construction in South Africa and Australia. The SKA will use hundreds of thousands of interconnected radio telescopes spread across Africa and Australia to monitor the sky in unprecedented detail and survey it thousands of times faster than any current system.

Artist’s impression of the South African site of the Square Kilometre Array. Credit: SKA Organisation (CC BY)

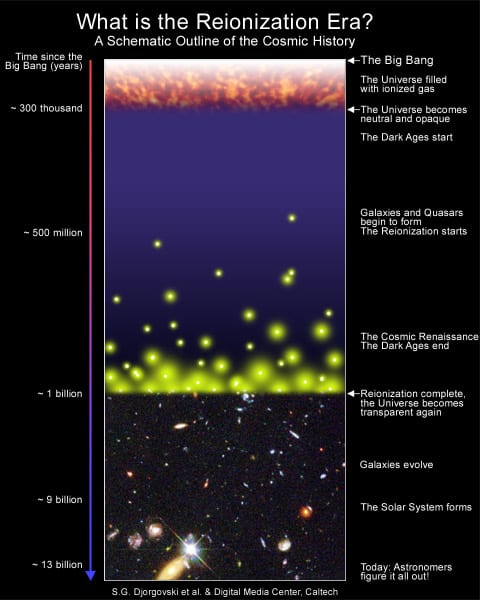

One of its objectives is to make the first direct observations of a brief phase of a few hundred million years in cosmic history known as the ‘era of reionisation’. This technical term conceals something quite dramatic: a profound and relatively sudden transformation of the whole Universe, which left the space between galaxies as transparent to light as it is today.

In the Big Bang, all the matter and energy in the universe came into being. The matter was a hot, thick and opaque gas overwhelmingly made up of protons and electrons, with a smaller amount of helium and lithium nuclei (which are in turn made of protons and neutrons).

The hot gas was an ionised plasma. In ordinary atoms and molecules, the negatively charged electrons are bound in orbits around the positively charged nuclei; in a plasma, there is enough energy for the electrons to avoid capture and travel freely. (Ionised plasmas are not particularly exotic – we see them in everyday life whenever gases are energised, for instance in lightning bolts, the gas inside energy-saving light bulbs and the electric arc of a welder.)

Around 300,000 years after the Big Bang, the Universe had cooled enough for the protons, electrons and nuclei to come together, and it changed from a plasma into a normal gas. Like super-cooled water suddenly turning to ice, the electrons fell into orbits around the protons to form hydrogen atoms, and around the helium and lithium nuclei to form helium and lithium atoms.

The Universe was now transparent to most wavelengths of light, but, aside from the fast-fading afterglow of the Big Bang, there was no light to see. The tiny fluctuations in density present since the Big Bang were gradually making the distribution of gas in the Universe more uneven, but nowhere had the gas yet collapsed into balls of matter dense enough to trigger nuclear fusion – or stars, as they are better known.

Over the billion years that followed, the first stars formed, and the stars came together into ever-growing galaxies, but the Universe was still a dramatically different place from how it is now. It was much smaller – it has expanded for a further 13 billion years since then. The first stars were bigger, brighter and chemically less complex than those that dominate the Milky Way. The galaxies were small and irregular, and had yet to grow into the majestic spiral galaxies of today.

Finally, the properties of the incredibly thin gas that lay between galaxies – known to astronomers as the intergalactic medium – were different from those we see today. The atoms of hydrogen, helium and lithium formed 300,000 years after the Big Bang still dominated intergalactic space.

But not for long: the energy radiated by the first stars, galaxies and quasars coaxed the electrons in these atoms back out of their orbits, turning the intergalactic medium back into an ionised plasma. This was another profound, universe-wide change. Unlike the plasma just after the Big Bang, this plasma was thin enough to be transparent to light.

Around each source of radiation, bubbles of plasma grew, joining together into a Swiss cheese-like structure of vast cavities, before fully clearing the fog of non-ionised gas. This transformation, which occurred over a few hundred million years, produced the fully transparent Universe we see today.

Astronomers know that this process happened, and they have been able to detect the presence of the non-ionised gas in the early Universe, but no telescope has yet had the sensitivity or the resolution to see the Swiss cheese pattern itself.

Being able to see back to this era would be a scientific triumph, and would tell us more about the conditions present during the Universe’s infancy. It might also give clues as to the main source of this radiation: starlight, or emissions from quasars – exceptionally bright discs of matter surrounding the supermassive black holes at the centres of galaxies. That question remains open at present, and answering it would tell us about the composition of the Universe at that early time.

***

Astronomers’ long wait to see this ‘Swiss cheese’ should soon be over. The SKA telescope will be powerful enough to see back to this period in cosmic history, and thanks to a peculiarity of the light coming from the Swiss cheese, it should even be able to make a movie of its evolution over time.

Non-ionised hydrogen gas emits radiation at a very specific wavelength, produced when the spin of the electron in a hydrogen atom flips relative to the spin of its proton. When first emitted, this radiation has a wavelength of 21cm, but as it travels through space and the Universe expands, the wavelength is gradually stretched. By tuning in to progressively longer wavelengths, a radiotelescope can trace hydrogen gas at progressively greater distances, and therefore further back in time.
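The stretching described above follows a simple rule: radiation emitted at redshift z arrives with its wavelength multiplied by (1 + z). The short Python sketch below applies that rule to the 21cm line. The rest-frame wavelength and frequency are the standard values for hydrogen; the sample redshifts (roughly 6 to 12) are an illustrative assumption for the era of reionisation, not figures from this article.

```python
# Rest-frame values of the hydrogen 21cm line.
REST_WAVELENGTH_CM = 21.106
REST_FREQUENCY_MHZ = 1420.405751768


def observed_wavelength_cm(z: float) -> float:
    """Wavelength (cm) at which 21cm radiation emitted at redshift z reaches us today."""
    return REST_WAVELENGTH_CM * (1.0 + z)


def observed_frequency_mhz(z: float) -> float:
    """Frequency (MHz) at which the same radiation arrives; it falls as the wavelength grows."""
    return REST_FREQUENCY_MHZ / (1.0 + z)


def redshift_from_frequency(freq_mhz: float) -> float:
    """Invert the relation: which redshift (and so which epoch) a given tuning frequency probes."""
    return REST_FREQUENCY_MHZ / freq_mhz - 1.0


if __name__ == "__main__":
    # Illustrative redshifts only; larger z means more distant and further back in time.
    for z in (6, 8, 10, 12):
        print(f"z = {z:2d}: wavelength {observed_wavelength_cm(z):6.1f} cm, "
              f"frequency {observed_frequency_mhz(z):6.1f} MHz")
```

Under this relation, tuning a receiver to about 158 MHz, for example, picks out hydrogen at redshift 8.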

But first, scientists need a reliable way to filter out everything in the foreground. This is hard enough in visible light: look anywhere in the sky with a sensitive enough telescope, and you’ll find vast numbers of galaxies between us and the edge of the visible Universe.

A several-week-long exposure of a completely black patch of sky, around a tenth of the diameter of the Moon across, reveals over 10,000 galaxies in this Hubble image. If you are looking to the edge of the observable Universe, there is always something else in the foreground. Credit: NASA (public domain)

At radio wavelengths, the problem is even worse, partly because radiotelescopes do not produce as clear a signal as optical telescopes do, and partly because more things emit at radio wavelengths or interfere with them. (Astronomers have even had to develop ways of dealing with cows in fields next to their radiotelescopes dampening their observations.)

The team has been working on how to clean up the signal from SKA so that the faint traces of the early Universe can be seen despite the vast amount of irrelevant data in the foreground.

***

SKA will not produce pictures the way optical telescopes do. Instead, for every piece of sky, it will provide a breakdown of the wavelengths that contribute to the signal: essentially a graph showing how intense the contribution from each wavelength is. (This information can later be processed into an image.)

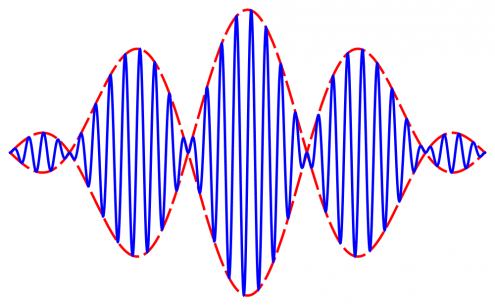

This image shows how a wave packet can be broken down into two different scales: one in blue, one in red. The SKA would render this signal as a simple bar chart, with a red bar showing the contribution of the red wave and a blue bar showing the contribution of the blue wave.
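As a toy analogue of the figure, the sketch below builds a signal from two sine waves of different scales and uses a discrete Fourier transform (NumPy’s `np.fft.rfft`) to recover one ‘bar’ per wave. It only illustrates the idea of a breakdown by scale; the wave sizes are made up, and this is not a description of how the SKA actually processes its data.

```python
import numpy as np

N = 256
x = np.arange(N) / N                       # one stretch of "sky", sampled N times
red = 1.0 * np.sin(2 * np.pi * 3 * x)      # long-wavelength component: 3 cycles
blue = 0.5 * np.sin(2 * np.pi * 11 * x)    # short-wavelength component: 11 cycles
signal = red + blue

spectrum = np.fft.rfft(signal)             # break the signal down by scale
amplitude = 2 * np.abs(spectrum) / N       # height of the "bar" for each scale

# Keep only the bars that are clearly non-zero: one per wave in the signal.
bars = {k: round(float(a), 3) for k, a in enumerate(amplitude) if a > 1e-6}
print(bars)  # {3: 1.0, 11: 0.5}
```

The bar heights recover the amplitudes of the two waves exactly here because each wave fits a whole number of cycles into the sampled range; real data are rarely that tidy.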

The question astronomers are trying to answer is: faced with this breakdown of wavelengths, how can we tell which ones to discard, and which ones are genuinely part of the signal coming from the 21cm radiation?

This is similar to looking at an audio recording of a band on an oscilloscope and trying to work out which part of the sound came from the drummer rather than from the guitars.

You could try to isolate the drums based on the shape of their waveforms: removing the comparatively smooth sound of the guitars would leave only the jagged waveforms of the drums.

You could look at how complex the sounds of the different instruments are, for instance the timbre and harmonics in their tone, and use that to try to separate the (relatively simple) waveforms of the guitars from the more complex ones of the drums.

You could even simply discard the parts of the waveforms that correspond to the notes played by the guitars, leaving only the drums – though in the process, you would probably also discard some of the sound coming from the drummer.

For Emma Chapman and her team, isolating the signal from the early universe is much the same. Their recently published study shows how effective various methods are at discarding the unwanted data. The methods they explore involve varying amounts of educated guesswork – and have varying results – but in practice, all are likely to be used in combination once the SKA is actually in use.

The method with the most guesswork is also the simplest. The foreground data that the scientists are trying to eliminate is generally smoother than the jagged distribution from the Swiss cheese pattern, so the method is simply to pick a smooth mathematical curve that follows the outline of the data well. Subtract that curve, and you are left with only the much weaker spikes overlaid on it. These spikes are the contribution to the signal from the distant Swiss cheese structure.
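A minimal sketch of this smooth-curve subtraction, assuming a toy line of sight in which a bright power-law foreground is added to faint random fluctuations standing in for the Swiss cheese signal. The choice of a polynomial in log-frequency, its degree, the frequency band and all the numbers are hypothetical illustrations, not the team’s actual settings.

```python
import numpy as np

rng = np.random.default_rng(42)
freqs = np.linspace(120.0, 180.0, 200)           # MHz; illustrative band, not an SKA specification

# Hypothetical toy data for one patch of sky.
foreground = 1000.0 * (freqs / 150.0) ** -2.6    # bright and smooth
signal = 0.5 * rng.standard_normal(freqs.size)   # faint and jagged
observed = foreground + signal


def subtract_smooth_fit(freqs: np.ndarray, data: np.ndarray, degree: int = 5) -> np.ndarray:
    """Fit a low-degree polynomial in log-frequency and return the data minus that smooth curve."""
    x = np.log(freqs)
    smooth = np.polynomial.Polynomial.fit(x, data, degree)  # least-squares fit
    return data - smooth(x)


residual = subtract_smooth_fit(freqs, observed)
corr = np.corrcoef(residual, signal)[0, 1]
print(f"correlation between recovered and true signal: {corr:.3f}")
```

With these toy numbers the correlation should come out close to 1. Lowering `degree` leaves some of the foreground’s curvature behind, while raising it too far starts to absorb part of the faint signal; choosing the curve is where the guesswork comes in.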

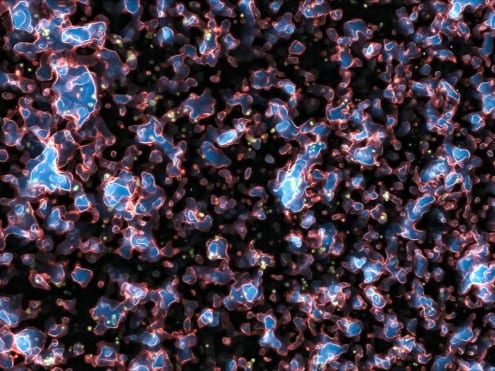

The pockets of ionised gas form a ‘Swiss cheese’ structure. The signal coming from it has different properties from the galaxies in the foreground, but separating the two is a challenge. Credit: ESO, M. Alvarez, R. Kaehler, and T. Abel (CC BY)

Using a bit less guesswork, you can again assume that the foreground data is described by a smooth curve, but instead of picking it yourself, get a computer to derive the most plausible one. You can also modify this approach: rather than insisting on a smooth curve, you can tell the computer to favour smooth functions, but only as smooth as makes sense for the data. This removes a little more of the data, leaving only the most uneven spikes in intensity.

The team also looked at a few approaches which did not rely on the smoothness of the signal coming from objects in the foreground.

One method is to simply discard parts of the spectrum which are known to be associated with the foreground objects (even if there are also background signals there), leaving only part of the signal. The advantage here is that the parts of the spectrum you are left with are definitely clean and uncontaminated – but the drawback is that you have to discard a lot of good data along with the bad.
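The trade-off of this approach can be shown with the bars of a hypothetical chart. In the sketch below the foreground is assumed to be confined entirely to the lowest five modes, an idealisation chosen purely for illustration: discarding those modes leaves only clean signal, but also throws away the part of the signal that shared them.

```python
import numpy as np

k = np.arange(1, 21)                            # hypothetical mode index: one "bar" per scale
signal_power = np.full(k.size, 1.0)             # faint signal, spread evenly over all modes
foreground_power = np.where(k <= 5, 1e4, 0.0)   # bright foreground, assumed confined to low modes
total = signal_power + foreground_power

K_MIN = 6                                       # assumed edge of the contaminated region
keep = k >= K_MIN                               # discard everything the foreground could touch
clean = total[keep]

print(np.allclose(clean, signal_power[keep]))          # True: what is left is uncontaminated
print(signal_power[keep].sum() / signal_power.sum())   # 0.75: a quarter of the signal is lost
```

In real data the contaminated region is not known this precisely, so the boundary is usually drawn generously, which discards even more good data.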

Finally, you can recover much of the background data without having to assume that the foreground data is smooth. Instead, you can look at the complexity of the data. Foreground objects, as well as generally having a smooth distribution of wavelengths associated with them, are also quite simple: they are made up of a small number of component scales.

The signal from the era of reionisation is complex, and made up of a large number of wavelengths. This means that an algorithm that filters out and deletes any simple signal – regardless of its smoothness – will remove the foreground and reveal the background. (This is much like comparing the pure, clean sound of a clarinet with the complex, muffled sound of a kettle drum that barely sounds like a single tone.)
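The complexity idea can be sketched with principal component analysis (PCA), used here as a simple, well-known stand-in; it is not necessarily one of the algorithms the team tested. The toy data assumes that the foreground in every patch of sky is a mix of just two spectral shapes, while the signal is independent, noise-like fluctuation. Removing the two strongest components, found with a singular value decomposition, strips out the simple foreground without assuming anything about its smoothness.

```python
import numpy as np

rng = np.random.default_rng(0)
n_pix, n_freq = 500, 100                         # patches of sky x frequency channels (toy sizes)
freqs = np.linspace(120.0, 180.0, n_freq)        # MHz; illustrative band

# Hypothetical foreground: every patch is a different mix of the same two spectral shapes,
# so the whole foreground is "simple" (it has only two components).
shapes = np.stack([(freqs / 150.0) ** -2.5, (freqs / 150.0) ** -2.1])
mix = rng.uniform(100.0, 1000.0, size=(n_pix, 2))
foreground = mix @ shapes                        # shape (n_pix, n_freq)

# Hypothetical "complex" signal: independent faint fluctuations everywhere.
signal = rng.standard_normal((n_pix, n_freq))
data = foreground + signal


def remove_components(data: np.ndarray, n_components: int) -> np.ndarray:
    """Subtract the n strongest principal components (shared spectral shapes) from the data."""
    centred = data - data.mean(axis=0)           # remove the average spectrum
    _, _, vt = np.linalg.svd(centred, full_matrices=False)
    top = vt[:n_components]                      # dominant spectral shapes, one per row
    return centred - centred @ top.T @ top       # project them out


residual = remove_components(data, n_components=2)
true_signal = signal - signal.mean(axis=0)       # the signal after the same mean removal
corr = np.corrcoef(residual.ravel(), true_signal.ravel())[0, 1]
print(f"correlation between recovered and true signal: {corr:.3f}")
```

The number of components to remove (`n_components`) is the key judgement: too few leaves foreground behind, too many starts to eat into the signal.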

This method, the team says, is particularly promising.

Chapman’s team tested the effectiveness of these methods using a procedure similar to the blind trials used in medicine. They created a fake signal, comprising both a simulated image of the Swiss-cheese structure of the era of reionisation and the contamination caused by foreground objects. They then asked different scientists, who had not seen the simulated image, to try out the different methods of recovering the background signal.

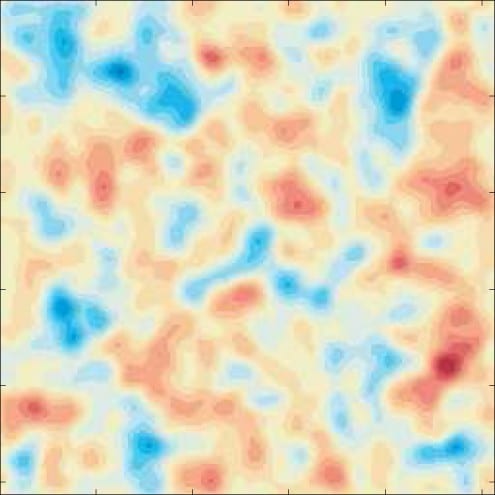

A simulated signal representing the Era of Reionisation ‘Swiss cheese’, used in the study. Credit: Chapman et al, Proceedings of Science (CC BY NC SA)

The good news is that all the methods work quite well. When astronomers are recovering data which is just at the edge of what a telescope is capable of, their methods are never foolproof. Any one method can always throw up odd results. So the fact that they all broadly agree means that the methods can all be used in parallel – and if they all show the same thing, the scientists can be confident that the things they are observing are real, rather than a mirage caused by their instruments or methods.

The first phase of the SKA is expected to come into operation in 2020, and it will rely on sophisticated data processing techniques such as these to make sense of its data. Cutting-edge technology will give the telescope immense power to see previously invisible phenomena. But astronomers sometimes also need to learn how to unsee.
