Was Fermentation Key to Yeast Diversification?

By Claire Asher, on 17 February 2015

From bread to beer, yeast has shaped our diets and our recreation for centuries. Recent research in GEE shows how humans have shaped the evolution of this important microorganism. As well as revealing the evolutionary origins of modern fission yeast, the new study published in Nature Genetics this month shows how techniques developed for detecting genetic causes of disease in humans can be usefully applied to better understand the ecology, biochemistry and evolution of commercially and scientifically important microorganisms like yeast.

Fission yeast, Schizosaccharomyces pombe, is one of the principal ‘model’ species that cell biologists use to understand the inner workings of cells. Most famously, Paul Nurse used this yeast to discover the genes that control cell division. The laboratory strain was first isolated from French wine in 1924, and has been used ever since by an increasingly large community of fission yeast researchers. Meanwhile, new strains have continued to be collected, slowly and often serendipitously, and many of these are associated with human fermentation processes: different strains have been isolated from Sicilian vineyards, from the Brazilian sugarcane spirit cachaça and from the fermented tea kombucha. Despite its enormous scientific importance, little is known about the ecology and evolution of fission yeast.

Research published this month by Professor Jürg Bähler, Dr Daniel Jeffares and colleagues from UCL’s department of Genetics, Evolution and Environment, along with researchers from 10 other institutions across five countries, reveals an intimate link between historic dispersal and diversification in yeast and our love of fermented food and drinks. The project sequenced the genomes of 161 strains of fission yeast, isolated in 20 countries over the last 100 years, enabling the researchers to reconstruct the evolutionary history of S. pombe, as well as investigating genetic and phenotypic variation within and between strains.

Beer, Wine and Colonialism

Bähler and Jeffares were able to date the diversification and dispersal of S. pombe to around 2,300 years ago, coinciding with the early distribution of fermented drinks such as beer and wine. Strains from the Americas were most similar to each other, and dated to around 1600 years ago, most likely carried across the Atlantic in fermented products by European colonists. This is reminiscent of findings for the common bread and beer yeast, Saccharomyces cerevisiae, whose global dispersal is thought to date to around 10,000 years ago, coinciding with Neolithic population expansions. This research therefore reveals an intimate link between the human use of yeast for fermentation and its evolutionary diversification, and highlights the power of humans to shape the lives of the organisms with which they interact.

From Genotype to Phenotype

The researchers also used genome-wide association techniques to investigate the relationship between genotype and phenotype in the different strains. They began by carefully measuring 74 different traits in representatives of each strain. Some traits were simple, such as cell size and shape, but the researchers also measured genotype-environment interactions, for example by investigating growth rates and population sizes under different nutrient availabilities, drug treatments and other environmental variables. In total, this yielded 223 different phenotypes, most of which were heritable to some extent. Furthermore, relatively few of the phenotypes were strongly linked to a particular population or region, which makes fission yeast well suited to genome-wide association studies (GWAS), since strong population structure can produce spurious associations. This sets it apart from Saccharomyces cerevisiae, for which it has not been possible to use GWAS successfully.

GWAS was developed to identify genes linked to specific diseases in humans; however, this study highlights how the technique can usefully be applied to understanding evolution and genotype-phenotype relationships in other organisms. The tightly controlled experimental conditions that can be achieved with microorganisms in the laboratory make GWAS both possible and informative for organisms such as yeast. The researchers found 89 traits that were significantly associated with at least one genetic variant; the strongest association explained about a quarter of the variation between individuals.
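
At its core, a GWAS scan tests each genetic variant in turn for association with a trait, and "explained about a quarter of variation" refers to the share of trait variance (R²) attributable to a variant. The sketch below shows that per-variant step under stated assumptions: strains are treated as haploid and scored 0 or 1 at each variant, and the strain data, variant names and the functions `variance_explained` and `scan` are hypothetical. Real GWAS pipelines typically also correct for population structure (for example with linear mixed models) and for multiple testing, which this sketch omits.

```python
from statistics import mean


def variance_explained(genotypes, trait):
    """Return R^2 from a simple linear regression of trait on genotype.

    genotypes: 0/1 allele calls, one per strain (haploid assumption).
    trait: measured trait values for the same strains, in the same order.
    """
    gx, ty = mean(genotypes), mean(trait)
    sxy = sum((g - gx) * (t - ty) for g, t in zip(genotypes, trait))
    sxx = sum((g - gx) ** 2 for g in genotypes)
    syy = sum((t - ty) ** 2 for t in trait)
    if sxx == 0 or syy == 0:
        return 0.0  # monomorphic variant or constant trait: nothing to explain
    return sxy * sxy / (sxx * syy)  # squared Pearson correlation equals regression R^2


def scan(variants, trait):
    """Rank (hypothetical) variants by the fraction of trait variance each explains."""
    scores = {name: variance_explained(g, trait) for name, g in variants.items()}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical data: 8 strains, one growth-rate measurement each.
trait = [1.0, 1.2, 0.9, 1.1, 1.6, 1.5, 1.7, 1.4]
variants = {
    "var_A": [0, 0, 0, 0, 1, 1, 1, 1],
    "var_B": [0, 1, 0, 1, 0, 1, 0, 1],
    "var_C": [1, 1, 1, 1, 1, 1, 1, 1],
}
for name, r2 in scan(variants, trait):
    print(f"{name}: R^2 = {r2:.2f}")
```

In this toy data, var_A cleanly separates slow- from fast-growing strains and so explains most of the variance, while var_B is uninformative and var_C does not vary at all.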

Hallmarks of Selection

Looking at variation in genomic sequence between strains also allowed the researchers to investigate which parts of the genome have undergone more evolutionary change than others, and which regions are likely to be particularly important for function. Genes and genomic regions that are crucial to survival (such as those involved in basic cellular functions) tend to change relatively little over evolutionary time, because most mutations in their sequence would be severely detrimental. A process known as purifying selection tends to keep these genetic sequences the same over long stretches of evolutionary time. Less crucial genetic sequences have more freedom to change without serious consequences; they are not subject to strong purifying selection and tend to show more variation between individuals and populations.

The authors found that genetic variation between strains was lowest in protein-coding gene sequences (those that produce protein products such as hormones and enzymes), which is to be expected. However, variation was also low in non-coding regions near genes. These regions are thought to be important in gene regulation, echoing an increasing appreciation that the evolution of gene regulation may be as important as, if not more important than, the evolution of the gene sequences themselves.
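
A common way to compare how much variation different classes of genomic region carry is nucleotide diversity (π), the average number of differences per site between pairs of sequences. The minimal sketch below computes π separately for two segments of a toy alignment. The sequences and the split into a "coding" segment and a non-coding segment are hypothetical, and the study's actual statistics and pipeline may differ.

```python
from itertools import combinations


def nucleotide_diversity(seqs):
    """Mean pairwise differences per site (pi) for equal-length aligned sequences."""
    length = len(seqs[0])
    if any(len(s) != length for s in seqs):
        raise ValueError("sequences must be aligned to the same length")
    pairs = list(combinations(seqs, 2))
    diffs = sum(sum(a != b for a, b in zip(s1, s2)) for s1, s2 in pairs)
    return diffs / (len(pairs) * length)


# Hypothetical alignment of four strains: columns 0-9 stand in for a coding
# sequence and columns 10-19 for a non-coding (intergenic) stretch.
strains = [
    "ATGGCTAAGTCCGTTAGCAT",
    "ATGGCTAAGTACGATAGCTT",
    "ATGGCTAAGTCCGTAAGGAT",
    "ATGGCAAAGTTCCTTAGCAA",
]
coding = [s[:10] for s in strains]
noncoding = [s[10:] for s in strains]
print(f"pi (coding):     {nucleotide_diversity(coding):.3f}")
print(f"pi (non-coding): {nucleotide_diversity(noncoding):.3f}")
```

Under purifying selection one would expect the constrained segment to show the lower π, as the coding segment does in this invented example.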

This ground-breaking research from GEE reveals fascinating insights into the ecology and evolution of fission yeast, a microorganism that directly or indirectly influences our lives on a daily basis. It highlights how important humans have been in shaping the genomes of commercially and scientifically important organisms, whilst also expanding our knowledge of genes, genomes and phenotypes more generally. Applying techniques such as these to a wider range of organisms has the potential to vastly increase our understanding of the genomic dynamics of evolutionary change.

Original Article:

This research was made possible by funding from the Wellcome Trust, the European Research Council (ERC), the Biotechnology and Biological Sciences Research Council (BBSRC), the UK Medical Research Council (MRC), Cancer Research UK, the Czech Science Foundation and Charles University.

Planning for the Future – Resilience to Extreme Weather

By Claire Asher, on 15 January 2015

As climate change progresses, extreme weather events are set to increase in frequency, costing billions and causing immeasurable harm to lives and livelihoods. GEE’s Professor Georgina Mace contributed to the recent Royal Society report on “Resilience to Extreme Weather”, which predicts the future impacts of increasing extreme weather events, and evaluates potential strategies for improving our ability to survive, even thrive, despite them.

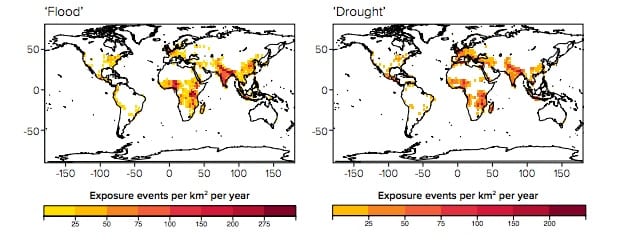

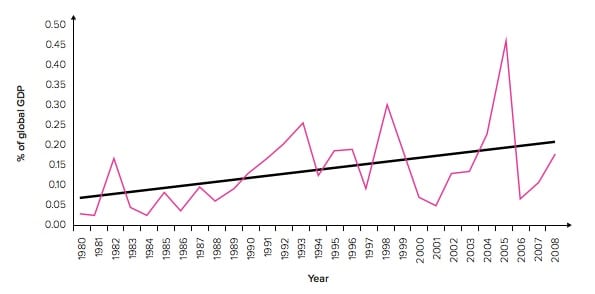

Extreme weather events, such as floods, droughts and hurricanes, are predicted to increase in frequency and severity as the climate warms, and there is some evidence this is happening already. These extreme events come at a considerable cost, both to people’s lives, health and wellbeing and to the economy. Between 1980 and 2004, extreme weather is estimated to have cost around US$1.5 trillion, and costs are rising. A recent report by the Royal Society reviews the future risks of extreme weather and the measures we can take to improve our resilience.

The global insured and uninsured economic losses from the two biggest categories of weather-related extreme events. Royal Society (2014)

Disaster risk is a combination of the likelihood of a particular disaster occurring and its impact on people and infrastructure. However, the impact will be influenced not only by the severity of the disaster, but also by the vulnerability of the population and its infrastructure, a characteristic we have the potential to change. Thus, while it may be possible to reduce the frequency of disasters by reducing carbon emissions and slowing climate change, another key priority is to improve our own resilience against these events. Rather than just surviving extreme weather, we must adapt and transform.
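
The point that risk depends on vulnerability as well as on how often disasters strike can be made concrete with a simple expected-loss calculation. This is a minimal sketch under stated assumptions: the multiplicative form, the function `expected_annual_loss` and all probabilities, losses and vulnerability factors are hypothetical illustrations, not figures from the Royal Society report.

```python
def expected_annual_loss(events, vulnerability):
    """Expected yearly loss: sum of probability x potential loss x vulnerability.

    events: (annual_probability, potential_loss) pairs, one per hazard scenario.
    vulnerability: fraction (0 to 1) of the potential loss actually realised,
        a hypothetical stand-in for how exposed and prepared people and
        infrastructure are.
    """
    if not 0.0 <= vulnerability <= 1.0:
        raise ValueError("vulnerability must lie between 0 and 1")
    return sum(p * loss * vulnerability for p, loss in events)


# Hypothetical flood scenarios: (annual probability, potential loss in US$ millions).
floods = [(0.10, 50.0), (0.02, 400.0)]
doubled = [(2 * p, loss) for p, loss in floods]  # events become twice as frequent

baseline = expected_annual_loss(floods, vulnerability=0.8)
more_frequent = expected_annual_loss(doubled, vulnerability=0.8)
more_resilient = expected_annual_loss(doubled, vulnerability=0.4)
print(f"baseline {baseline:.1f}, more frequent {more_frequent:.1f}, "
      f"more frequent but less vulnerable {more_resilient:.1f}")
```

In this toy example, halving vulnerability exactly offsets a doubling in event frequency (10.4 in both cases), which is the sense in which building resilience complements reducing emissions. Real risk assessments model how losses scale with event severity rather than using a single vulnerability factor.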

The risks posed by climate change may be underestimated if exposure and vulnerability to extreme weather are not taken into account. Mapped climate and population projections for the next century show that the number of people exposed to floods, droughts and heatwaves will both increase and become more concentrated.

In their recent report, the Royal Society compared different approaches to increasing resilience to coastal flooding, river flooding, heatwaves and droughts. Broadly, approaches can be categorised as engineering, ecosystem-based, or hybrids of the two. Overall, the report favoured a portfolio of defence options, combining physical and social measures and drawing on both traditional engineering solutions and ecosystem-based approaches. Resilience strategies that incorporate natural ecosystems and processes tend to be more affordable and deliver wider societal benefits beyond simply reducing the immediate impact of the disaster.

Ecosystem-based approaches can take a variety of forms, but often involve maintaining or improving natural ecosystems. For example … Large, intact tropical forests are important in climate regulation, flood and erosion management and … Forests can also act as a physical defence, help to sustain livelihoods and provide resources for post-disaster recovery. Ecosystem-based approaches often require a lot of land and can take a long time to become established and effective; however, in the long term they tend to be more affordable and offer a wider range of benefits than engineering approaches. For this reason, they are often called ‘no regret’ options.

Evidence for the effectiveness of different resilience strategies is highly varied. Engineered approaches are often well established, with decades of strong research to back them up. In contrast, ecosystem approaches have been developed more recently and there is less evidence available on their effects. The Royal Society report indicates that for coastal flooding and drought, some of the most affordable and effective solutions are ecosystem-based, such as mangrove maintenance as a coastal defence and agroforestry to mitigate the effects of drought and maintain soil quality. In many situations, hybrid solutions may offer the best mixture of affordability and effectiveness.

It is crucial for governments to develop and implement resilience strategies and start building resilience now. This will be most effective if resilience measures are coordinated internationally, resources shared and where possible, cooperative measures implemented. By directing funds towards resilience-building, governments can reduce the need for costly disaster responses later. Governments can reduce the economic and human costs of extreme weather by focussing on minimising the consequences of infrastructure failure, rather than trying to avoid failure entirely. Prioritising essential infrastructure and focussing on minimising the harmful effects of extreme weather are likely to be the most effective approaches in preparing for future increases in extreme weather events.

Original Article:

Forecasting Extinction

By Claire Asher, on 5 January 2015

Classifying a species as either extinct or extant is important if we are to quantify and monitor current rates of biodiversity loss, but a biologist is rarely on hand to observe an extinction event. Finding the last member of a species is difficult, if not impossible, so extinction classifications are usually estimates based on the last recorded sightings of a species. Estimates always come with some inaccuracy, however, and recent research by GEE academics Dr Ben Collen and Professor Tim Blackburn aimed to investigate how accurate our best estimates of extinction really are.

Using data from experimental populations of the single-celled protist Loxocephalus, as well as wild populations of seven species of mammal, bird and amphibian, the authors tested six alternative estimation techniques for calculating the actual date of extinction. In particular, they were interested in whether the accuracy of these estimates is influenced by the rate of population decline, the search effort invested in finding remaining individuals and the total number of sightings of the species. The dataset included very rapid declines (40% per year in the Common Mist frog) and much slower ones (16% per year in the Corncrake), as well as different sampling regimes.

Their results showed that the speed of decline was a crucial factor affecting the accuracy of extinction estimates: for experimental laboratory populations, estimates were most accurate for rapid population declines, whereas in wild populations slow declines tended to produce more accurate results. The sampling regime was also important, with larger inaccuracies occurring when sampling effort decreased over time. This is probably a common situation for many species: close monitoring is common for species of high conservation priority, but interest may wane as a species comes closer and closer to extinction. The total number of sightings was also an important factor, with a larger number of sightings overall tending to produce more accurate estimates.

Finally, the estimation technique also influenced accuracy, but only in interaction with the other variables mentioned above. Some methods fared best for rapid population declines, others for slower ones. Many of the methods fare poorly when sampling effort changes over time, particularly if it decreases, although they were relatively robust to sporadic, opportunistic sampling regimes. Overall, optimal linear estimation, a statistical method which makes fewer assumptions about the exact pattern of sightings, produced the most accurate results in cases where more than 10 sightings were recorded in total.
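
For readers unfamiliar with sighting-record methods, the simplest case can be sketched in a few lines. Note that this is not optimal linear estimation: it is a simpler approach that assumes sightings occur at a constant rate until extinction, the stationary Poisson model used in Solow's (1993) test, which is exactly the assumption that declining search effort violates. Times are measured from the start of the record, and the function name and sighting years are hypothetical.

```python
def constant_rate_extinction(sightings, end_of_record, alpha=0.05):
    """Sighting-record inference assuming a constant (stationary Poisson) sighting rate.

    sightings: sighting times, measured from the start of observation (> 0).
    end_of_record: time at which observation stopped (>= last sighting).
    Returns (point_estimate, p_value, upper_bound):
      point_estimate: unbiased estimate (n + 1) / n * t_n of the extinction time
      p_value: probability that all n sightings fall at or before t_n if the
               species actually persisted to end_of_record, i.e. (t_n / T) ** n
      upper_bound: time beyond which persistence is rejected at level alpha
    """
    n = len(sightings)
    if n == 0:
        raise ValueError("need at least one sighting")
    t_n = max(sightings)
    if t_n <= 0 or end_of_record < t_n:
        raise ValueError("times must be positive and end_of_record >= last sighting")
    point_estimate = (n + 1) / n * t_n
    p_value = (t_n / end_of_record) ** n
    upper_bound = t_n / alpha ** (1 / n)  # solves (t_n / T) ** n == alpha for T
    return point_estimate, p_value, upper_bound


# Hypothetical record: years since monitoring began in which the species was seen.
sightings = [2, 5, 9, 12, 15, 18, 20]
estimate, p, upper = constant_rate_extinction(sightings, end_of_record=40)
print(f"estimated extinction year {estimate:.1f}, p = {p:.4f}, 95% upper bound {upper:.1f}")
```

If search effort fell over time, late sightings would tend to thin out before the true extinction date, pulling the last sighting earlier and making both the point estimate and the p-value misleadingly early under this model.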

This study highlights the challenges faced by ecologists trying to determine whether a species has gone extinct. Sightings of rare species are often opportunistic, and only rarely are they part of a systematic, long-term monitoring program. Thus, methods that produce accurate results in the face of changing or sporadic search efforts are of key importance to conservationists. If the history of a species’ population decline and of the sampling effort are known, then the estimation method best suited to that situation can be selected. However, this information is rarely available, so techniques that provide accurate estimates across a range of historical scenarios are likely to be of most use in predicting extinction status. Ultimately, it is extremely useful for conservationists to know whether a species is extinct or not, but estimates will always be subject to error except in rare cases (such as the passenger pigeon) where the extinction event is observed first hand. There will always be cases of species turning up years after they were declared extinct, and no estimate will ever be perfect, but understanding the sources of error and the best methods to minimise it can greatly reduce how often that happens.

Original Article:

This research was made possible by funding from the Natural Environment Research Council (NERC).

Changing Perspectives in Conservation

By Claire Asher, on 18 December 2014

Our views of the importance of nature and our place within it have changed dramatically over the last century, and the prevailing paradigm has a profound influence on conservation, from the science that is conducted to the policies that are enacted. In a recent perspectives piece for Science, GEE’s Professor Georgina Mace considered the impacts that these perspectives have on conservation practice.

Before the late 1960s, conservation thinking was largely focussed on the idea that nature is best left to its own devices. This ‘nature for itself’ framework centred around the value of wilderness and unaltered natural reserves. This viewpoint stemmed from ecological theory and research; however, by the 1970s it had become apparent that human activities were having severe and worsening impacts on species, and that this framework simply wasn’t enough. This led to a shift in focus towards the threats posed to species by human activities and how to reduce these impacts, a ‘nature despite people’ approach to conservation. This is the paradigm of protected areas and quotas, designed to reduce threats and ensure long-term sustainability.

By the 1990s, people had begun to appreciate the many and varied roles that healthy ecosystems play in human wellbeing; ecosystem services are crucial to providing the clean water, air, food, minerals and raw materials that sustain human activities. With a shift towards a more holistic, whole-ecosystem viewpoint that attempted to place economic valuations on the services nature provides, conservation thinking entered a ‘nature for people’ perspective. Within this framework, conservationists began to consider new metrics, such as the minimum viable population size of species and ecosystems, and became concerned with ensuring sustainable harvesting and exploitation. In the last few years, this view has shifted again, this time to a ‘people and nature’ perspective that values long-term, harmonious and sustainable relationships between humans and nature, and which includes more abstract benefits to humans.

Changing conservation paradigms can have a major impact on how we design conservation interventions and what metrics we monitor to assess their success. Standard metrics of conservation, such as the IUCN classification systems, can be easily applied to both a ‘nature for itself’ and a ‘nature despite people’ framework. In contrast, a more economic approach to conservation requires valuations of ecosystems and the services they provide, which are far more complex to measure and calculate. Even more difficult is measuring the non-economic benefits to human wellbeing that are provided by nature. The recent focus on these abstract benefits may make the success of conservation interventions more difficult to assess under this framework.

The scientific tools, theory and techniques available to conservation scientists have not always kept up with changing conservation ideologies, and differing perspectives can lead to friction between scientists and policy-makers. In the long term, a framework that recognises all of these perspectives may be the most effective in directing and appraising conservation management. Certainly, greater stability in the way in which we view our place in nature would afford science the opportunity to catch up and develop effective, empirical metrics that can be meaningfully applied to conservation.

Original Article:

Function Over Form:

Phenotypic Integration and the Evolution of the Mammalian Skull

By Claire Asher, on 8 December 2014

Our bodies are more than just a collection of independent parts – they are complex, integrated systems that rely upon precise coordination in order to function properly. In order for a leg to function as a leg, the bones, muscles, ligaments, nerves and blood vessels must all work together as an integrated whole. This concept, known as phenotypic integration, is a pervasive characteristic of living organisms, and recent research in GEE suggests that it may have a profound influence on the direction and magnitude of evolutionary change.

Phenotypic integration explains how multiple traits, encoded by hundreds of different genes, can evolve and develop together such that the functional unit (a leg, an eye, the circulatory system) fulfils its role. Phenotypic integration could be complete, with every trait interrelated and potentially showing correlated evolution. However, theory and empirical data suggest that it is more commonly modular, with strong integration within functional modules and weaker integration between them. This modularity represents a compromise between a total lack of trait coordination (which would allow evolution to break down functional phenotypic units) and the evolutionary inflexibility of complete integration. Understanding phenotypic integration and its consequences is therefore important if we are to understand how complex phenotypes respond to natural selection.
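
The contrast between strong integration within modules and weaker integration between them is often summarised by comparing average trait correlations inside and across modules. The minimal sketch below does this for a hypothetical four-trait correlation matrix split into two hypothetical modules. It is a generic summary for illustration, not the model used in the study, and the function name `integration_summary` is an assumption.

```python
from itertools import combinations


def integration_summary(corr, modules):
    """Mean |r| for trait pairs within the same module vs. in different modules.

    corr: square correlation matrix (list of lists) among traits.
    modules: module label for each trait, in the same order as corr.
    """
    within, between = [], []
    for i, j in combinations(range(len(corr)), 2):
        target = within if modules[i] == modules[j] else between
        target.append(abs(corr[i][j]))
    if not within or not between:
        raise ValueError("need trait pairs both within and between modules")
    return sum(within) / len(within), sum(between) / len(between)


# Hypothetical traits: two jaw measurements and two braincase measurements.
corr = [
    [1.0, 0.8, 0.1, 0.2],
    [0.8, 1.0, 0.0, 0.1],
    [0.1, 0.0, 1.0, 0.7],
    [0.2, 0.1, 0.7, 1.0],
]
modules = ["jaw", "jaw", "braincase", "braincase"]
w, b = integration_summary(corr, modules)
print(f"within-module mean |r| = {w:.2f}, between-module mean |r| = {b:.2f}")
```

A clearly higher within-module value, as in this toy matrix, is the signature of modular rather than complete integration.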

It is thought that phenotypic integration is likely to constrain evolution and render certain phenotypes impossible if their evolution would require even temporary disintegration of a functional module. However, integration may also facilitate evolution by coordinating the responses of traits within a functional unit. Recent research by GEE academic Dr Anjali Goswami and colleagues sought to understand the evolutionary implications of phenotypic integration in mammals.

Expanding on existing mathematical models, and applying these to data from 1635 skulls from nearly 100 different mammal species, including placental mammals, marsupials and monotremes, Dr Goswami investigated the effect of phenotypic integration on evolvability and respondability to natural selection. Comparing a model with two functional modules in the mammalian skull against a model with six, the authors found greater support for the larger number of functional modules. Monotremes, whose skulls may be subject to different selection pressures due to their unusual life history, did not fit this pattern and may have undergone changes in cranial modularity during the early evolution of mammals. Compared with random simulations, real mammal skulls tend to be either more or less disparate from each other, suggesting that phenotypic integration may both constrain and facilitate evolution under different circumstances. The authors report a strong influence of phenotypic integration on both the magnitude and trajectory of evolutionary responses to selection, although they found no evidence that it influences the speed of evolution.
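
Evolvability and respondability are commonly defined (following Hansen and Houle, 2008) from a trait variance-covariance matrix G and a unit-length selection gradient β: evolvability, β'Gβ, is the response projected onto the direction of selection, while respondability, |Gβ|, is the total magnitude of the response in any direction. The sketch below uses two hypothetical two-trait matrices to show how stronger integration (a larger covariance) raises evolvability along the shared axis and lowers it at right angles, one way integration can both facilitate and constrain. The matrices, gradients and helper names are illustrative assumptions; the study's own models and data are far more involved.

```python
import math


def _unit(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def _mat_vec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def evolvability(G, beta):
    """e = b'Gb for unit b along beta: response in the direction of selection."""
    b = _unit(beta)
    return sum(x * y for x, y in zip(b, _mat_vec(G, b)))


def respondability(G, beta):
    """r = |Gb| for unit b along beta: total magnitude of the response."""
    response = _mat_vec(G, _unit(beta))
    return math.sqrt(sum(x * x for x in response))


# Hypothetical 2-trait matrices with equal variances but different covariance.
weak = [[1.0, 0.1], [0.1, 1.0]]
strong = [[1.0, 0.8], [0.8, 1.0]]
along = [1.0, 1.0]    # selection favouring both traits together
across = [1.0, -1.0]  # selection pulling the traits apart

for name, G in (("weak", weak), ("strong", strong)):
    print(name,
          round(evolvability(G, along), 2),
          round(evolvability(G, across), 2),
          round(respondability(G, across), 2))
```

With strong covariance, selection along the shared axis meets an evolvability of 1.8 against 0.2 across it; with weak covariance the two directions are nearly equal (1.1 versus 0.9).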

Thus, phenotypic integration between functional modules appears to have a profound impact on the direction and extent of evolutionary change, and may tend to favour convergent evolution of modules that perform the same function (e.g. bird and bat wings for powered flight) by forcing lineages down certain evolutionary trajectories. The influence of phenotypic integration on the speed, direction and magnitude of evolution has important implications for the study of evolution, particularly when analysing fossil remains, since it can make estimates of the timing of evolutionary events more difficult. Failing to incorporate functional modules into models of evolution will likely reduce their accuracy and could produce erroneous results.

Phenotypic integration is what holds the functional units within an organism together in the face of natural selection. Modularity enables traits to evolve independently when their functions are not strongly interdependent, and prevents evolution from disintegrating functional units. Through these actions, phenotypic integration can constrain or direct evolution in ways that might not be predicted from analyses of individual traits. This can have important impacts upon the speed, magnitude and direction of evolution, and may tend to favour convergence.

Original Article:

This research was made possible by support from the Natural Environment Research Council (NERC), and the National Science Foundation (NSF).

The Best of Both Worlds:

Planning for Ecosystem Win-Wins

By Claire Asher, on 16 November 2014

The normal and healthy function of ecosystems is not only important for conserving biodiversity; it is also of utmost importance for human wellbeing. Ecosystems provide us with a wealth of valuable ecosystem services, from food to clean water and fuel, without which our societies would crumble. However, it is rare that only a single person, group or organisation places demands on any given ecosystem service, and in many cases multiple stakeholders compete over the use of the natural world. In these cases, although trade-offs are common, win-win scenarios are also possible, and recent research by GEE academics investigates how we can achieve these win-wins in our use of ecosystem services.

Ecosystem services depend upon the ecological communities that produce them and are rarely the product of a single species in isolation. Instead, they arise from the complex interactions of multiple species within a particular ecological community. A great deal of research in recent years has focussed on maintaining ecosystem services in the long term; however, pressure on ecosystem services worldwide is likely to increase as human demands on natural resources soar. Ecosystem services are influenced by complex ecosystem feedbacks and food-web dynamics that are still relatively poorly understood, and increased pressures on ecosystems may lead to unexpected consequences. Although economic signals respond rapidly to global and national changes, ecosystem services are thought to lag behind, often by decades, making it difficult to predict and fully understand how our actions will influence the availability of crucial services in the future.

Trade-offs in the use of ecosystem services occur when the provision of one ecosystem service is reduced by increased use of another, or when one stakeholder takes more of an ecosystem service at the expense of other stakeholders. However, this needn’t be the case: in some scenarios it is possible to achieve win-win outcomes, preserving ecosystem services and providing stakeholders with the resources they need. Although attractive, win-win scenarios may be difficult to achieve without carefully planned interventions, and recent research from GEE indicates they are not as common as we might like.

In a comprehensive meta-analysis of ecosystem services case studies from 2000 to 2013, GEE academics Prof Georgina Mace and Dr Caroline Howe show that trade-offs are far more common than win-win scenarios. Across 92 studies covering over 200 recorded trade-offs or synergies in the use of ecosystem services, trade-offs were three times more common than win-wins. The authors identified a number of factors that tended to lead to trade-offs rather than synergies. In particular, if one or more of the stakeholders has a private interest in the natural resources available, trade-offs are much more likely: 81% of such cases resulted in trade-offs. Furthermore, trade-offs were far more common when the ecosystem services in question were ‘provisioning’ in nature, that is, products we directly harvest from nature such as food, timber, water, minerals and energy. Win-wins are more common when regulating (e.g. nutrient cycling and water purification) or cultural (e.g. spiritual or historical value) ecosystem services are in question. In the case of trade-offs, there were also factors that predicted who the ‘winners’ would be: winners were three times more likely to hold a private interest in the natural resource in question, and tended to be wealthier than losing stakeholders. Overall, there was no generalisable context that predicted win-win scenarios, suggesting that although trade-off indicators may be useful in strategic planning, the outcome of our use of ecosystem services is not inevitable, and win-wins are possible.
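
Summaries such as "81% of cases with a private interest ended in trade-offs" are cross-tabulations of case outcomes against case attributes. The minimal sketch below shows that tally over hypothetical case records; the field names, the six example cases and the function `tradeoff_share` are invented for illustration and are not the study's data or code.

```python
from collections import Counter


def tradeoff_share(cases, factor):
    """Fraction of cases with outcome 'trade-off' for each level of `factor`."""
    totals, tradeoffs = Counter(), Counter()
    for case in cases:
        level = case[factor]
        totals[level] += 1
        if case["outcome"] == "trade-off":
            tradeoffs[level] += 1
    return {level: tradeoffs[level] / totals[level] for level in totals}


# Hypothetical case records (not from the meta-analysis).
cases = [
    {"private_interest": True,  "service": "provisioning", "outcome": "trade-off"},
    {"private_interest": True,  "service": "provisioning", "outcome": "trade-off"},
    {"private_interest": True,  "service": "regulating",   "outcome": "win-win"},
    {"private_interest": False, "service": "provisioning", "outcome": "trade-off"},
    {"private_interest": False, "service": "cultural",     "outcome": "win-win"},
    {"private_interest": False, "service": "regulating",   "outcome": "win-win"},
]
print(tradeoff_share(cases, "private_interest"))
print(tradeoff_share(cases, "service"))
```

A real meta-analysis would also need to account for unequal numbers of cases per level, since small groups (such as the single "cultural" case here) give unstable proportions.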

They also identified major gaps in the literature that need to be addressed if we are to gain a better understanding of how win-win scenarios may be possible in human use of ecosystems. In particular, case studies are currently only available for a relatively limited geographic distribution, and tend to focus on provisioning services. Thus, the lower occurrence of trade-offs for regulating and cultural ecosystem services may in part reflect a paucity of data on these types of services. Finally, relatively few studies have attempted to explore the link between trade-offs and synergies in ecosystem services and the ultimate effect on human wellbeing.

Understanding how and why trade-offs and synergies occur in our use of ecosystem services will be valuable in planning for win-win scenarios from the outset. Planning of this kind may be necessary if we are to achieve and maintain balance in our use of the natural world in the future.

Original Article:

This research was made possible by support from the Ecosystem Services for Poverty Alleviation (ESPA) programme, which is funded by the Natural Environment Research Council (NERC), the Economic and Social Research Council (ESRC), and the UK Department for International Development (DFID).

Life Aquatic:

Diversity and Endemism in Freshwater Ecosystems

By Claire Asher, on 6 November 2014

Freshwater ecosystems are ecologically important, providing a home to hundreds of thousands of species and offering us vital ecosystem services. However, many freshwater species are currently threatened by habitat loss, pollution, disease and invasive species. Recent research from GEE indicates that freshwater species are at greater risk of extinction than terrestrial species. Using data on over 7000 freshwater species across the world, GEE researchers also show a lack of correlation between patterns of species richness across different freshwater groups, suggesting that biodiversity metrics must be carefully selected to inform conservation priorities.

Freshwater ecosystems are of great conservation importance, estimated to provide habitat for over 125,000 species of plants and animals, as well as crucial ecosystem services such as flood protection, food, water filtration and carbon sequestration. However, many freshwater species are threatened and in decline. Freshwater ecosystems are highly connected, meaning that habitat fragmentation can have serious implications for species, while pressures such as pollution, invasive species and disease can be easily transmitted between different freshwater habitats. Recent work by GEE academic Dr Ben Collen, in collaboration with academics from the Institute of Zoology, investigated the global patterns of freshwater diversity and endemism using a new global-level dataset covering over 7000 species of freshwater mammals, amphibians, reptiles, fishes, crabs and crayfish. Many freshwater species occupy quite small ranges, and the authors were also interested in the extent to which species richness and the distribution of threatened species correlated between taxonomic groups and geographical areas.

The study showed that almost a third of all freshwater species considered are threatened with extinction, with remarkably little large-scale geographical variation in threats. Freshwater diversity is highest in the Amazon basin, largely driven by extremely high diversity of amphibians in this region. Other important regions for freshwater biodiversity include the south-eastern USA, West Africa, the Ganges and Mekong basins, and areas of Malaysia and Indonesia. However, the geographical pattern of species richness was not consistent across the different freshwater groups.

Freshwater species in certain habitats are more at risk than others – 34% of species living in rivers and streams are under threat, compared to just 20% of marsh and lake species. It appears that flowing freshwater habitats may be more severely affected by human activities than still-water ones. Freshwater species are also consistently more threatened than their terrestrial counterparts. Reptiles, according to this study, are particularly at risk of extinction, with nearly half of all species threatened or near threatened. This makes reptiles the most threatened freshwater taxon considered in this analysis. The authors identified the key processes threatening freshwater species in this dataset – habitat degradation, water pollution and over-exploitation are the biggest risks. Habitat loss and degradation are the most common threat, affecting over 80% of threatened freshwater species.

The relatively low congruence between taxa in the distribution of species richness and threatened species in freshwater ecosystems suggests that conservation planning that considers only one or a few taxa may miss crucial areas of conservation priority. For example, conservation planning rarely considers patterns of invertebrate richness, but if invertebrates are affected differently, and in different regions, than reptiles and amphibians, say, then initiatives built around those better-studied groups may overlook them. Further, different ways of measuring the health of populations and ecosystems yield different patterns. The metrics we use to identify threatened species, upon which conservation decisions are based, must be carefully considered if we are to succeed in protecting valuable ecosystems and the services they provide.

Original Article:

This research was made possible by funding from the Rufford Foundation and the European Commission

Handicaps, Honesty and Visibility

Why Are Ornaments Always Exaggerated?

By Claire Asher, on 23 October 2014

Sexual selection is a form of natural selection that favours traits that increase mating success, often at the expense of survival. It is responsible for a huge variety of characteristics and behaviours we observe in nature; most conspicuously, sexual selection explains elaborate ornaments such as the antlers of red deer and the tail of the male peacock. There are many theories to explain how and why these ornaments evolve; it may be a positive feedback loop of female preference and selection on males, or ornaments may signal something useful, such as the genetic quality of the male carrying them. One way or another, despite the energetic costs of growing these ornaments and the increased risk of predation that comes with greater visibility, sexually selected ornaments must increase the overall fitness of the individuals carrying them. They do so by ensuring the bearer gets more mates and produces more offspring.

Theory predicts that sexually selected traits should be just as likely to become larger and more ostentatious as they are to be reduced, smaller and less conspicuous. However, almost all natural examples refer to exaggerated traits. So where are all the reduced sexual traits?

Runaway Ornaments

Previous work by GEE researchers Dr Sam Tazzyman and Professor Andrew Pomiankowski has highlighted one possible explanation for this apparent imbalance in nature – if sexually selected traits are smaller, they are harder to see. Using mathematical models, last year they showed that differences in the ‘signalling efficacy’ of reduced and exaggerated ornaments were sufficient to explain the bias we see in nature. Since the purpose of sexually selected ornaments is to signal something to females, if reduced traits tend to be worse at signalling, then it makes sense that they would rarely emerge in nature. Their model covered the case of runaway selection, whereby sexually selected traits emerge somewhat spontaneously due to an inherent preference in females. It goes like this – if, for whatever reason, females have an innate preference for a certain trait in males, then any male who randomly acquires this trait will get more mates and produce more offspring. Those offspring will include males carrying the trait and females with a preference for the trait, and over time this creates a feedback loop that can produce extremely exaggerated traits. Under this model of sexual selection, differences in signalling efficacy can be sufficient to explain why we so rarely see reduced traits.

Handicaps

However, this is just one model for how sexually selected ornaments can emerge, so this year GEE researchers Dr Tazzyman and Prof Pomiankowski, along with Professor Yoh Iwasa from Kyushu University, Japan, have expanded their research to include another possible explanation – the handicap hypothesis. According to the handicap principle, far from being paradoxical, sexually selected ornaments may be favoured precisely because they are harmful to the individual who carries them. In this way, only the very best quality males can cope with the costs of carrying huge antlers or brightly coloured feathers, and so the ornament acts as a signal to females indicating which males carry the best genes. This is an example of honest signalling – the ornament and the condition or quality of the carrier are inextricably linked, and there is no room for poor quality males to cheat the system.

Using mathematical models, the authors investigated four possible causes for the absence of reduced sexual ornaments in the animal kingdom. Firstly, as in the case of runaway selection, differences in signalling efficacy might explain the bias. Under the handicap hypothesis, ornaments act as signals of genetic quality, so it would be little surprise if their visibility or effectiveness at conveying the signal were important. Smaller ornaments may simply be worse at attracting the attention of females, meaning that the benefits of the sexual ornament don’t outweigh the costs. Secondly, the costs for females of preferring males with reduced ornaments may be higher than for exaggerated ornaments, because it is easier to find males with exaggerated traits. Again, this could theoretically tip the balance of costs and benefits away from selecting for reduced ornaments. A third explanation is that the costs of the ornament itself are different for reduced and exaggerated traits. This seems quite likely in many cases, since a large ornament would require more resources to grow. But in this case selection would be more likely to produce reduced ornaments with lower costs! In order to replicate the excess of exaggerated traits we see in nature, reduced traits would have to cost more – a much less biologically plausible scenario. Finally, if large ornaments tend to be more costly, then they may be more likely to be condition-dependent, a key tenet of the handicap principle. Exaggeration may be more effective at producing honest signalling, and exaggerated traits may therefore be more useful to females as a signal of quality.

Honest Signals

The results of the modelling highlighted two key ways in which exaggerated traits might be favoured by the handicap process. In the model, when exaggerated traits have a higher signalling efficacy or are more strongly condition-dependent, exaggerated traits tend to be more extreme than reduced traits. The model still predicts that reduced traits are equally likely to evolve, just that they will tend to be less extreme examples of ornamentation. The other two possible explanations, higher costs either to females that prefer small ornaments or to the males that carry them, failed to consistently produce the observed lack of reduced ornaments. Both explanations could produce this outcome under certain circumstances, but in other circumstances they produced the opposite effect. Exaggerated ornaments, therefore, may be more common because they are more effective signals that are more likely to be honest.

Based on this and previous work by Dr Tazzyman and colleagues, asymmetries in the signalling efficacy of reduced and exaggerated traits can be sufficient to explain the lack of reduced traits in nature. Whether ornaments evolve via runaway selection or the handicap process, asymmetrical signalling efficacy tends to favour exaggerated traits. However, in the case of the handicap process, asymmetries in the condition-dependence of the trait may also be involved. These two explanations are not mutually exclusive, and it is likely that in reality many factors are involved.

Importantly, for both explanations and for both types of sexual selection, the models still predict that exaggeration and reduction will be equally likely to evolve. The differences emerge in how extreme the ornament will become. Thus, this work predicts that there are many examples of reduced ornaments in nature; perhaps we just haven’t found them yet. This is especially likely if reduced traits, which might be less noticeable anyway, also tend to be less extreme. Alternatively, there may be other asymmetries not yet considered that make reduced ornaments less likely to emerge in the first place.

Where Next?

The authors suggest some very interesting avenues for future research. Firstly, they suggest that more complex models investigating how multiple different asymmetries may act together to produce sexually selected ornaments will get us closer to understanding the intricate dynamics of sexual ornamentation. Secondly, these models have only considered cases where evolution of the trait eventually settles down – at a certain ornament size, the costs and benefits of possessing it are equal, and the trait should remain at this size. However, in some cases the dynamics are more complex, and traits undergo cycles of exaggeration and reduction. Research into the impact of asymmetries in condition-dependence and signalling efficacy in these ‘nonequilibrium’ models would yield fascinating insights into the evolution of sexual ornaments.

Original Article:

This research was made possible by funding from the Natural Environment Research Council (NERC), the Engineering and Physical Sciences Research Council (EPSRC), and the European Research Council (ERC)

PREDICTS Project: Land-Use Change Doesn’t Impact All Biodiversity Equally

By Claire Asher, on 13 October 2014

Humans are destroying, degrading and depleting our tropical forests at an alarming rate. Every minute, an area of Amazonian rainforest equivalent to 50 football pitches is cleared of its trees, vegetation and wildlife. Across the globe, tropical and sub-tropical forests are being cut down to make way for expanding towns and cities, for agricultural land and pasture and to obtain precious fossil fuels. Even where forests remain standing, hunting and poaching are stripping them of their fauna, degrading the forest in the process. Habitat loss and degradation are the greatest threats to the world’s biodiversity. New research from the PREDICTS project investigates the patterns of species’ responses to changing land-use in tropical and sub-tropical forests worldwide. In the most comprehensive analysis of the responses of individual species to anthropogenic pressures to date, the PREDICTS team reveal strong effects of human disturbance on the geographical distribution and abundance of species. Although some species thrive in human-altered habitats, species that rely on a specific habitat or diet, and that tend to have small geographical ranges, are particularly vulnerable to habitat disturbance. Understanding the intricacies of how different species respond to different types of human land-use is crucial if we are to implement conservation policies and initiatives that will enable us to live more harmoniously with wildlife.

Habitat loss and degradation cause immediate species losses, but also alter the structure of ecological communities, potentially destabilising ecosystems and causing further knock-on extinctions down the line. As ecosystems start to fall apart, the valuable ecosystem services we rely on may also dry up. There is now ample evidence that altering habitats, particularly degrading primary rainforest, has disastrous consequences for many species; however, not all species respond equally to land-use change. The functional traits of species, such as body size, generation time, mobility, diet and habitat specificity, can have a profound impact on how well a species copes with human activities. The traits that make species particularly vulnerable to human encroachment (slow reproduction, large body size, small geographical range, highly specific dietary and habitat requirements) are not evenly distributed geographically. Species possessing these traits are more common in tropical and sub-tropical forests, areas that are under the greatest threat from human habitat destruction and loss of vegetation over the coming decades. The challenge in recent years, therefore, has been to develop statistical models that allow us to investigate this relationship more precisely, and to collect sufficient data to test hypotheses.

There are three key ways we might choose to investigate how species respond to land-use change. Many studies have investigated species-area relationships, which model the occurrence or abundance of species in relation to the size of available habitat. These studies have revealed important insights into the damage caused by habitat fragmentation; however, they rarely consider how different species respond differently. Another common approach uses species distribution models to predict the loss of species in relation to habitat and climate suitability. These models can be extremely powerful, but require large and detailed datasets that are not available for many species, particularly understudied creatures such as invertebrates. The PREDICTS team therefore opted for a third option to investigate human impacts on species. The PREDICTS project has collated data from over 500 studies investigating the response of individual species to land-use change, and their database now includes over 2 million records for 34,000 species. Using this extensive dataset, the authors were able to model the relationship between land-use type and both the occurrence and abundance of species. One of the huge benefits of this approach is that the dataset enabled them to investigate these relationships in a wide range of different taxa, including birds, mammals, reptiles, amphibians and the often-neglected invertebrates.

Modelling Biodiversity

In general, human-dominated habitats, such as urban and cropland environments, tended to harbour fewer species than more natural, pristine habitats. Community abundance in disturbed habitats was between 8% and 62% of the abundance found in primary forest, and urban environments were consistently the worst for overall species richness. In these environments, human population density and a lack of forest cover were key factors reducing the number of species. Human population density could impact species directly through hunting, or more indirectly through expanding infrastructure. However, these factors impact different species in different ways, so the authors next investigated different taxa separately.

Birds appear to be particularly poor at living in urban environments, most likely because they respond poorly to increases in human population. Forest specialists and narrow-ranged birds fare especially badly in urban environments; only 10% of forest specialists found in primary forest are able to survive in urban environments. Although the effect was less extreme, mammals were also less likely to occur in secondary forest and forest plantations than primary forest, and forest specialists were particularly badly affected.

Urban Pests

Although many species were unable to exist in disturbed habitats, those species that persisted were often more abundant in human-modified habitats than in pristine environments. This isn’t particularly surprising – some species happen to possess characteristics that make them well suited to urban and disturbed landscapes; these are often the species that we eventually start to consider pests because they are so successful at living alongside us (think pigeons, rats, foxes). These species tend to be wide-ranging generalists, although sometimes habitat specialists do well in human-altered habitats if we happen to alter the habitat in just the right way. Pigeons, for example, are adapted to nesting on cliffs, which our skyscrapers and buildings inadvertently mimic extremely well. The apparent success of some species in more open habitats such as cropland and urban environments might also be partly caused by increased visibility – it’s far easier to see a bird or reptile in an urban environment than in dense primary forest! This doesn’t explain the entire pattern, however, and clearly some species are simply more successful in human-altered habitats. They are in the minority, though.

Do Reptiles Prefer Altered Habitats?

One interesting finding was that for herptiles (reptiles and amphibians), more species were found in habitats with a higher human population density. This rather unexpected relationship might reflect a general preference in herptiles for more open habitats. Consistent with this, the authors found fewer species in pristine forest than in secondary forest. However, upon closer inspection the authors found that herptiles do not all respond in the same way. Reptiles showed a U-shaped relationship with human population density – the occurrence of species was highest when there were either lots of people or no people at all. By contrast, amphibians showed a linear relationship, with increases in human population density mirrored by increases in the number of species present. This highlights the importance of investigating fine-scale differences between species in their responses to human activities.

This study is the first step towards more detailed, comprehensive analyses of the responses of species to human activities. The power of this study comes not only from its large dataset and broad spectrum of taxonomic groups, but also from its ability to directly couple land-use changes with species’ traits such as range size and habitat specialism. The authors say that the next major step would be to incorporate interactions between species in these models – the community structure of an ecosystem can have profound effects on the species living in it, and changes in the abundance of any individual species do not happen in isolation from the rest of the community.

Check out the PREDICTS Project for more information!

Original Article:

This research was made possible by funding from the Natural Environment Research Council (NERC), and the Biotechnology and Biological Sciences Research Council (BBSRC).

Calculated Risks:

Foraging and Predator Avoidance in Rodents

By Claire Asher, on 3 October 2014

Finding food is one of the most important tasks for any animal – most animal activity is focused on this job. But finding food usually involves some risks – leaving the safety of your burrow or nest to go out into a dangerous world full of predators, disease and natural hazards. Animals should therefore be expected to minimise these risks as much as possible – foraging at safer times of day, especially when there’s lots of food around anyway. This hypothesis is known as the “risk allocation hypothesis”, but it has rarely been tested in wild animals. Recent research from ZSL academic Dr Marcus Rowcliffe shows that the behaviour of the Central American agouti certainly seems to follow this pattern, and highlights the amazing plasticity of animal behaviour.

Foraging, although essential, is always a compromise between finding food and avoiding being eaten by a predator. The aim of the game is to eat as much as you can whilst avoiding being eaten yourself, in order to live long enough and grow large enough to reproduce. Since finding food is one of the most important things an animal has to do, foraging behaviour has been subject to strong natural selection.

The risk allocation hypothesis predicts that prey species should concentrate their foraging effort at times of day that pose the least risk. So, if your main predator is active during the day, you had best forage at night, and vice versa. There ought to be some flexibility in this system too, though – if food in your habitat is plentiful, it should be easy to find enough to fill up on, and there is little need to take additional risks. Conversely, if food is scarce, you may be forced to take more risks than usual by foraging for longer or at more dangerous times of day.
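
To make the risk allocation idea concrete, here is a toy decision rule written as a short Python sketch. It is a hypothetical illustration, not the model used in the agouti study: it assumes an animal ranks the hours of the day by predation risk and forages in the safest hours until it has gathered a fixed daily amount of food. The functions hour_risk and allocate_foraging, the risk values and the food yields are all invented for illustration.

```python
from typing import List


def hour_risk(hour: int) -> float:
    """Hypothetical predation risk for a given hour (0-23).

    Assumption: night (before 06:00 or from 19:00) is roughly three to four
    orders of magnitude riskier than daytime, and daytime risk rises slightly
    towards dawn and dusk. These numbers are illustrative only.
    """
    if hour < 6 or hour >= 19:
        return 1.0
    return 1e-4 * (1 + abs(hour - 12))


def allocate_foraging(risk_by_hour: List[float],
                      food_per_hour: List[float],
                      food_needed: float) -> List[int]:
    """Greedily choose the safest hours until enough food has been gathered.

    Returns the chosen hours in chronological order. If the food target
    cannot be met, every hour is used.
    """
    # Rank hours from lowest to highest risk; sorted() is stable, so tied
    # hours keep their chronological order.
    ranked = sorted(range(len(risk_by_hour)), key=lambda h: risk_by_hour[h])
    chosen: List[int] = []
    gathered = 0.0
    for hour in ranked:
        if gathered >= food_needed:
            break
        chosen.append(hour)
        gathered += food_per_hour[hour]
    return sorted(chosen)


if __name__ == "__main__":
    risk = [hour_risk(h) for h in range(24)]
    # Fruit-rich habitat: each hour of foraging yields twice as much food.
    rich = allocate_foraging(risk, [2.0] * 24, food_needed=10.0)
    # Fruit-poor habitat: same daily requirement, half the yield per hour.
    poor = allocate_foraging(risk, [1.0] * 24, food_needed=10.0)
    print("rich:", rich)
    print("poor:", poor)
```

Run as a script, this prints rich: [10, 11, 12, 13, 14] and poor: [7, 8, 9, 10, 11, 12, 13, 14, 15, 16]. Under these assumed numbers the animal in the fruit-rich habitat starts later and stops earlier, which points in the same direction as the pattern described for agoutis, although nothing here is fitted to the study's data. A greedy ranking is simply the easiest rule to read; a fuller model would weigh the marginal risk of each extra hour against its energetic return.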

In a recent study, academics from the Institute of Zoology, London, in collaboration with colleagues around the world, investigated this trade-off between finding food and avoiding predators in the Central American agouti (Dasyprocta punctata). The agouti’s biggest problem in life is the ocelot (Leopardus pardalis), which primarily feeds on agoutis. Using radio telemetry and camera trapping, the researchers investigated the activity patterns of agoutis living in areas with lots of Astrocaryum fruits and of those living in areas with fewer. They were able to generate an enormous dataset – over 30,000 camera trap records of agoutis, with a further 50 individuals radio-collared and tracked!

Ocelots are highly nocturnal, and across nearly 500 camera trap observations, ocelots were almost exclusively observed at night. During this time, agoutis were at great risk – the predation risk from ocelots was estimated to be four orders of magnitude higher between dusk and dawn than during daylight hours. The foraging activity of agoutis mirrored this: activity was highest during the day, with peaks first thing in the morning and again later in the afternoon. Most interestingly, these patterns differed between agoutis living in habitats with abundant fruit and those living where fruit was sparse. When food availability was high, agoutis took fewer risks, leaving their burrows later in the morning and returning home earlier at the end of the day. Overall their activity levels were lower, presumably because they didn’t need to forage for as long to find all the food they needed.

The results of this study support the risk allocation hypothesis, and show that animals can weigh up risks and benefits based upon environmental conditions and alter their behaviour so as to minimise risks and maximise benefits. Only when food availability is high can agoutis afford to have a lie-in and avoid any ocelots returning home late.

Original Article:

This research was made possible by funding from the National Science Foundation (NSF) and the Netherlands Foundation for Scientific Research
