#LearnHack 7 reflections

By Geraldine Foley, on 8 February 2024

Over the weekend of 26–28 January, I helped to facilitate, and took part in, the seventh iteration of #LearnHack.

#LearnHack is a community hackathon organised by an interdisciplinary UCL team. The first event was held in November 2015 at IDEALondon, in collaboration with UCL Innovation and Enterprise. The 2024 event was the first to be run in a hybrid format. It took place at the School of Management in Canary Wharf, in collaboration with the Faculty of Engineering, Digital Education and UCL Changemakers. Participants came from 12 different UCL departments and were joined by alumni and external guests from Jisc. Everyone was invited to submit project proposals for improving UCL based on pre-agreed themes. This year the themes were AI and Assessment, along with the overlap between the two.

Being fairly new to UCL, I had not come across this event before. When I heard about its ethos, which is to empower a community of staff, students, researchers and alumni to tackle challenges collaboratively and creatively, it sounded right up my street. I am a big advocate of playful learning and of creating a safe space for experimentation and failure. I also liked the interdisciplinary approach, which encourages people from all backgrounds to work together and learn from each other.

Anyone with a valid UCL email address can submit a project proposal to be worked on over the weekend, and anyone can run a learning session to share their skills or ideas with participants. Everyone is encouraged to attend the welcome talks on the Friday evening to hear about the different projects, get to know each other and form teams. Participants then have the weekend to work on their chosen project and take part in learning sessions.

I’m always up for a challenge, so as well as putting forward a project proposal and running a learning session, I helped to facilitate for the online attendees on the Friday evening and Saturday morning. This made for a packed weekend, but it meant I got to experience all the different elements of #LearnHack, including joining online on the second day.

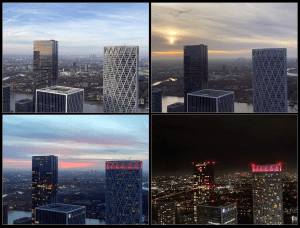

The venue was amazing, with great views of London, and the School of Management spaces were perfect for collaboration and hybrid events. The learning sessions were great. I particularly enjoyed learning how to use Lumi and GitHub to create and host H5P activities outside of Moodle so that they can be shared externally. I also found out about the game that ARC had devised to help engineers and developers learn about the issues associated with generative AI, in which players can help prevent or create an AI Fiasco.

My own session on making a playful AI chatbot was run online, but many people joined from the room. The session encouraged people to experiment with different types of chatbots and have a go at creating their own. In the short time we had, we managed to create some interesting applications, including a bot that accurately answered questions on using Moodle, Zoom and Turnitin. We also explored how a bot’s personality can affect users’ interactions with it and their perceptions of the accuracy of its responses, and we had some interesting discussions about the ethical issues involved in users uploading material to datasets.

In between games, food and learning sessions, teams worked on five different projects. I was impressed with all the project teams and the work they managed to produce in such a short space of time. The winning team stood out in particular, having created a working prototype using ChatGPT. Their project aims to reduce the time that medical science students spend manually searching through articles for replicable research. The team now has UCL Changemakers funding to build an optimiser that filters biomedical research papers and extracts high-quality quantitative methods. It is hoped that this ‘protocol optimiser’ will streamline workflows for researchers and students looking for suitable lab work. I am looking forward to following the development of their project, and hopefully they will report back at a Changemakers event later in the year.

Despite lower attendance than we had hoped, feedback from participants was positive, with calls to raise awareness among students through promotion during Freshers’ Week and by UCL Careers to encourage more students to join. Personally, I had a great time, although next time I wouldn’t try to do quite so much: I would either stick to working on a project or to facilitating and running sessions. The Faculty of Engineering has already given the go-ahead for #LearnHack8, and we are currently exploring the possibility of running some mini #LearnHack events before then. Watch this space for more details, and if you have an idea for a project, get in touch.
